Codefresh API pipeline integration

Integrate Codefresh CI pipelines with other systems

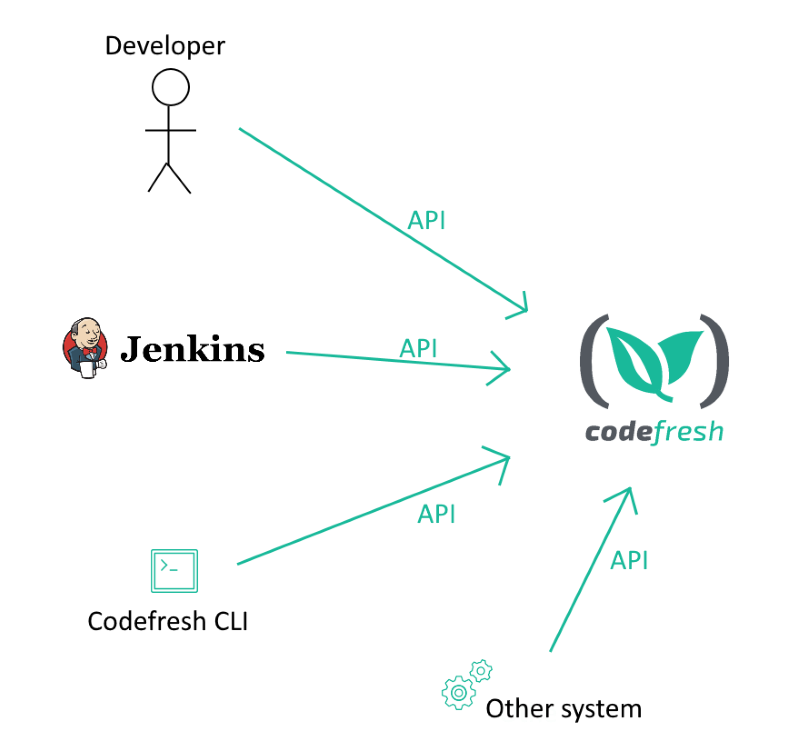

Codefresh offers a comprehensive API that you can use to integrate with any other application or solution you already have.

The full details of the API are documented at https://g.codefresh.io/api/.

You can use the API in various ways:

- From your local workstation, with any tool that speaks HTTP (such as Postman, HTTPie, or curl).

- From another HTTP-enabled tool such as Jenkins. For example, you can trigger Codefresh pipelines from Jenkins jobs.

- Through the Codefresh command line interface (CLI), which itself uses the API.

- Programmatically from any other system. You can use your favorite programming language to make HTTP calls to Codefresh.

- Via the Codefresh Terraform provider.

The Codefresh API is updated whenever new features are added to the Codefresh platform, so you can expect new functionality to appear in the API as well.

Ways to use the Codefresh API

There are several ways to use the API. Some of the most popular ones are:

- Triggering builds from another system. You can start a Codefresh pipeline from any other internal system that you already have in your organization.

- Getting the status of builds in another system.

- Creating pipelines externally. You don’t have to use the Codefresh UI to create pipelines. You can create them programmatically using your favorite template mechanism. You can reuse pipelines using your own custom implementation if you have special needs in your organization.

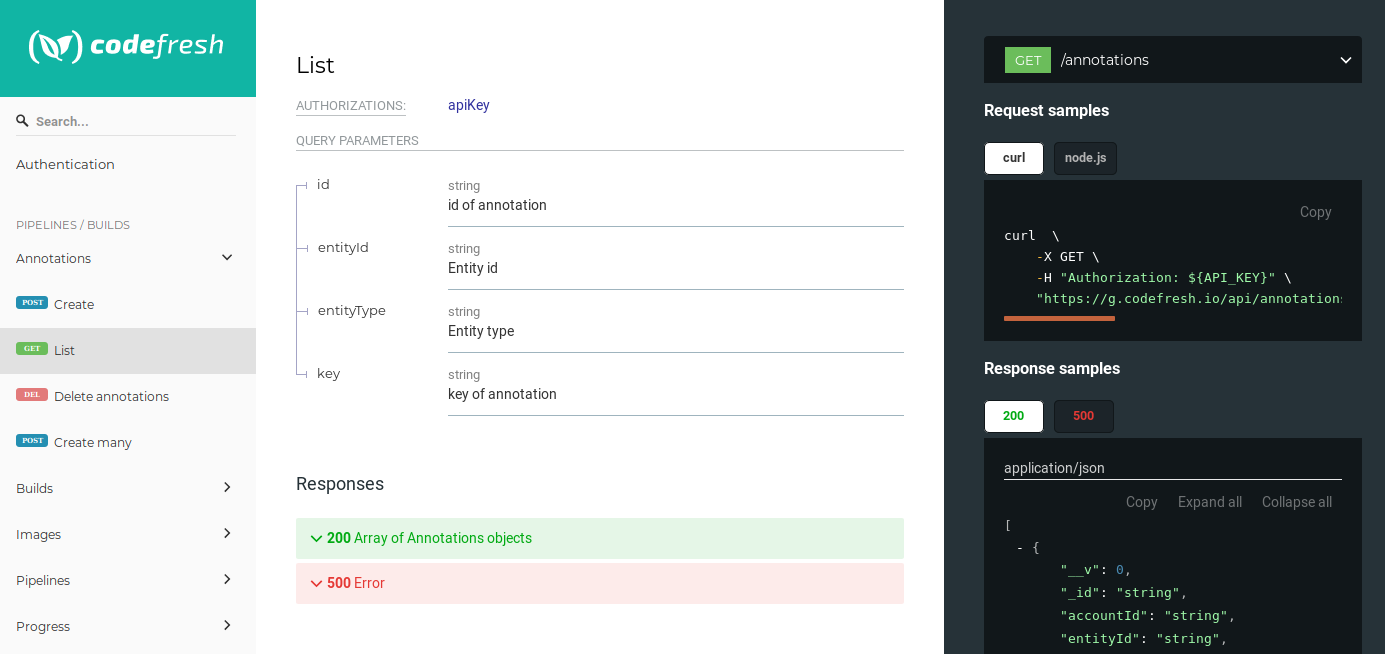

The API reference at https://g.codefresh.io/api/ also includes a curl example for each call.

Authentication instructions

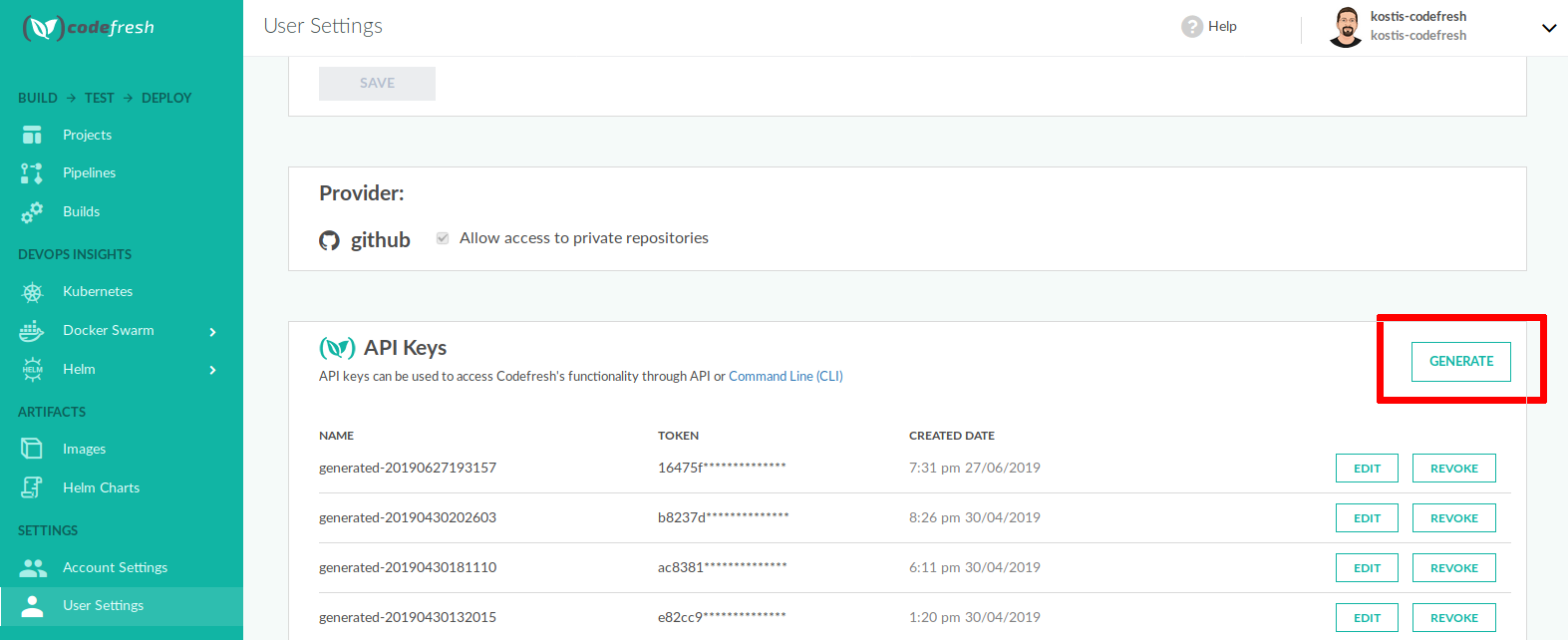

- Log in to your Codefresh account, and from your avatar dropdown, select User Settings.

- Scroll down to API Keys.

- To create a new API key, click Generate, and do the following:

  - Key Name: Enter a name for the key, preferably one that will help you remember its purpose.

  - Scopes: Select the required access scopes.

  - Copy the token to your clipboard.

  - Click Create.

The token is tied to your Codefresh account and should be treated as sensitive information.

From the same screen you can also revoke keys if you don’t need them anymore.
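In scripts, you can avoid pasting the key into every command. The following is a minimal sketch, assuming the key is exported in an environment variable named CF_API_KEY and the base URL in an optional CF_API_URL (both names chosen for this example); the helpers cf_auth_header and cf_api are hypothetical. It sends the key in the Authorization header, as in the curl examples in this article.

```shell
#!/usr/bin/env bash
# Sketch: call the Codefresh API with the key taken from the environment.
# Assumption: the key is exported as CF_API_KEY (hypothetical variable name).
# CF_API_URL is also a hypothetical name; it defaults to the public API URL.
# cf_auth_header and cf_api are hypothetical helper names.
CF_API_URL="${CF_API_URL:-https://g.codefresh.io/api}"

# Print the header used in the curl examples: "Authorization: <key>".
cf_auth_header() {
  if [ -z "${CF_API_KEY:-}" ]; then
    echo "CF_API_KEY is not set" >&2
    return 1
  fi
  printf 'Authorization: %s\n' "$CF_API_KEY"
}

# Usage: cf_api METHOD PATH [JSON_BODY]
# Fails before calling curl if no key is available.
cf_api() {
  local method="$1" path="$2" body="${3:-}" header
  header="$(cf_auth_header)" || return 1
  if [ -n "$body" ]; then
    curl -sS --fail -X "$method" \
      -H "$header" \
      -H 'Content-Type: application/json; charset=utf-8' \
      --data-binary "$body" \
      "${CF_API_URL}${path}"
  else
    curl -sS --fail -X "$method" -H "$header" "${CF_API_URL}${path}"
  fi
}
```

For example, cf_api GET /builds/5b4f927dc70d080001536fe3 requests the same build as the build-status curl example later in this article.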

Access scopes

The following resources can be targeted with the API:

- Agent - Used for Codefresh Runner installation

- Audit - Read Audit logs

- Build - Get/change build status

- Cluster - Access control for Kubernetes clusters

- Environments-v2 - Read/Write Environment Dashboard information

- GitHub Actions - Run GitHub Actions inside Codefresh pipelines

- Pipeline - Access control for pipelines

- Repos - Refers to Git repositories

- Step Type - Refers to custom pipeline steps

The scopes available for each resource differ according to the type of resource.

Using the API Key with the Codefresh CLI

Once you have the key, use it in the Codefresh CLI:

```shell
codefresh auth create-context <context-name> --api-key <your_key_here>
```

Now the Codefresh CLI is fully authenticated. The key is stored in ~/.cfconfig, so you only need to run this command once. The CLI can also work with multiple authentication contexts, so you can manage multiple Codefresh accounts at the same time.
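On CI agents or in provisioning scripts, you may want to run the same command without typing the key. A minimal sketch, assuming the key is available in an environment variable named CF_API_KEY (a hypothetical name); cf_cli_login is a hypothetical wrapper around the create-context command shown above. Note that the key still appears in the process arguments while the command runs.

```shell
#!/usr/bin/env bash
# Sketch: create a CLI authentication context without typing the key.
# Assumption: the key is provided in CF_API_KEY (hypothetical variable name);
# cf_cli_login is a hypothetical wrapper.
# Usage: cf_cli_login CONTEXT_NAME
cf_cli_login() {
  local context_name="$1"
  if [ -z "${CF_API_KEY:-}" ]; then
    echo "CF_API_KEY is not set" >&2
    return 1
  fi
  if ! command -v codefresh >/dev/null 2>&1; then
    echo "codefresh CLI not found in PATH" >&2
    return 127
  fi
  # Same command as above, with the key read from the environment so it
  # is not typed interactively or stored in shell history.
  codefresh auth create-context "$context_name" --api-key "$CF_API_KEY"
}
```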

Example: Triggering pipelines

You can trigger any pipeline in Codefresh and also pass extra environment variables, even if they are not declared in the UI.
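When a script triggers builds through the API instead of the CLI, the request body is a small JSON document with the fields used in the curl examples in this section: serviceId (the pipeline ID), type, repoOwner, repoName, branch, and an optional variables object. The sketch below assembles that body from shell arguments. cf_build_body and json_escape are hypothetical helpers, and the escaping only handles backslashes and double quotes in single-line values.

```shell
#!/usr/bin/env bash
# Sketch: build the JSON body for triggering a build through the API.
# Field names follow the curl examples in this section; cf_build_body and
# json_escape are hypothetical helpers.

# Escape backslashes and double quotes (single-line values only).
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Usage: cf_build_body PIPELINE_ID REPO_OWNER REPO_NAME BRANCH [NAME=VALUE ...]
cf_build_body() {
  local pipeline_id="$1" owner="$2" repo="$3" branch="$4"
  local pair name value vars=""
  shift 4
  for pair in "$@"; do
    name="${pair%%=*}"    # text before the first "="
    value="${pair#*=}"    # text after the first "="
    if [ -n "$vars" ]; then
      vars="$vars,"
    fi
    vars="$vars\"$(json_escape "$name")\":\"$(json_escape "$value")\""
  done
  # Only add the variables object when at least one NAME=VALUE pair was given.
  if [ -n "$vars" ]; then
    vars=",\"variables\":{$vars}"
  fi
  printf '{"serviceId":"%s","type":"build","repoOwner":"%s","branch":"%s","repoName":"%s"%s}\n' \
    "$(json_escape "$pipeline_id")" "$(json_escape "$owner")" \
    "$(json_escape "$branch")" "$(json_escape "$repo")" "$vars"
}

# Usage with curl (CF_API_KEY is a hypothetical variable holding your key):
# curl 'https://g.codefresh.io/api/builds/<pipeline-id>' \
#   -H 'content-type:application/json; charset=utf-8' \
#   -H "Authorization: $CF_API_KEY" \
#   --data-binary "$(cf_build_body <pipeline-id> kostis-codefresh nestjs-example master FOO=bar)"
```

Omitting the variables object when no pairs are given keeps the body identical to the first curl example in this section.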

Triggering a pipeline via the Codefresh CLI:

```shell
codefresh run kostis-codefresh/nestjs-example/ci-build -b master -t nestjs-example-trigger-name
```

You can pass extra environment variables as well:

```shell
codefresh run kostis-codefresh/nestjs-example/ci-build -b master -t nestjs-example-trigger-name -v sample-var1=sample1 -v SAMPLE_VAR2=SAMPLE2
```

To trigger a pipeline through the API, you need its serviceId (the pipeline ID), which you can find in the UI:

```shell
curl 'https://g.codefresh.io/api/builds/5b1a78d1bdbf074c8a9b3458' \
  --compressed \
  -H 'content-type:application/json; charset=utf-8' \
  -H 'Authorization: <your_key_here>' \
  --data-binary '{"serviceId":"5b1a78d1bdbf074c8a9b3458","type":"build","repoOwner":"kostis-codefresh","branch":"master","repoName":"nestjs-example"}'
```

You can also pass extra environment variables in the variables object:

```shell
curl 'https://g.codefresh.io/api/builds/5b1a78d1bdbf074c8a9b3458' \
  --compressed \
  -H 'content-type:application/json; charset=utf-8' \
  -H 'Authorization: <your_key_here>' \
  --data-binary '{"serviceId":"5b1a78d1bdbf074c8a9b3458","type":"build","repoOwner":"kostis-codefresh","branch":"master","repoName":"nestjs-example","variables":{"sample-var1":"sample1","SAMPLE_VAR2":"SAMPLE2"}}'
```

Example: Getting status from builds

You can get the status of a build from the CLI by using the build ID:

```shell
codefresh get builds 5b4f927dc70d080001536fe3
```

The same with the API:

```shell
curl -X GET \
  --header "Accept: application/json" \
  --header "Authorization: <your_key_here>" \
  "https://g.codefresh.io/api/builds/5b4f927dc70d080001536fe3"
```

Example: Creating Codefresh pipelines externally

Codefresh has a great UI for creating pipelines for each of your projects. If you prefer, you can also create pipelines programmatically from an external system. This allows you to use your own templating solution to reuse pipelines and create them from outside Codefresh.

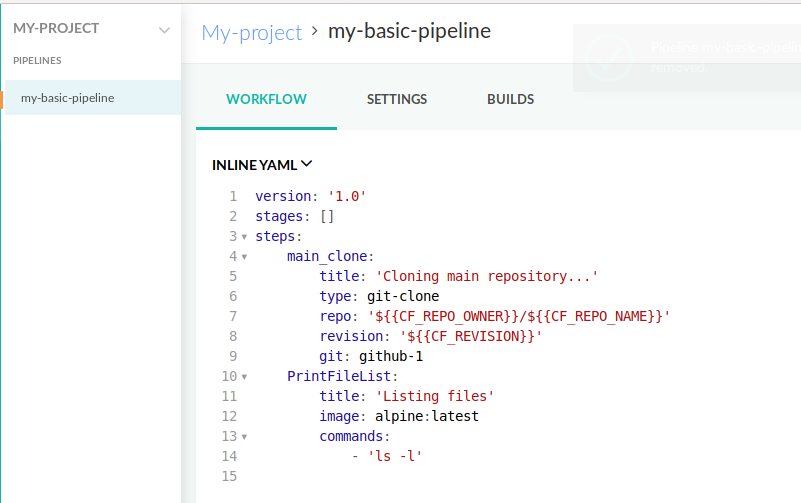

First, you need a YAML file that defines the pipeline, known as a pipeline specification.

TIP

An easy way to get started is to create a dummy pipeline in the Codefresh UI and then export its specification:

```shell
codefresh get pipeline my-project/my-pipeline -o yaml > my-pipeline-spec.yml
```

Here is an example:

Pipeline Spec

```yaml
version: '1.0'
kind: pipeline
metadata:
  name: my-project/my-basic-pipeline
  description: my description
  labels:
    key1: value1
    key2: value2
  deprecate:
    applicationPort: '8080'
  project: my-project
spec:
  triggers:
    - type: git
      provider: github
      name: my-trigger
      repo: kostis-codefresh/nestjs-example
      events:
        - push
      branchRegex: /./
  contexts: []
  variables:
    - key: PORT
      value: 5000
      encrypted: false
    - key: SECRET
      value: "secret-value"
      encrypted: true
  steps:
    main_clone:
      title: Cloning main repository...
      type: git-clone
      repo: '${{CF_REPO_OWNER}}/${{CF_REPO_NAME}}'
      revision: '${{CF_REVISION}}'
      git: github-1
    PrintFileList:
      title: Listing files
      image: 'alpine:latest'
      commands:
        - ls -l
  stages: []
```

Save this spec into a file with an arbitrary name, such as my-pipeline-spec.yml. First, create the new project (if it doesn’t already exist):

```shell
codefresh create project my-project
```

Then create the pipeline with the CLI:

```shell
codefresh create pipeline -f my-pipeline-spec.yml
```

Your pipeline is now available in the UI.

Note that you must prefix the pipeline name with the project name (for example, my-project/my-basic-pipeline) so that the pipeline appears in the UI under the correct project.
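Because the specification is plain YAML, any templating approach works. As a minimal sketch, the shell function below renders a trimmed-down version of the example spec above for a given project, pipeline, repository, and Git integration name, which you can then pass to codefresh create pipeline -f. The function name render_pipeline_spec, its parameters, and the generated trigger name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: render a pipeline spec from a template (hypothetical helper).
# Usage: render_pipeline_spec PROJECT PIPELINE REPO_OWNER/REPO_NAME GIT_INTEGRATION
render_pipeline_spec() {
  local project="$1" pipeline="$2" repo="$3" git_integration="$4"
  # The heredoc is unquoted so ${project} etc. are expanded; the Codefresh
  # variables are written as \${{...}} so the shell leaves them untouched.
  cat <<EOF
version: '1.0'
kind: pipeline
metadata:
  name: ${project}/${pipeline}
  project: ${project}
spec:
  triggers:
    - type: git
      provider: github
      name: ${pipeline}-trigger
      repo: ${repo}
      events:
        - push
      branchRegex: /./
  steps:
    main_clone:
      title: Cloning main repository...
      type: git-clone
      repo: '\${{CF_REPO_OWNER}}/\${{CF_REPO_NAME}}'
      revision: '\${{CF_REVISION}}'
      git: ${git_integration}
    PrintFileList:
      title: Listing files
      image: 'alpine:latest'
      commands:
        - ls -l
EOF
}

# Example (requires an authenticated Codefresh CLI; names are placeholders):
# for app in service-a service-b; do
#   render_pipeline_spec my-project "${app}-ci" "my-org/${app}" github-1 > "${app}.yml"
#   codefresh create pipeline -f "${app}.yml"
# done
```

The template always derives metadata.name from the project argument, so the project prefix described above cannot be forgotten.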

Example: Referencing shared configuration contexts

Use shared configuration contexts in your pipelines by referencing them in the pipeline’s YAML. This is an alternative to importing shared configuration contexts and their variables from the UI.

Add the spec.contexts field with the names of the shared configuration contexts to use.

IMPORTANT

Referencing shared configuration contexts with spec.contexts only works programmatically via the CLI/API.

The UI-based YAML options (inline YAML, Use YAML from Repository/URL) do not support this.

If you have a shared configuration named test-hello that includes the variable test=hello, you can add test-hello to the spec.contexts list in the pipeline YAML, and then reference the variable in the pipeline as you would any other variable, for example ${{test}}.

```yaml
version: "1.0"
kind: pipeline
metadata:
  name: default/test-shared-config-from-pipe-spec
spec:
  contexts:
    - test-hello
  steps:
    test:
      image: alpine
      commands:
        - echo ${{test}} # should output "hello"
```

Full pipeline specification

If you don’t want to create a pipeline from an existing one, you can also create your own YAML from scratch.

The following sections contain an explanation of the fields.

NOTE

Codefresh automatically generates additional fields, usually fields with dates and internal ID numbers. While you cannot edit these fields, you can view them by exporting the pipeline.

Top level fields

| Field name | Parent field | Type | Value |
|---|---|---|---|
| version | | string | Always '1.0' |
| kind | | string | Always pipeline |
| metadata | | object | Holds various meta-information |
| spec | | object | Holds the pipeline definition and other related information |

Metadata fields

| Field name | Parent field | Type | Value |
|---|---|---|---|
| name | metadata | string | The full pipeline name, formatted as project_name/pipeline_name |
| project | metadata | string | The project that contains this pipeline |
| originalYamlString | metadata | string | The full contents of the pipeline editor. Only kept for archival purposes |
| labels | metadata | object | Holds the tags array |
| tags | labels | array | A list of access control tags for this pipeline |
| description | metadata | string | Human-readable description of the pipeline |
| isPublic | metadata | boolean | If true, the pipeline logs will be public even for non-authenticated users |
| template | metadata | object | Holds isTemplate. If isTemplate is true, this pipeline is listed as a template when creating a new pipeline |
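The name field is expected in the format project_name/pipeline_name, and project names the project that contains the pipeline. A quick pre-flight check can catch a mismatch between the two before you create the pipeline. This is a sketch; check_pipeline_name is a hypothetical helper, and it assumes that neither the project name nor the pipeline name contains a slash.

```shell
#!/usr/bin/env bash
# Sketch: pre-flight check for metadata.name and metadata.project.
# check_pipeline_name is a hypothetical helper; it assumes neither the
# project name nor the pipeline name contains a slash.
# Usage: check_pipeline_name NAME PROJECT
check_pipeline_name() {
  local name="$1" project="$2"
  case "$name" in
    */*/* | /* | */ | "")
      echo "invalid pipeline name: '$name'" >&2
      return 1 ;;
    */*) ;;  # exactly one slash: project_name/pipeline_name
    *)
      echo "expected project_name/pipeline_name, got: '$name'" >&2
      return 1 ;;
  esac
  # ${name%%/*} is the part before the slash, i.e. the project name.
  if [ "${name%%/*}" != "$project" ]; then
    echo "name '$name' does not belong to project '$project'" >&2
    return 1
  fi
}
```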

Example of metadata:

Pipeline Spec

```yaml
version: '1.0'
kind: pipeline
metadata:
  name: project_name/pipeline_name
  project: project_name
  labels:
    tags:
      - tag1
      - tag2
  description: pipeline description here
  isPublic: false
  template:
    isTemplate: false
```

Spec fields

| Field name | Parent field | Type | Value |
|---|---|---|---|
| steps | spec | object | The pipeline steps to be executed |
| stages | spec | array | The pipeline stages, for a better visual overview |
| variables | spec | array | List of variables defined in the pipeline itself |
| contexts | spec | array | Variable sets imported from shared configuration |
| runtimeEnvironment | spec | object | Where to execute this pipeline |
| terminationPolicy | spec | array | Termination settings of this pipeline |
| concurrency | spec | number | How many instances of this pipeline can run at the same time |
| triggerConcurrency | spec | number | How many instances of this pipeline can run at the same time per trigger |
| branchConcurrency | spec | number | How many instances of this pipeline can run at the same time per branch |
| externalResources | spec | array | Optional external files available to this pipeline |
| triggers | spec | array | A list of Git triggers that affect this pipeline |
| options | spec | object | Extra options for the pipeline |
| enableNotifications | options | boolean | If false, the pipeline will not send notifications to Slack or status updates back to the Git provider |

Pipeline variables

The variables array has entries with the following fields:

| Field name | Parent field | Type | Value |
|---|---|---|---|
| key | variables | string | Name of the variable |
| value | variables | string | Raw value |
| encrypted | variables | boolean | If true, the value is stored encrypted |

Example of variables:

Pipeline Spec

```yaml
variables:
  - key: my-key
    value: my-value
    encrypted: false
  - key: my-second-variable
    value: '*****'
    encrypted: true
```

Encrypted variables cannot be read back by exporting the pipeline.
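If you generate specs from another system, you may want to produce the variables list from simple NAME=VALUE pairs. A minimal sketch; variables_yaml is a hypothetical helper that writes every entry with encrypted: false (mark secrets as encrypted yourself) and prints the list at column 0, so indent it when nesting it under spec.

```shell
#!/usr/bin/env bash
# Sketch: print a variables list for a pipeline spec from NAME=VALUE arguments.
# variables_yaml is a hypothetical helper. Every entry is written with
# encrypted: false; mark secrets as encrypted by hand.
# Values are single-quoted so YAML keeps them as strings.
variables_yaml() {
  local pair key value
  echo "variables:"
  for pair in "$@"; do
    key="${pair%%=*}"
    # Inside single-quoted YAML scalars, a literal ' is written as ''.
    value="$(printf '%s' "${pair#*=}" | sed "s/'/''/g")"
    printf "  - key: %s\n    value: '%s'\n    encrypted: false\n" "$key" "$value"
  done
}
```

For example, variables_yaml PORT=5000 produces an entry whose value is the string '5000', matching the string type given for value in the table above.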

Runtime environment

The runtimeEnvironment selects the cluster that will execute the pipeline (mostly useful for organizations using the Codefresh Runner).

| Field name | Parent field | Type | Value |
|---|---|---|---|
| name | runtimeEnvironment | string | Name of the environment as connected by the Codefresh Runner |
| cpu | runtimeEnvironment | string | CPU share using Kubernetes notation |
| memory | runtimeEnvironment | string | Memory share using Kubernetes notation |
| dindStorage | runtimeEnvironment | string | Storage size using Kubernetes notation |

Example of runtimeEnvironment:

Pipeline Spec

```yaml
runtimeEnvironment:
  name: my-aws-runner/cf
  cpu: 2000m
  memory: 800Mi
  dindStorage: nullGi
```

External resources

The externalResources field is an array of objects that hold external resource information.

| Field name | Parent field | Type | Value |
|---|---|---|---|
| type | externalResources | string | Only git is supported |
| source | externalResources | string | Source folder or file path in the Git repo |
| context | externalResources | string | Name of the Git provider to be used |
| destination | externalResources | string | Target folder or file path to copy to |
| isFolder | externalResources | boolean | If true, the path is a folder; otherwise, it is a single file |
| repo | externalResources | string | Git repository name, in the format git_repo_owner/git_repo_name |
| revision | externalResources | string | Branch name or Git hash to check out |

Pipeline Spec

```yaml
externalResources:
  - type: git
    source: /src/sample/venonalog.json
    context: my-github-integration
    destination: codefresh/volume/helm-sample-app/
    isFolder: false
    repo: codefresh-contrib/helm-sample-app
    revision: master
```

Git triggers

The triggers field is an array of objects that hold Git trigger information.

| Field name | Parent field | Type | Value |
|---|---|---|---|
| name | triggers | string | User-defined trigger name |
| type | triggers | string | Always git |
| repo | triggers | string | Git repository name for the trigger, in the format git_repo_owner/git_repo_name |
| events | triggers | array | All possible values are listed later. The possible values depend on the Git provider |
| pullRequestAllowForkEvents | triggers | boolean | If true, the trigger also applies to Git forks |
| commentRegex | triggers | string | Only activate the trigger if the regex matches the PR comment |
| branchRegex | triggers | string | Only activate the trigger if the regex or string matches the branch |
| branchRegexInput | triggers | string | The type of content in branchRegex. Possible values are regex, multiselect, multiselect-exclude |
| provider | triggers | string | Name of the provider as found in Git integrations |
| modifiedFilesGlob | triggers | string | Only activate the trigger if the changed files match the glob expression |
| disabled | triggers | boolean | If true, the trigger is never activated |
| options | triggers | object | Caching behavior of this pipeline |
| noCache | options | boolean | If true, Docker layer cache is disabled |
| noCfCache | options | boolean | If true, extra Codefresh caching is disabled |
| resetVolume | options | boolean | If true, all files on the volume are deleted before each execution |
| context | triggers | string | Name of the Git context to use |
| contexts | triggers | array | Variable sets imported from shared configuration |
| variables | triggers | array | Override variables defined at the pipeline level |
| runtimeEnvironment | triggers | object | Override the runtime environment defined at the pipeline level |

The possible values for the events array are the following (only those supported by your Git provider can actually be used):

- push.heads: push commit

- push.tags: push tag event

- pullrequest: any pull request event

- pullrequest.opened: pull request opened

- pullrequest.closed: pull request closed

- pullrequest.merged: pull request merged

- pullrequest.unmerged-closed: pull request closed (not merged)

- pullrequest.reopened: pull request reopened

- pullrequest.edited: pull request edited

- pullrequest.assigned: pull request assigned

- pullrequest.unassigned: pull request unassigned

- pullrequest.reviewRequested: pull request review requested

- pullrequest.reviewRequestRemoved: pull request review request removed

- pullrequest.labeled: pull request labeled

- pullrequest.unlabeled: pull request unlabeled

- pullrequest.synchronize: pull request synchronized

- pullrequest.commentAdded: pull request comment added (restricted)

- pullrequest.commentAddedUnrestricted: any pull request comment triggers a build. We recommend using it only on private repositories.

- release: Git release event

The variables and runtimeEnvironment fields have exactly the same format as the corresponding pipeline-level fields, but values defined in the trigger take priority.
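To check which branches a branchRegex such as /^((master|develop)$).*/gi would select (with branchRegexInput: regex), you can approximate the match locally. This sketch assumes Codefresh evaluates the value as a JavaScript-style /pattern/flags expression; it strips the slashes, honors only the i flag, and uses grep -E, whose POSIX extended regular expressions lack some JavaScript features (such as \d or lookarounds). branch_matches is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: approximate which branches a branchRegex of the form /pattern/flags
# selects. Assumption: Codefresh treats the value as a JavaScript-style regex;
# grep -E (POSIX ERE) is only an approximation. branch_matches is hypothetical.
# Only the "i" flag changes the result; "g" is irrelevant for a single match.
# Usage: branch_matches '/^((master|develop)$).*/gi' BRANCH
branch_matches() {
  local regex="$1" branch="$2" pattern flags
  pattern="${regex#/}"      # drop the leading slash
  flags="${pattern##*/}"    # text after the last slash, e.g. "gi"
  pattern="${pattern%/*}"   # text before the last slash
  case "$flags" in
    *i*) printf '%s\n' "$branch" | grep -Eiq -- "$pattern" ;;   # case-insensitive
    *)   printf '%s\n' "$branch" | grep -Eq -- "$pattern" ;;
  esac
}
```

With the regex from the example below, master and develop match, while master-fix does not because of the $ anchor.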

Full example:

Pipeline Spec

```yaml
triggers:
  - name: guysalton-codefresh/helm-sample-app
    type: git
    repo: guysalton-codefresh/helm-sample-app
    events:
      - push.heads
      - pullrequest.commentAdded
    pullRequestAllowForkEvents: true
    commentRegex: /.*/gi
    branchRegex: /^((master|develop)$).*/gi
    branchRegexInput: regex
    modifiedFilesGlob: /project1/**
    provider: github
    disabled: false
    options:
      noCache: false
      noCfCache: false
      resetVolume: false
    verified: true
    context: guyGithub
    contexts:
      - artifactory
    variables:
      - key: key
        value: '*****'
        encrypted: true
    runtimeEnvironment:
      name: docker-desktop/cf
      cpu: 400m
      memory: 800Mi
      dindStorage: nullGi
```

Cron triggers

The cronTriggers field is an array of objects that hold Cron trigger information.

| Field name | Parent field | Type | Value |
|---|---|---|---|
| event | cronTriggers | string | Leave empty. Automatically generated by Codefresh for internal use. |
| name | cronTriggers | string | The user-defined name for the Cron trigger. |
| message | cronTriggers | string | The free-text message sent as an additional event payload every time the Cron trigger is activated. For example, Successful ingress tests. |
| expression | cronTriggers | string | The Cron expression that defines the time and frequency of the Cron trigger. For example, 0 3 * * 1-5 triggers the pipeline at 3:00 AM every weekday (Monday to Friday). |
| disabled | cronTriggers | boolean | Determines whether the Cron trigger can be activated. Defaults to false, meaning that the trigger is enabled. To disable the trigger, set to true. |
| gitTriggerId | cronTriggers | string | The ID of the Git trigger to simulate for the pipeline, retrieved from the pipeline for which it is defined. To simulate a Git trigger, the pipeline must have at least one Git trigger defined for it. See Git triggers in this article. |
| branch | cronTriggers | string | Valid only when a Git trigger is simulated. The branch of the repo retrieved from the Git trigger defined in gitTriggerId. |
| variables | cronTriggers | array | The environment variables to populate for the pipeline when the Cron trigger is activated. See Variables in pipelines. |
| options | cronTriggers | object | The behavior override options to apply to the current build. By default, all overrides are set to false, meaning that the build inherits the default behavior set for the pipeline at the account level. For the available options, see the example below. |

Pipeline Spec example for Cron triggers

```yaml
...
cronTriggers:
  - name: SSO sync
    type: cron
    message: "Sync successful"
    expression: "0 0/1 * 1/1 * *"
    gitTriggerId: 64905de8589da959de81d31d
    branch: main
    variables: []
    options:
      noCfCache: false
      noCache: false
      resetVolume: false
      enableNotifications: true
    disabled: true
    event: cron:codefresh:0 0/1 * 1/1 * *:tests:ecef63b9ed20
    verified: true
    status: verified
    id: 64ddd8b04fdbcc74cc74fe80
...
```

Using Codefresh from within Codefresh

The Codefresh CLI is also packaged as a standalone Docker image (codefresh/cli), so you can easily use it from within Codefresh in a freestyle step.

For example, you can call pipeline B from pipeline A with the following step:

codefresh.yml of pipeline A

```yaml
version: '1.0'
steps:
  myTriggerStep:
    title: triggering another pipeline
    image: codefresh/cli
    commands:
      - 'codefresh run <pipeline_B> -b=${{CF_BRANCH}} -t <pipeline-b-trigger-name>'
    when:
      condition:
        all:
          validateTargetBranch: '"${{CF_PULL_REQUEST_TARGET}}" == "production"'
          validatePRAction: '''${{CF_PULL_REQUEST_ACTION}}'' == ''opened'''
```

This step calls pipeline B only when a pull request targeting the branch named production is opened.

Note that when you use the Codefresh CLI in a pipeline step, it is already configured, authenticated, and ready for use. No additional authentication is required.