This article is part of our series “Codefresh in the Wild” which shows how we picked public open-source projects and deployed them to Kubernetes with our own pipelines. The results are public at https://github.com/codefresh-contrib/example-pastr

We will use several tools, including GitHub, Docker, AWS, Codefresh, Argo CD, and Kustomize. This guide chronicles how we integrated them to build an end-to-end Kubernetes deployment workflow.

About Pastr

This week’s pick is Pastr, a super minimal URL shortener. Pastr stands out for its simplicity: it uses flat-file storage and has no dependencies. That makes it a good project for exploring CI/CD and GitOps practices in depth.

We began by forking Pastr into a public GitHub repository, “codefresh-contrib/example-pastr”. This repository became the central hub for all our work, including the Dockerfiles, CI/CD pipeline YAMLs, and manifests.

We also created a repository to store the Argo CD app manifests, called “codefresh-contrib/example-pastr-apps”.

Creating the CI Pipeline

The first step is always to create a Continuous Integration (CI) pipeline that takes the source code, runs several tests, and then creates a Docker image.

Using Codefresh, we built a comprehensive CI pipeline. Below we explain all the main points we took into account for its implementation.

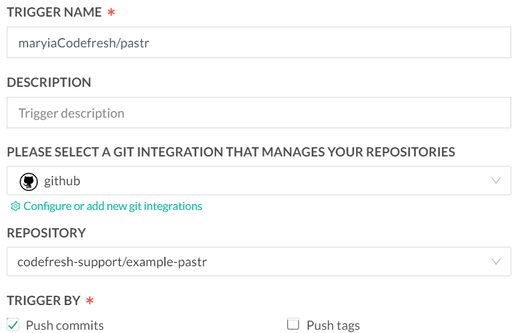

First, we want to trigger builds on each code change. In Codefresh, you can set up a pipeline that is triggered every time there is a commit to your repository. You do this by creating a Git trigger. Here is how our trigger is defined:

As you can see, we also added the “Modified files” field to prevent an endless cycle of running builds when we later show you how we change the Kubernetes manifests.
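
For readers who keep pipelines as code, the same trigger can be sketched in a pipeline specification. This is a minimal sketch rather than our actual definition: the field names follow the Codefresh pipeline spec as we understand it, and the trigger name, the `github` context name, the branch regex, and the `manifests/` directory are assumptions to adapt to your own setup.

```yaml
# Sketch of a Git trigger in a Codefresh pipeline spec (values are illustrative).
spec:
  triggers:
    - name: on-push                          # hypothetical trigger name
      type: git
      provider: github
      context: github                        # assumed name of the Git integration
      repo: codefresh-contrib/example-pastr
      events:
        - push.heads                         # fire on commits pushed to branches
      branchRegex: /.*/gi                    # assumed: build every branch
      # "Modified files": intended to ignore commits that only touch the
      # Kubernetes manifests, so the pipeline's own manifest commits do not
      # start another build. The manifests/ directory is an assumed location.
      modifiedFilesGlob: "!manifests/**"
```

The important part is the last field: without it, every manifest commit made by the pipeline would count as a code change and trigger a new build.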

This concludes the basic on-demand build settings.

Scheduling nightly builds for security updates

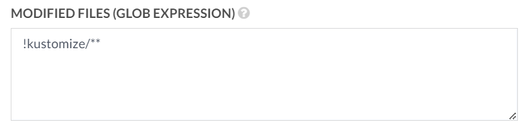

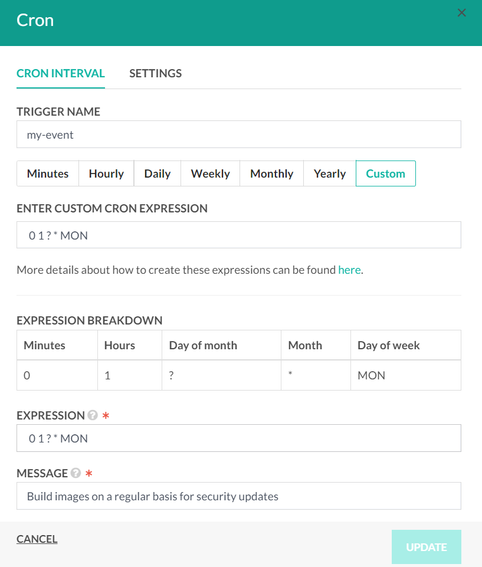

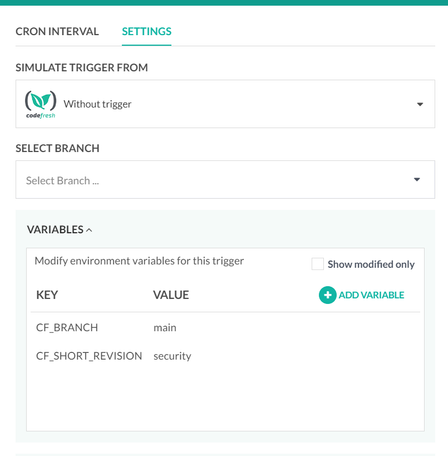

Next we want to schedule periodic builds to check for security updates. Codefresh allows you to schedule pipelines to run at regular intervals using Cron triggers. Here’s how we set a cron trigger:

The trigger we created runs the build every Monday at 01:00 AM.

We also added specific variables for our Cron trigger so it would build an image with a specific “security” tag.
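
As a rough illustration, a Monday 01:00 schedule with a dedicated variable might look like the sketch below. The `cronTriggers` field names are our best understanding of the pipeline spec, and the trigger name, message, and `IMAGE_TAG` variable are hypothetical. The expression uses standard five-field cron syntax; some schedulers expect a leading seconds field, so confirm the format your Codefresh account expects.

```yaml
# Sketch of a cron trigger (field names assumed; values are illustrative).
spec:
  cronTriggers:
    - name: weekly-security-build   # hypothetical trigger name
      type: cron
      message: security-rebuild     # hypothetical message attached to each run
      # Fields: minute hour day-of-month month day-of-week
      # "0 1 * * 1" means minute 0, hour 1, any day of month, any month, Monday.
      expression: "0 1 * * 1"
      variables:
        - key: IMAGE_TAG            # hypothetical variable read by the build step
          value: security
```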

Detection and Alerting of Build Errors

In real life, pipelines and deployments are not always successful, so we also want Codefresh to send notifications (emails, Slack messages, and so on) when a build fails. Codefresh natively supports the most popular notification channels.

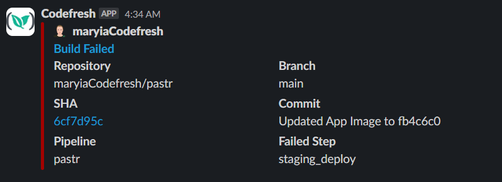

Here is how it looks in Slack:

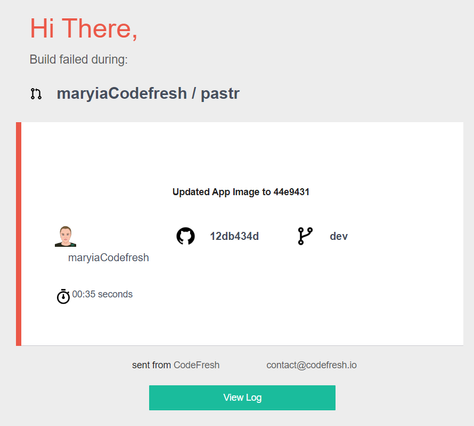

And here is how an email notification looks:

This way developers are instantly notified when things go wrong.

Essential Continuous Integration steps

We want to run unit tests for our application. These tests must be run in the pipeline before the build process. If a test fails, the pipeline can be configured to stop, preventing faulty code from moving further down the pipeline. We already set up alerts for any build errors, ensuring immediate feedback to the development team.

Our Dockerfile was already set up to build the Pastr application. In Codefresh, you use the “build” step in your pipeline to build the Docker image and push it to a Docker registry such as Docker Hub.
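
The test-then-build order can be sketched as a small Codefresh pipeline. Treat it as an illustration under stated assumptions, not the pipeline from our repository: the test image, the `run-tests.sh` script, the image name, and the `github` and `dockerhub` integration names are all placeholders.

```yaml
version: "1.0"
stages:
  - clone
  - test
  - build
steps:
  clone:
    title: Clone repository
    type: git-clone
    stage: clone
    repo: codefresh-contrib/example-pastr
    revision: ${{CF_BRANCH}}
    git: github                      # assumed name of the Git integration
  unit_tests:
    title: Run unit tests
    type: freestyle
    stage: test
    working_directory: ${{clone}}
    image: alpine:3.19               # placeholder; use an image with your toolchain
    commands:
      - ./run-tests.sh               # hypothetical test entrypoint
  build_image:
    title: Build and push image
    type: build
    stage: build
    working_directory: ${{clone}}
    image_name: codefresh-contrib/example-pastr   # assumed image name
    tag: ${{CF_SHORT_REVISION}}
    dockerfile: Dockerfile
    registry: dockerhub              # assumed name of the registry integration
```

Because a failing step stops the pipeline by default, a failed test in `unit_tests` means `build_image` never runs.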

Kubernetes Deployment

If you’re deploying to Kubernetes like we are, you probably have deployment manifests that need updating with the new Docker image version. You can automate this in Codefresh by adding steps to the pipeline.

For complex workflows, we can trigger child pipelines from a main pipeline. This is useful for multi-stage deployments, multi-environment setups, or when different teams are responsible for different parts of the deployment process.
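
One way to start a child pipeline is the `codefresh-run` step. The sketch below assumes a child pipeline named `example-pastr/deploy` and a variable called `IMAGE_TAG`; both names are hypothetical.

```yaml
version: "1.0"
steps:
  run_deploy_pipeline:
    title: Run child pipeline
    type: codefresh-run
    arguments:
      PIPELINE_ID: example-pastr/deploy      # hypothetical project/pipeline name
      BRANCH: ${{CF_BRANCH}}
      VARIABLE:
        - IMAGE_TAG=${{CF_SHORT_REVISION}}   # hypothetical variable passed to the child
```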

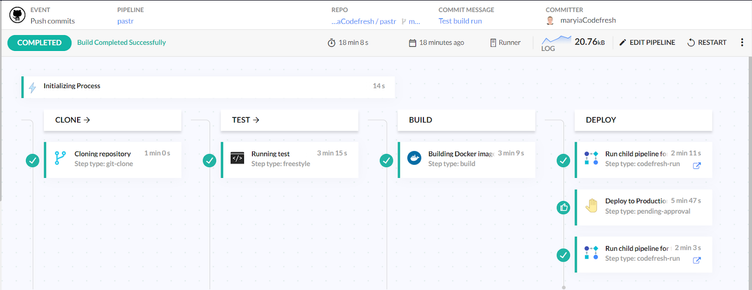

To ensure all of the above works together, we built a Codefresh pipeline.

- There is a “git-clone” step at the very beginning of the pipeline, which clones our repository in a shared volume.

- Then come the tests, followed by the build and push of the image.

- After that, the pipeline runs a step that changes the image tag for the staging environment and commits the updated manifest to the repository.

- Next, we have an “approval” step that pauses the execution of the build for 3 hours and waits for input from the user. Upon confirmation, the build will continue, and the tag for the production environment will be updated; if rejected, the build will finish its execution.
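
The last two bullets can be sketched as follows. This is a fragment under assumptions, not a copy of our pipeline: it presumes an earlier git-clone step named `clone`, a helper image that contains both `kustomize` and `git`, push credentials already configured for the clone, and Kustomize overlays under `kustomize/overlays/`. The image names, paths, and the timeout's final state are hypothetical.

```yaml
version: "1.0"
steps:
  update_staging:
    title: Set image tag for staging
    type: freestyle
    working_directory: ${{clone}}
    image: my-registry/kustomize-git:latest   # hypothetical image with kustomize and git
    commands:
      # Rewrite the image tag in the staging overlay, then commit and push it.
      - cd kustomize/overlays/staging && kustomize edit set image pastr=codefresh-contrib/example-pastr:${{CF_SHORT_REVISION}}
      - git commit -am "staging: deploy ${{CF_SHORT_REVISION}}"
      - git push
  wait_for_approval:
    title: Promote to production?
    type: pending-approval
    timeout:
      duration: 3
      timeUnit: hours
      finalState: denied                      # assumed outcome when nobody answers
  update_production:
    title: Set image tag for production
    type: freestyle
    working_directory: ${{clone}}
    image: my-registry/kustomize-git:latest   # hypothetical image with kustomize and git
    commands:
      - cd kustomize/overlays/production && kustomize edit set image pastr=codefresh-contrib/example-pastr:${{CF_SHORT_REVISION}}
      - git commit -am "production: deploy ${{CF_SHORT_REVISION}}"
      - git push
```

In this sketch a rejection at `wait_for_approval` ends the build, so `update_production` runs only after an explicit approval.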

We ended up with three YAML files for our pipelines. You can find them in the “.codefresh” directory of the repository. Don’t forget to set up your integrations correctly, following our guides, before running the pipeline:

- Git: Git provider pipeline integrations

- Docker: Docker registries for pipeline integrations

- K8s: Kubernetes pipeline integration

- AWS: Amazon Web Services (AWS) pipeline integration

Using Codefresh GitOps for deployments

Once the pipelines are ready, we can deploy the application using the GitOps features in Codefresh. The process was seamless, illustrating the power and efficiency of combining Codefresh and GitOps principles.

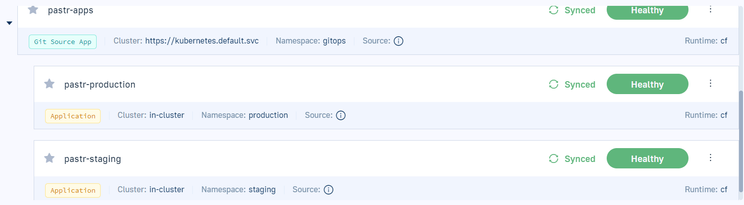

We created the Argo CD app manifests using the Codefresh UI; they’re stored in the “maryiaCodefresh/pastr-apps” repository. It takes only a few minutes. Try it out yourself!
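
For orientation, an Argo CD Application manifest for one environment generally has the shape below. The application name, source path, and target namespace are hypothetical, and the sync policy is one common choice rather than necessarily the one we used.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: pastr-staging                      # hypothetical application name
spec:
  project: default
  source:
    repoURL: https://github.com/codefresh-contrib/example-pastr.git
    targetRevision: HEAD
    path: kustomize/overlays/staging       # hypothetical path to the staging overlay
  destination:
    server: https://kubernetes.default.svc # the cluster Argo CD itself runs in
    namespace: pastr-staging               # hypothetical target namespace
  syncPolicy:
    automated:
      prune: true                          # delete resources removed from Git
      selfHeal: true                       # revert manual changes made in the cluster
```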

Following GitOps best practices, we kept configuration separate from application code and used separate repositories. Using Kustomize, we also split the environments into directories. The result was elegant and efficient, showcasing the benefits of trunk-based deployment with Kubernetes.
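
With Kustomize, each environment directory typically holds a small `kustomization.yaml` that points at a shared base and overrides only what differs. The sketch below assumes a `base/` directory next to an `overlays/staging/` directory; the paths, namespace, image name, and tag are illustrative.

```yaml
# overlays/staging/kustomization.yaml (hypothetical path)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base                 # shared Deployment and Service definitions
namespace: pastr-staging       # hypothetical namespace for this environment
images:
  - name: pastr                # image name as referenced in the base manifests (assumed)
    newName: codefresh-contrib/example-pastr
    newTag: "1.0.0"            # illustrative; the pipeline rewrites this value
```

A production overlay would look the same apart from its namespace and tag, which is what lets a single branch serve both environments.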

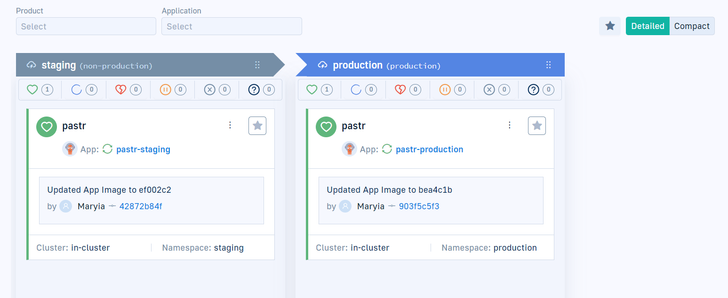

The GitOps Environments Dashboard

We would also like to highlight a useful Codefresh feature: the GitOps Environments Dashboard. It lets you track the status of your Argo CD applications, view the latest deployment and commit information, and compare environments.

While we currently manage only two environments and two app instances, imagine managing 100 or even 1000 environments. The dashboard provides a live overview of application statuses and deployment details, including who deployed what and when. It’s an invaluable tool for maintaining oversight of clusters and namespaces, especially as complexity grows.

Summary

By documenting this process and sharing our experiences, we hope to inspire and guide others in the tech community. This journey not only provided a robust deployment pipeline for “Pastr” but also a template that others can adapt to their projects, ensuring best practices in CI/CD and GitOps are more widely understood and utilized. Happy deploying!

Photo by Cara Fuller on Unsplash