What is Kubernetes Deployment Strategy?

To understand the concept of a Kubernetes Deployment strategy, let’s explain two possible meanings of “deployment” in a Kubernetes environment:

- A deployment is the process of installing a new version of an application or workload on Kubernetes pods.

- A Deployment, with a capital D, is a Kubernetes object that has its own YAML configuration and lets you define how a deployment should occur, what exactly should be deployed, and, together with a Service, how requests are routed to the newly installed application.

The deployment strategy is the way in which pods should be updated to a new version of the application. For example, one option is to delete all the pods and recreate them with the new version, which incurs downtime. There are more advanced options that can update the application gradually with limited service interruption.

The “holy grail” of Kubernetes deployments is known as progressive delivery. This includes strategies like blue/green deployment, in which two versions of the application are deployed side by side, and traffic is switched seamlessly to the new version. Progressive delivery is not built into Kubernetes, but you can configure it by customizing the Deployment object or using specialized deployment tools.

Learn how to define Kubernetes Deployment objects in our guide to Kubernetes Deployment YAML

Top Kubernetes Deployment Strategies

We’ll review several Kubernetes Deployment strategies. It is important to realize that only Recreate and Rolling deployments are supported by the Kubernetes Deployment object out of the box.

It is possible to perform blue/green or other types of deployments in Kubernetes, but this will require customization or specialized tooling.

1. Recreate Deployment

A recreate deployment strategy is an all-or-nothing process that lets you update an application immediately, with some downtime.

With this strategy, existing pods from the deployment are terminated and replaced with a new version. This means the application experiences downtime from the time the old version goes down until the new pods start successfully and start serving user requests.
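As a minimal sketch of how this looks in a Deployment object, the manifest below sets the strategy type to `Recreate`. The name `web`, the labels, and the image reference are hypothetical placeholders, not values from this guide.

```yaml
# Hypothetical Deployment; name, labels, and image are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  strategy:
    type: Recreate            # terminate all old pods before creating new ones
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: example.com/web:2.0.0   # changing the pod template triggers the update
```

With this setting, applying a change to the pod template removes the existing pods first and only then starts the new ones, which is where the downtime comes from.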

A recreate strategy is suitable for development environments, or when users prefer a short period of downtime rather than a prolonged period of reduced performance or errors (which might happen in a rolling deployment). The bigger the update, the more likely you are to experience errors in rolling updates.

Additional scenarios where a recreate deployment is appropriate:

- You have the ability to schedule planned maintenance during off times (for example, during the weekend for an application only accessed during business hours).

- For technical reasons it is impossible to have 2 versions of the same app running at the same time. A recreate deployment lets you shut down the previous version and only then start the new one.

2. Rolling Deployment

A rolling deployment gradually replaces the running instances of an application with a new version. Pods in the target environment are updated incrementally, in pre-specified batches. This means pods of the old and the new version run side by side behind the same Service until the rollout completes.
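The batch size can be tuned in the Deployment object. The following is a sketch under assumed values: the name, labels, image, replica count, and the specific `maxSurge` and `maxUnavailable` numbers are illustrative only (when omitted, Kubernetes defaults both to 25%).

```yaml
# Hypothetical Deployment; all names and numbers are illustrative.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 10
  strategy:
    type: RollingUpdate       # the default strategy for a Deployment
    rollingUpdate:
      maxSurge: 2             # at most 2 extra pods above the desired count
      maxUnavailable: 1       # at most 1 pod below the desired count
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: example.com/web:2.0.0
```

Smaller values make the rollout slower but keep more capacity available while it runs.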

The advantages of a rolling deployment are that it is relatively easy to roll back, is less risky than a recreate deployment, and is easier to implement.

The downsides are that it can be slow, and that a rollback is itself another gradual rollout rather than an instant switch. Also, multiple versions of your application run in parallel while the update is in progress. This can be problematic for legacy applications and might force you to use a recreate strategy.

3. Blue/Green Deployment (Red/Black Deployment)

A blue/green (or red/black) deployment strategy enables you to deploy a new version while avoiding downtime. Blue represents the current version of the application, while green represents the new version.

This strategy keeps only one version live at any given time. It involves routing traffic to a blue deployment while creating and testing a green deployment. After the testing phase is concluded, you start routing traffic to the new version. Then, you can keep the blue deployment for a future rollback or decommission it.
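One common way to approximate this with plain Kubernetes objects is to run two Deployments and point a single Service at one of them through a label. The sketch below assumes two hypothetical Deployments whose pods carry the labels `version: blue` and `version: green`; the Service name, labels, and ports are placeholders.

```yaml
# Hypothetical Service; assumes pods labeled app=web plus version=blue or version=green.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web
    version: blue             # change to "green" to cut over; back to "blue" to roll back
  ports:
    - port: 80
      targetPort: 8080
```

Because only the selector changes, the switch affects all new connections at once, and the blue pods stay available for a rollback until you remove them.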

A blue/green deployment eliminates downtime and reduces risk because you can immediately roll back to the previous version if something goes wrong while deploying the new version. It also helps avoid versioning issues because you change the entire application state in one deployment.

However, this strategy requires double resources for both deployments and can incur high costs. Furthermore, it requires a way to switch over traffic rapidly from blue to green version and back.

4. Canary Deployment

A canary deployment strategy enables you to test a new application version on a real user base without committing to a full rollout. It involves using a progressive delivery model that initiates a phased deployment. Canary deployment strategies encompass various deployment types, including A/B testing and dark launches.

Typically, a canary strategy gradually deploys a new application version to the Kubernetes cluster, testing it on a small amount of live traffic. It enables you to test a major upgrade or experimental feature on a subset of live users while providing all other users with the previous version.

A canary deployment requires two almost identical ReplicaSets: one serving all active users and another rolling out new features to a small subset of users. Once confidence in the new version increases, you can gradually roll it out to the entire infrastructure. The process ends when all live traffic goes to the canary version, which then becomes the new production version.
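Without extra tooling, a rough canary can be sketched with two Deployments that share one label, so that a Service selecting only `app: web` spreads requests across both in proportion to their pod counts. The names, the `track` label, the images, and the 9:1 ratio below are assumptions for illustration; this gives only approximate, replica-based traffic splitting.

```yaml
# Hypothetical stable Deployment: 9 of 10 pods run the current version.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-stable
spec:
  replicas: 9
  selector:
    matchLabels:
      app: web
      track: stable
  template:
    metadata:
      labels:
        app: web
        track: stable
    spec:
      containers:
        - name: web
          image: example.com/web:1.0.0
---
# Hypothetical canary Deployment: 1 of 10 pods runs the new version.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-canary
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
      track: canary
  template:
    metadata:
      labels:
        app: web
        track: canary
    spec:
      containers:
        - name: web
          image: example.com/web:2.0.0
```

Increasing the canary replica count while decreasing the stable one shifts more traffic to the new version. Precise percentage-based routing needs an ingress controller, service mesh, or dedicated deployment tool.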

Like in a blue/green deployment, there are two disadvantages: the application needs to be able to run multiple versions at the same time, and you need to have a smart traffic mechanism that can route a subset of requests to the new version.

Related content: Read our guide to Kubernetes canary deployments

5. A/B Testing

In the context of Kubernetes, A/B testing refers to canary deployments that distribute traffic to different versions of an application based on certain parameters. A typical canary deployment routes users based on traffic weights, whereas A/B testing can target specific users based on cookies, user agents, or other parameters.
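As one possible illustration of parameter-based routing, the sketch below uses the canary annotations of the ingress-nginx controller to send requests carrying a specific header to a second Service. It assumes ingress-nginx is installed and that a regular Ingress for the same host already routes to the current version; the host, header name, header value, and Service name `web-b` are hypothetical.

```yaml
# Hypothetical Ingress for variant B; assumes the ingress-nginx controller
# and an existing primary Ingress for app.example.com.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-variant-b
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "X-Variant"
    nginx.ingress.kubernetes.io/canary-by-header-value: "b"
spec:
  ingressClassName: nginx
  rules:
    - host: app.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web-b    # Service in front of the variant B pods
                port:
                  number: 80
```

Requests with the header `X-Variant: b` go to variant B, and all other requests continue to the primary Ingress backend. The same controller also offers a cookie-based annotation for targeting by cookie.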

An important application of A/B testing in Kubernetes is testing a few options of a new feature, identifying which one users prefer, and then rolling out that version to all users.

6. Shadow Deployment

Shadow deployments are another type of canary deployment in which you test a new release on production workloads. A shadow deployment mirrors incoming traffic to the new version while the current version continues to serve the responses, so end users do not notice the difference. When the stability and performance of the new version meet predefined requirements, operators trigger a full rollout.
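Kubernetes has no native object for this, so the sketch below assumes a service mesh, here Istio, and uses its traffic mirroring feature. The host names `web`, `web-v1`, and `web-v2` are hypothetical Services, and the 100% mirror rate is an illustrative choice.

```yaml
# Hypothetical Istio VirtualService; assumes Istio is installed and that
# web-v1 (current) and web-v2 (new) are existing Services.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: web
spec:
  hosts:
    - web
  http:
    - route:
        - destination:
            host: web-v1
          weight: 100          # users are served only by the current version
      mirror:
        host: web-v2           # a copy of each request is also sent here
      mirrorPercentage:
        value: 100.0           # share of requests to mirror
```

Responses from the mirrored destination are discarded, so the new version can be observed under production load without affecting users.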

The advantage of shadow deployments is that they can help test non-functional aspects of a new version, such as performance and stability.

The downside is that shadow deployments are complex to manage and require twice the resources to run compared to a standard deployment.

Learn more in our detailed guide to Kubernetes in production

Which Strategy to Choose?

The following table summarizes the deployment strategies and their main advantages, to help you choose the best strategy for your use case.

| Deployment Strategy | Available in K8s out of the box? | Pros |
|---|---|---|
| Recreate | Yes | Fast and consistent. |
| Rolling | Yes | Minimizes downtime, provides security guarantees. |
| Blue/Green | No | No downtime and low risk, easy to switch traffic back to the current working version in case of issues. |
| Canary | No | Seamless to users, makes it possible to evaluate a new version and get user inputs with low risk. |
| A/B Testing | No | Makes it possible to test multiple versions of a new deployment. |
| Shadow Deployment | No | Seamless to users, enables low-risk production testing of stability and performance issues. |

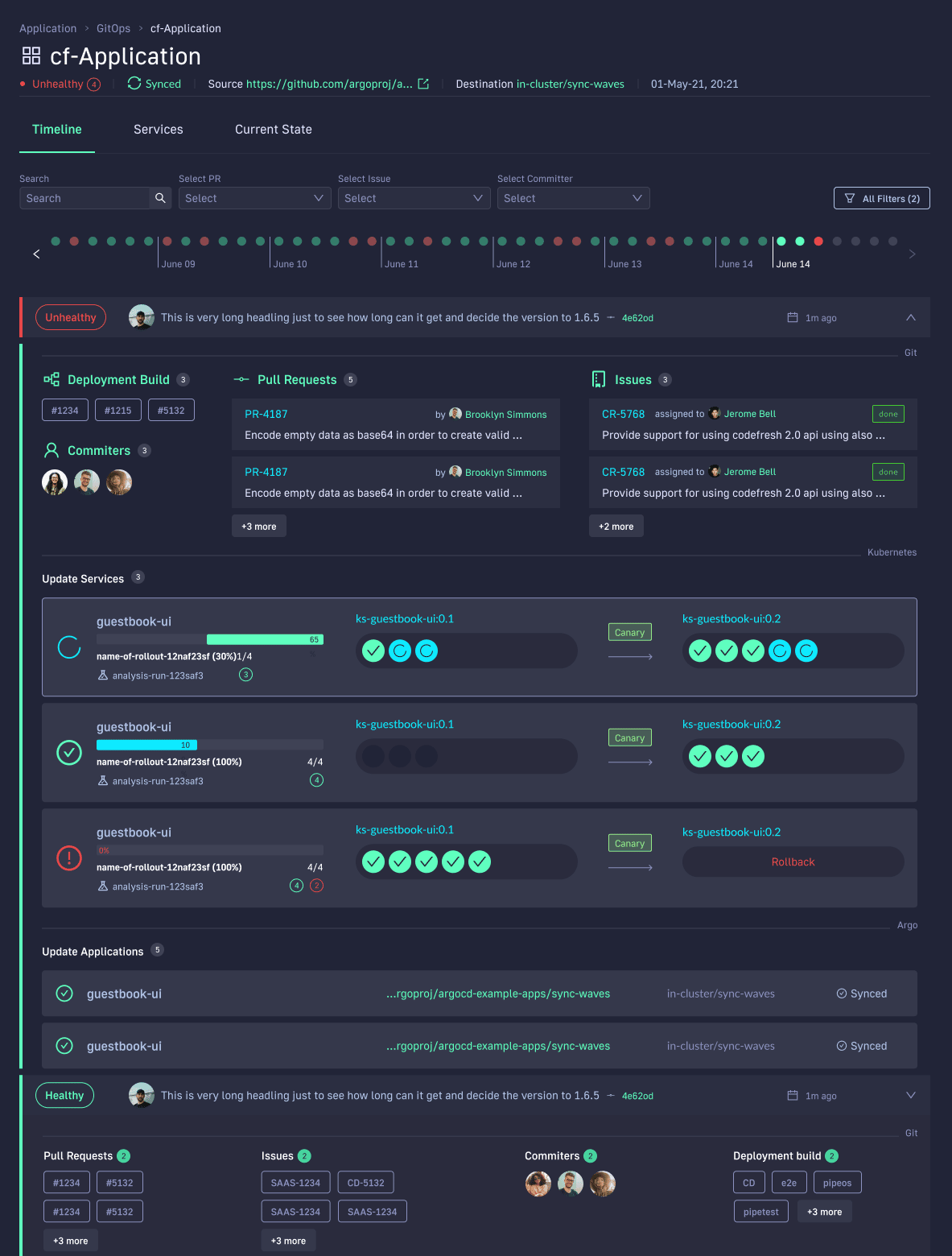

Kubernetes Deployment with Codefresh

Codefresh lets you answer many important questions within your organization, whether you’re a developer or a product manager. For example:

- What features are deployed right now in any of your environments?

- What features are waiting in Staging?

- What features were deployed last Thursday?

- Where is feature #53.6 in our environment chain?

What’s great is that you can answer all of these questions by viewing one single dashboard. Our applications dashboard shows:

- Services affected by each deployment

- The current state of Kubernetes components

- Deployment history, with a log of who deployed what and when, and the pull request or Jira ticket associated with each deployment

This lets your developers view and better understand your deployments, and it also lets the business answer important questions within the organization. For example, if you are a product manager, you can see whether a new feature has been deployed, when, and who deployed it.