What Is a GitLab CI/CD Pipeline?

GitLab is a collaborative DevOps platform and open source code repository for large software development projects.

GitLab provides an online code storage location, as well as CI/CD and issue tracking capabilities. GitLab repositories let you host multiple versions and development branches, so users can explore older code and roll back the software when unexpected issues arise.

GitLab pipelines are the basic building blocks of CI/CD: a pipeline is the top-level component for continuous integration and delivery/deployment. A pipeline includes the following components:

- Jobs: define the desired actions, such as compiling or testing code. A runner executes each job, and you can run multiple jobs in parallel if you have concurrent runners.

- Stages: determine when each job runs, for example a compile stage followed by a test stage.

A typical CI/CD pipeline in GitLab consists of the following stages, in this order:

- Build: includes a “compile” job.

- Testing: usually includes at least two jobs (e.g., Test 1 and Test 2).

- Staging: a preparatory stage that includes a “deployment to staging” job.

- Production: includes a “deployment to production” job.
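As a minimal sketch, a .gitlab-ci.yml for the four stages above might look like the following. The job names mirror the list, while the make targets and the deploy.sh script are hypothetical placeholders for your own build and deployment commands.

```yaml
# Stages run in the order listed; jobs in the same stage run concurrently.
stages:
  - build
  - test
  - staging
  - production

compile:
  stage: build
  script:
    - make build               # hypothetical build target

test1:
  stage: test
  script:
    - make test-unit           # hypothetical target; runs alongside test2

test2:
  stage: test
  script:
    - make test-integration    # hypothetical target

deploy-to-staging:
  stage: staging
  script:
    - ./deploy.sh staging      # hypothetical deployment script
  environment: staging

deploy-to-production:
  stage: production
  script:
    - ./deploy.sh production
  environment: production
  when: manual                 # wait for a person to start the production deploy
```

Making the production job manual is a design choice, not a requirement; remove `when: manual` if every successful pipeline should deploy automatically.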

This is part of a series of articles about GitLab CI.

Types of GitLab Pipelines

There are many ways to configure a GitLab pipeline.

Basic Pipeline

Basic pipelines are the simplest option in GitLab: all jobs in the build stage run concurrently, and once every build job finishes, the pipeline runs all the test jobs concurrently in the same way. This is not the most efficient type of pipeline, but it is relatively easy to maintain, although it can become complex if you include many steps.
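A basic pipeline of this kind can be sketched as follows. The component names (build_a, test_a, and so on) and the echo commands are placeholders. Both build jobs run at the same time, and neither test job starts until both build jobs have succeeded.

```yaml
stages:
  - build
  - test

# Both build jobs start together.
build_a:
  stage: build
  script:
    - echo "Building component A"

build_b:
  stage: build
  script:
    - echo "Building component B"

# Test jobs wait for the whole build stage, even though test_a only needs build_a.
test_a:
  stage: test
  script:
    - echo "Testing component A"

test_b:
  stage: test
  script:
    - echo "Testing component B"
```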

DAG Pipeline

A directed acyclic graph (DAG) lets you define explicit relationships between the jobs in a CI/CD pipeline. Each job can start as soon as the jobs it depends on finish, so the pipeline runs as fast as possible regardless of how its stages are configured.

For instance, your project might contain a separate tool or website that does not depend on the rest of the code. You can use a DAG to define the relationships between the jobs, and GitLab will start each job as soon as its dependencies finish rather than waiting for each entire stage to complete.

GitLab DAG pipelines differ from other CI/CD DAG solutions because you don’t have to choose between stage-based and DAG-based execution. GitLab supports hybrid pipelines that combine both in the same pipeline. Configuration stays simple: a single keyword, `needs`, enables DAG behavior for any job.
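A build/test pipeline can be turned into a DAG by adding `needs` to its jobs. In this sketch (placeholder job names and echo commands), test_a starts as soon as build_a finishes, even if build_b is still running. The stages are still declared, which is the hybrid model GitLab supports.

```yaml
stages:
  - build
  - test

build_a:
  stage: build
  script:
    - echo "Building component A"

build_b:
  stage: build
  script:
    - echo "Building component B"

test_a:
  stage: test
  needs: [build_a]   # start when build_a succeeds, without waiting for build_b
  script:
    - echo "Testing component A"

test_b:
  stage: test
  needs: [build_b]   # start when build_b succeeds, without waiting for build_a
  script:
    - echo "Testing component B"
```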

Branch, Merge Request, Merged Results, and Merge Train Pipelines

You can set a pipeline to start whenever you commit a new change to a branch—this pipeline type is known as a branch pipeline. Alternatively, you can configure your pipeline to run every time you make changes to the source branch for a merge request. This type of pipeline is called a merge request pipeline.

A branch pipeline runs when you push a new commit to a branch. It can access predefined variables and, when it runs on a protected branch, protected variables and protected runners. It is the default pipeline type.

A merge request pipeline runs when you create a new merge request, push new commits to a merge request’s source branch, or select “Run pipeline” on a merge request’s Pipelines tab. The third option only applies to merge request pipelines whose source branch has one or more commits.

Merge request pipelines can access many predefined variables, but not protected variables or protected runners. They are not the default pipeline type: you must explicitly configure each job in the CI/CD configuration file to run in merge request pipelines.
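A minimal sketch of that configuration, assuming you want merge request pipelines while a merge request is open and branch pipelines otherwise. The workflow rules follow the pattern GitLab documents for switching between the two pipeline types without running duplicate pipelines; the test script is a hypothetical placeholder.

```yaml
workflow:
  rules:
    # Create a merge request pipeline for merge request events.
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    # Skip the branch pipeline if the branch already has an open merge request.
    - if: $CI_COMMIT_BRANCH && $CI_OPEN_MERGE_REQUESTS
      when: never
    # Otherwise, create a normal branch pipeline.
    - if: $CI_COMMIT_BRANCH

unit-tests:
  stage: test
  script:
    - ./run-unit-tests.sh   # hypothetical test script
  rules:
    # Add this job to merge request pipelines...
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    # ...and to branch pipelines.
    - if: $CI_COMMIT_BRANCH
```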

There are three varieties of merge request pipelines:

- Standard merge request pipeline: runs on the changes in a merge request’s source branch, ignoring the target branch. Since GitLab 14.9, these pipelines display a “merge request” label indicating that they ran only on the source branch contents; GitLab 14.8 and older versions label them “detached” instead.

- Merged results pipeline: runs on the result of merging the source branch’s changes into the target branch.

- Merge train: runs when you queue multiple merge requests to merge in sequence. Each merge request’s changes are combined with the target branch and with the changes of the merge requests ahead of it in the train, to ensure everything works together.
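Merged results pipelines and merge trains are switched on in the project’s merge request settings rather than in YAML, but jobs still need rules that let them run in merge request pipelines. The predefined variable CI_MERGE_REQUEST_EVENT_TYPE (“detached”, “merged_result”, or “merge_train”) tells a job which variety it is running in. A sketch like the following, with a hypothetical end-to-end test script, could reserve an expensive suite for merge train pipelines only.

```yaml
e2e-tests:
  stage: test
  script:
    - ./run-e2e-tests.sh   # hypothetical, slow end-to-end suite
  rules:
    # Run only when the pipeline belongs to a merge train.
    - if: $CI_MERGE_REQUEST_EVENT_TYPE == "merge_train"
```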

Parent-Child Pipeline

With pipelines becoming more complex, several related issues can arise:

- A hierarchical stage structure is rigid and can slow down the process. The pipeline must complete all steps in one stage before the first action in the next stage can start, often resulting in jobs waiting for a long time.

- Building a global pipeline can be a long, complex task. The pipeline configuration becomes difficult to manage.

- Imports can add complexity to your configuration and cause namespace conflicts if you accidentally duplicate jobs.

- The pipeline user experience becomes unintuitive with too many stages and jobs to handle.

You may also want a more dynamic pipeline that lets you choose when to start a sub-pipeline. This capability is especially useful with dynamically generated YAML.

A parent-child pipeline can trigger multiple child pipelines from one parent pipeline—all the sub-pipelines run in one project and use the same SHA. It is a popular pipeline architecture for mono-repositories. Sub-pipelines work well with other CI/CD and GitLab features.
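A sketch of a parent configuration for a monorepo, assuming a hypothetical frontend/ directory with its own child configuration file and a hypothetical generate-ci.sh script that writes YAML. The first trigger job starts a static child pipeline only when files under frontend/ change. The second pair of jobs shows the dynamic case: one job generates the child configuration as an artifact, and a trigger job runs it. `strategy: depend` makes each trigger job reflect the status of its child pipeline.

```yaml
# Parent .gitlab-ci.yml
trigger-frontend:
  stage: build
  trigger:
    include: frontend/.gitlab-ci.yml   # hypothetical child config in the same repo
    strategy: depend                   # trigger job mirrors the child pipeline status
  rules:
    - changes:
        - frontend/**/*                # only when frontend files change

generate-config:
  stage: build
  script:
    - ./scripts/generate-ci.sh > generated-config.yml   # hypothetical generator
  artifacts:
    paths:
      - generated-config.yml

run-generated-pipeline:
  stage: test
  trigger:
    include:
      - artifact: generated-config.yml   # YAML produced by the job below
        job: generate-config
    strategy: depend
```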

Multi-Project Pipeline

GitLab CI/CD supports multi-project pipelines, allowing a pipeline in one project to trigger downstream pipelines in another. GitLab lets you visualize your entire pipeline in a single place, including all interdependencies between projects.

For instance, you can deploy a web application from separate GitLab projects, with building, testing, and deployment processes for each project. A multi-project pipeline allows you to visualize all these stages from all projects. It is also useful for large-scale products with interdependencies between projects.
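A trigger job in the upstream project is enough to start a downstream pipeline. In this sketch, the project path my-group/deployment-project is hypothetical. Job-level variables on a trigger job are passed to the downstream pipeline, and `strategy: depend` makes the upstream job wait for, and mirror, the downstream result.

```yaml
trigger-deploy:
  stage: deploy
  variables:
    UPSTREAM_SHA: $CI_COMMIT_SHA          # forwarded to the downstream pipeline
  trigger:
    project: my-group/deployment-project  # hypothetical downstream project
    branch: main
    strategy: depend                      # upstream job reflects downstream status
```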

How to Optimize GitLab CI/CD Pipelines

Here are some practices to help optimize your CI/CD pipelines in GitLab.

Identify Common Errors and Bottlenecks

Check the runtimes of jobs and stages to identify pipeline inefficiencies. A pipeline’s total duration depends on the repository size, number of jobs and stages, dependencies, and availability of resources and runners. Other factors include slow connections and latency.

If a pipeline fails frequently, look for common patterns in failed jobs, such as randomly failing unit tests, insufficient test coverage, and failed tests that slow down feedback.
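Publishing test results as JUnit reports makes failed tests visible in the merge request and pipeline views, which helps when looking for patterns such as flaky tests. A minimal sketch, assuming a Python project tested with pytest; any test runner that emits JUnit XML works the same way.

```yaml
unit-tests:
  stage: test
  script:
    - pytest --junitxml=report.xml   # assumes pytest is available in the job image
  artifacts:
    when: always                     # upload the report even when tests fail
    reports:
      junit: report.xml
```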

Leverage Pipeline Information

Use GitLab’s pipeline duration and success charts to see information about failed jobs and pipeline runtimes. A pipeline that includes code quality, unit, and integration test jobs can detect issues automatically. Accelerate your pipeline by running tests in parallel within the same stage (this requires more concurrent runners).
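A sketch of splitting a test suite across concurrent jobs with the `parallel` keyword. GitLab creates four copies of the job and sets CI_NODE_INDEX (1 to 4) and CI_NODE_TOTAL (4) in each. The run-tests.sh script and its --shard and --total flags are hypothetical, standing in for whatever sharding option your test runner provides.

```yaml
tests:
  stage: test
  parallel: 4   # four copies of this job, each needing a free runner
  script:
    # Each copy runs only its share of the suite (hypothetical script and flags).
    - ./run-tests.sh --shard "$CI_NODE_INDEX" --total "$CI_NODE_TOTAL"
```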

Identify Failures Quickly

Detect errors early in the CI/CD pipeline by running faster jobs first to enable fail-fast testing. By default, later stages don’t run if a job fails, so earlier failures save time and resources, while long-running jobs take longer to report a failure.
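One way to apply this is to put the fastest checks in the earliest stage. In this sketch the three scripts are hypothetical. Because jobs in later stages run only when all earlier stages succeed (the default `when: on_success`), a failing lint job stops the pipeline before the slower suites start.

```yaml
stages:
  - lint
  - test
  - e2e

lint:
  stage: lint
  script:
    - ./scripts/lint.sh         # hypothetical: fast static checks

unit-tests:
  stage: test
  script:
    - ./scripts/unit-tests.sh   # hypothetical: runs only if lint passed

e2e-tests:
  stage: e2e
  script:
    - ./scripts/e2e-tests.sh    # hypothetical: slowest suite, runs last
```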

Test and Document Pipelines

Use an iterative approach to improving your pipelines by monitoring the impact of each change. Document the pipeline architecture using Mermaid charts in your GitLab repo. Record issues and solutions to identify recurring inefficiencies and help onboard new team members.


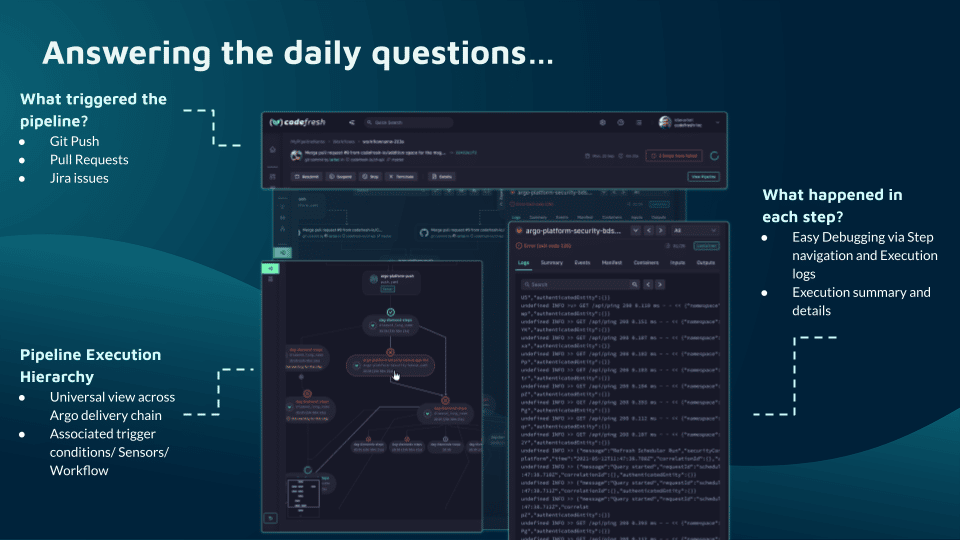

Codefresh: A Modern Alternative to GitLab CI/CD

You can’t get to continuous delivery or deployment without first solving continuous integration. Codefresh automatically creates a Delivery Pipeline, which is a workflow along with the events that trigger it. We’ve added a pipeline creation wizard that will create all the component configurations so you can spend less time with YAML and more time getting work done.

At the end of the pipeline creation wizard, Codefresh commits the configuration to Git and lets its built-in Argo CD instance deploy it to Kubernetes.

The Delivery pipeline model also allows the creation of a single reusable pipeline that lets DevOps teams build once and use everywhere. Each step in a workflow operates in its own container and pod. This allows pipelines to take advantage of the distributed architecture of Kubernetes to easily scale both on the number of running workflows and within each workflow itself.

Teams that adopt Codefresh deploy more often, with greater confidence, and are able to resolve issues in production much more quickly. This is because we unlock the full potential of Argo to create a single cohesive software supply chain. For users of traditional CI/CD tooling, the fresh approach to software delivery is dramatically easier to adopt, more scalable, and much easier to manage with the unique hybrid model.
