Disclaimer: all code snippets below require Docker 1.13+

TL;DR

Docker 1.13 simplifies deployment of composed applications to a swarm (mode) cluster. You can do it without creating a new dab (Distributed Application Bundle) file, using just the familiar docker-compose.yml syntax (with some additions) and the --compose-file option. Read about this exciting feature, create a free Codefresh account, and try building, testing and deploying images instantly.

Swarm cluster

Docker Engine 1.12 introduced swarm mode for natively managing a cluster of Docker Engines, called a swarm. Swarm mode implements the Raft consensus algorithm, so it no longer requires an external key-value store such as Consul or etcd.

If you want to run a swarm cluster on a developer’s machine, there are several options.

The first and most widely known option is to use the docker-machine tool with a virtualization driver (VirtualBox, Parallels or other).

But in this post I will use another approach: running the docker-in-docker Docker image with Docker for Mac. See more details in my Docker Swarm cluster with docker-in-docker on MacOS post.

Docker Registry mirror

When you deploy a new service on a local swarm cluster, I recommend setting up a local Docker registry mirror and running all swarm nodes with the --registry-mirror option pointing to it. A local mirror keeps most of the redundant image fetch traffic on your local network and speeds up service deployment.

Docker Swarm cluster bootstrap script

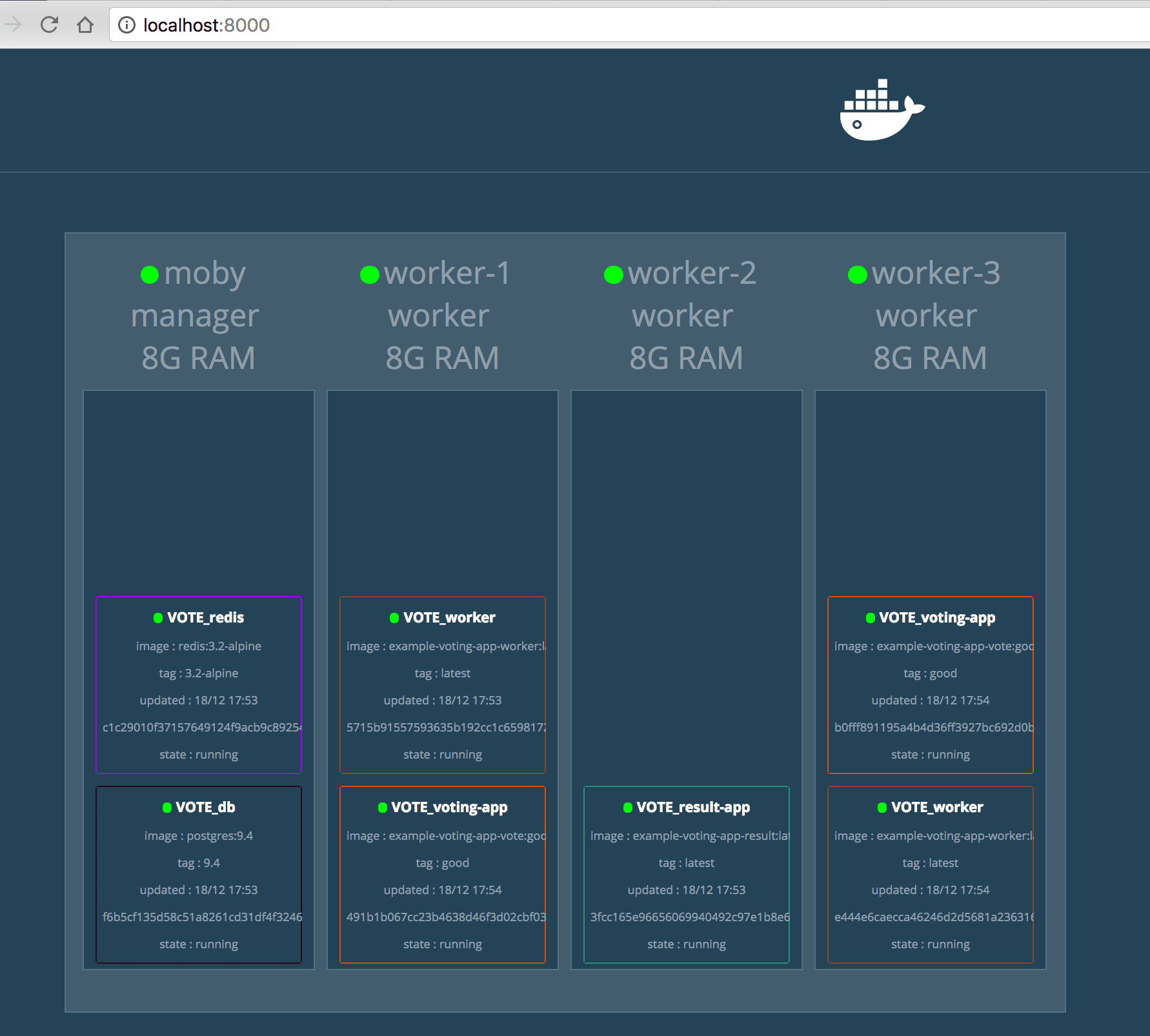

I’ve prepared a shell script to bootstrap a 4-node swarm cluster with a Docker registry mirror and the very nice swarm visualizer application.

The script initializes docker engine as a swarm master, then starts 3 new docker-in-docker containers and joins them to the swarm cluster as worker nodes. All worker nodes run with the --registry-mirror option.

#!/bin/bash

# vars
[ -z "$NUM_WORKERS" ] && NUM_WORKERS=3

# init swarm (needed for the service command), if not created yet
docker node ls 2> /dev/null | grep "Leader"
if [ $? -ne 0 ]; then
  docker swarm init > /dev/null 2>&1
fi

# get join token
SWARM_TOKEN=$(docker swarm join-token -q worker)

# get Swarm master IP (Docker for Mac xhyve VM IP)
SWARM_MASTER=$(docker info --format "{{.Swarm.NodeAddr}}")
echo "Swarm master IP: ${SWARM_MASTER}"
sleep 10

# start Docker registry mirror
docker run -d --restart=always -p 4000:5000 --name v2_mirror \
  -v "$PWD/rdata":/var/lib/registry \
  -e REGISTRY_PROXY_REMOTEURL=https://registry-1.docker.io \
  registry:2.5

# run NUM_WORKERS workers with SWARM_TOKEN
for i in $(seq "${NUM_WORKERS}"); do
  # remove node from cluster if exists
  docker node rm --force $(docker node ls --filter "name=worker-${i}" -q) > /dev/null 2>&1
  # remove worker container with same name if exists (running or stopped)
  docker rm --force $(docker ps -aq --filter "name=worker-${i}") > /dev/null 2>&1
  # run new worker container
  docker run -d --privileged --name worker-${i} --hostname=worker-${i} \
    -p ${i}2375:2375 \
    -p ${i}5000:5000 \
    -p ${i}5001:5001 \
    -p ${i}5601:5601 \
    docker:1.13-rc-dind --registry-mirror http://${SWARM_MASTER}:4000
  # add worker container to the cluster
  docker --host=localhost:${i}2375 swarm join --token ${SWARM_TOKEN} ${SWARM_MASTER}:2377
done

# show swarm cluster
printf "\nLocal Swarm Cluster\n===================\n"
docker node ls

# echo swarm visualizer
printf "\nLocal Swarm Visualizer\n===================\n"
docker run -it -d --name swarm_visualizer \
  -p 8000:8080 -e HOST=localhost \
  -v /var/run/docker.sock:/var/run/docker.sock \
  manomarks/visualizer:beta
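
Once the script finishes, each worker's Docker Engine should be reachable from the host through the port the script published for it (`${i}2375`). Here is a minimal sketch for checking every worker, assuming the script above ran with the same number of workers. The `worker_host` and `check_workers` helpers and the `DOCKER` override variable are my own illustrative names, not part of the original script.

```shell
#!/bin/bash
# DOCKER is a hypothetical override: set DOCKER=echo to print the
# commands instead of running them.
DOCKER="${DOCKER:-docker}"

# Map a worker index to the host port published by the bootstrap script.
worker_host() {
  echo "localhost:${1}2375"
}

# Ask every worker engine for its swarm state.
# A node that joined successfully is expected to report "active".
check_workers() {
  local n="${1:-3}"
  local i
  for i in $(seq "$n"); do
    printf 'worker-%s: ' "$i"
    $DOCKER --host="$(worker_host "$i")" info --format '{{.Swarm.LocalNodeState}}'
  done
}
```

Call it as `check_workers "${NUM_WORKERS:-3}"` after the bootstrap script has completed.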

Deploying multi-container application – the “old” way

Docker Compose is a tool (and deployment specification format) for defining and running multi-container Docker applications. Before Docker 1.12, you could use docker-compose to deploy such applications to a swarm cluster. With the 1.12 release and its swarm mode, that is no longer possible: docker-compose can deploy your application on a single Docker host only.

In order to deploy it to a swarm cluster, you need to create a special deployment specification file (also known as a Distributed Application Bundle) in dab format (see more here).

To create this file, run docker-compose bundle. This creates a JSON file that describes the multi-container application, with Docker images referenced by @sha256 digest instead of tags. Currently, the dab file format does not support many of the settings from docker-compose.yml, nor the options of the docker service create command.
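
For reference, the bundle step can be sketched as follows. This is an illustration under assumptions: the output file name `vote.dab`, the helper names and the `COMPOSE` override variable are hypothetical, and the digest listing simply greps the generated JSON rather than parsing it.

```shell
#!/bin/bash
# COMPOSE is a hypothetical override: set COMPOSE=echo for a dry run.
COMPOSE="${COMPOSE:-docker-compose}"

# Generate a bundle from the docker-compose.yml in the current directory.
make_bundle() {
  $COMPOSE bundle --output "${1:-vote.dab}"
}

# List the image references pinned by digest in a bundle file.
bundle_digests() {
  grep -o '[^"]*@sha256:[0-9a-f]*' "$1"
}
```

Running `make_bundle` and then `bundle_digests vote.dab` shows which images the bundle pins by digest.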

It’s really too bad: the dab bundle format looks promising, but it is practically useless in Docker 1.12.

Deploying multi-container application – the “new” way

With Docker 1.13, the “new” way to deploy a multi-container composed application is to use docker-compose.yml again (hurrah!). Kudos to the Docker team!

Note: You do not need the docker-compose tool. You only need a YAML file in docker-compose format (version: "3").

$ docker deploy --compose-file docker-compose.yml VOTE

Docker compose v3 (version: "3")

So, what’s new in docker compose version 3?

First, I suggest you take a deeper look at the docker-compose schema. It is an extension of the well-known docker-compose format.

Note: the docker-compose tool (ver. 1.9.0) does not support docker-compose.yml version: "3" yet.

The most visible change is around swarm service deployment.

Now you can specify all options supported by the docker service create/update commands, including:

- number of service replicas (or global service)

- service labels

- hard and soft limits for service (container) CPU and memory

- service restart policy

- service rolling update policy

- deployment placement constraints
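
To make the mapping concrete, here is a sketch of how the options listed above correspond to `docker service create` flags. The service name `demo-worker` and the specific values are illustrative assumptions, and the `DOCKER` variable is a hypothetical override that lets you print the command instead of running it.

```shell
#!/bin/bash
# DOCKER is a hypothetical override: set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# Create a replicated service with limits, restart and update policies,
# and a placement constraint. The image can be passed as the first argument.
create_demo_service() {
  $DOCKER service create \
    --name demo-worker \
    --replicas 2 \
    --label APP=VOTING \
    --limit-cpu 0.25 --limit-memory 512M \
    --reserve-cpu 0.25 --reserve-memory 256M \
    --restart-condition on-failure \
    --restart-delay 5s \
    --restart-max-attempts 3 \
    --update-parallelism 1 \
    --update-delay 10s \
    --update-max-failure-ratio 0.3 \
    --constraint 'node.role == worker' \
    "${1:-gaiadocker/example-voting-app-worker:latest}"
}
```

With a v3 compose file, the same settings live under the service's deploy key, so you do not have to repeat this long command line for every service.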

Docker compose v3 example

I’ve created a “new” compose file (v3) for the classic Cats vs. Dogs voting app. This example application contains 5 services with the following deployment configurations:

- voting-app: a Python webapp which lets you vote between two options; requires redis

- redis: Redis queue which collects new votes; deployed on swarm manager node

- db: Postgres database backed by a Docker volume; deployed on swarm manager node

- result-app: Node.js webapp which shows the results of the voting in real time; 2 replicas, deployed on swarm worker nodes

- worker: .NET worker which consumes votes and stores them in db;

  - # of replicas: 2 replicas

  - hard limit: max 25% CPU and 512MB memory

  - soft limit: max 25% CPU and 256MB memory

  - placement: on swarm worker nodes only

  - restart policy: restart on-failure, with 5 seconds delay, up to 3 attempts

  - update policy: one by one, with 10 seconds delay and 0.3 failure rate to tolerate during the update

version: "3"

services:

  redis:
    image: redis:3.2-alpine
    ports:
      - "6379"
    networks:
      - voteapp
    deploy:
      placement:
        constraints: [node.role == manager]

  db:
    image: postgres:9.4
    volumes:
      - db-data:/var/lib/postgresql/data
    networks:
      - voteapp
    deploy:
      placement:
        constraints: [node.role == manager]

  voting-app:
    image: gaiadocker/example-voting-app-vote:good
    ports:
      - 5000:80
    networks:
      - voteapp
    depends_on:
      - redis
    deploy:
      mode: replicated
      replicas: 2
      labels: [APP=VOTING]
      placement:
        constraints: [node.role == worker]

  result-app:
    image: gaiadocker/example-voting-app-result:latest
    ports:
      - 5001:80
    networks:
      - voteapp
    depends_on:
      - db

  worker:
    image: gaiadocker/example-voting-app-worker:latest
    networks:
      voteapp:
        aliases:
          - workers
    depends_on:
      - db
      - redis
    # service deployment
    deploy:
      mode: replicated
      replicas: 2
      labels: [APP=VOTING]
      # service resource management
      resources:
        # Hard limit - Docker does not allow to allocate more
        limits:
          cpus: '0.25'
          memory: 512M
        # Soft limit - Docker makes best effort to return to it
        reservations:
          cpus: '0.25'
          memory: 256M
      # service restart policy
      restart_policy:
        condition: on-failure
        delay: 5s
        max_attempts: 3
        window: 120s
      # service update configuration
      update_config:
        parallelism: 1
        delay: 10s
        failure_action: continue
        monitor: 60s
        max_failure_ratio: 0.3
      # placement constraint - in this case on 'worker' nodes only
      placement:
        constraints: [node.role == worker]

networks:
  voteapp:

volumes:
  db-data:

Run the docker deploy --compose-file docker-compose.yml VOTE command to deploy my version of the “Cats vs. Dogs” application on a swarm cluster.
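
After the deploy command returns, a few stack commands help confirm what was created. The sketch below assumes the stack name `VOTE` used above; the helper names and the `DOCKER` override variable are my own. Services created from a stack are prefixed with the stack name, so the `worker` service should show up as `VOTE_worker`.

```shell
#!/bin/bash
# DOCKER is a hypothetical override: set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# Build the swarm service name that a stack gives to a compose service.
stack_service_name() {
  echo "${1}_${2}"
}

# Show the services of a stack and the tasks behind them.
show_stack() {
  local stack="${1:-VOTE}"
  $DOCKER stack services "$stack"   # one line per service with replica counts
  $DOCKER stack ps "$stack"         # tasks and the nodes they were scheduled on
}

# Remove the whole stack when you are done experimenting.
remove_stack() {
  $DOCKER stack rm "${1:-VOTE}"
}
```

For example, `show_stack VOTE` lists the five services, and `docker service inspect "$(stack_service_name VOTE worker)"` shows the limits and policies applied to the worker.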

Hope you find this post useful. I look forward to your comments and any questions you have.
