What Is Harness?

Harness is a software delivery platform that automates continuous delivery (CD) processes. It provides features such as continuous integration, continuous deployment, and automated rollbacks, which help manage and automate deployment workflows. This automation reduces manual intervention, which in turn cuts down on errors and shortens deployment times.

Beyond its core CD functionalities, Harness also offers monitoring and reporting tools. These tools provide insights into deployment processes and application performance. Additionally, Harness uses machine learning to identify anomalies and improve the delivery process.

How Does Harness Work with Kubernetes?

Harness supports Kubernetes deployments and aims to reduce the complexity of managing containerized applications. It integrates with Kubernetes and supports several deployment types, including deployments based on Helm and Kustomize, two tools for packaging and customizing Kubernetes applications.

Harness facilitates deployment strategies like canary and blue/green deployments, making use of Kubernetes’ native capabilities. With these features, Harness can automatically verify deployments and manage traffic splitting, reducing the risk of introducing errors during updates.

In addition to deployment management, Harness supports the lifecycle of Kubernetes resources, such as manifests, custom resource definitions (CRDs), and operators. This ensures that users can leverage Kubernetes’ extensibility while maintaining control over the deployment process.

Quick Tutorial: Kubernetes Deployments with Harness

These instructions are based on the Harness documentation.

Setting Up a Kubernetes Cluster

To deploy an application using Harness, you’ll first need a target Kubernetes cluster that meets specific requirements:

- Nodes: The cluster should have at least 2 nodes.

- vCPUs: Each node should have 4 virtual CPUs.

- Memory: Allocate 16GB of memory.

- Disk size: Ensure there is at least 100GB of disk space available.

For a quickstart, a Google Kubernetes Engine (GKE) e2-standard-4 machine type will suffice. Your cluster must also have outbound HTTPS access to connect to key services like app.harness.io (Harness), github.com (source control), and hub.docker.com (container registry). In addition, ensure that TCP port 22 is open for SSH access.
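The sizing requirements above can be expressed as a small pre-flight check. The following is a minimal Python sketch, not part of Harness: the `Node` type and `check_cluster` function are hypothetical names, the node figures are entered by hand rather than read from a cluster, and treating memory and disk as per-node minimums is an assumption.

```python
from dataclasses import dataclass

# Minimums taken from the requirements list above.
# Assumption: memory and disk are treated as per-node figures.
MIN_NODES = 2
MIN_VCPUS = 4
MIN_MEMORY_GB = 16
MIN_DISK_GB = 100


@dataclass
class Node:
    """Hypothetical description of one cluster node."""
    name: str
    vcpus: int
    memory_gb: int
    disk_gb: int


def check_cluster(nodes):
    """Return a list of problems; an empty list means the cluster passes."""
    problems = []
    if len(nodes) < MIN_NODES:
        problems.append(f"need at least {MIN_NODES} nodes, found {len(nodes)}")
    for node in nodes:
        if node.vcpus < MIN_VCPUS:
            problems.append(f"{node.name}: {node.vcpus} vCPUs, need {MIN_VCPUS}")
        if node.memory_gb < MIN_MEMORY_GB:
            problems.append(f"{node.name}: {node.memory_gb}GB memory, need {MIN_MEMORY_GB}GB")
        if node.disk_gb < MIN_DISK_GB:
            problems.append(f"{node.name}: {node.disk_gb}GB disk, need {MIN_DISK_GB}GB")
    return problems


if __name__ == "__main__":
    # Example values only: one adequate node and one undersized node.
    cluster = [Node("node-a", 4, 16, 100), Node("node-b", 2, 8, 100)]
    for problem in check_cluster(cluster):
        print(problem)
```

An empty result means the cluster meets the listed minimums. The check covers sizing only, not the network requirements.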

You’ll need a Kubernetes service account with permissions to manage resources within the target namespace. This account should have list, get, create, and delete permissions, typically provided by the cluster-admin role or a namespace admin role. These permissions allow the service account to handle the necessary operations for deployment.
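For a namespace-scoped setup, the permissions described above could be granted with an RBAC Role and RoleBinding. The Python sketch below builds both objects and prints them as JSON, which `kubectl apply -f` accepts. The role name `harness-deployer`, the service account name, and the list of resources are assumptions chosen for illustration. The verbs mirror the four named in the text, and a real rollout may need more (for example update, patch, or watch).

```python
import json


def build_role(namespace, name="harness-deployer"):
    """Build a namespaced Role granting the four verbs named in the text."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                # Assumption: API groups and resources an NGINX deployment touches.
                "apiGroups": ["", "apps"],
                "resources": ["deployments", "replicasets", "pods", "services"],
                "verbs": ["list", "get", "create", "delete"],
            }
        ],
    }


def build_role_binding(namespace, service_account, role_name="harness-deployer"):
    """Bind the Role to a service account in the same namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role_name,
        },
    }


if __name__ == "__main__":
    # "default" and "harness-sa" are placeholder values.
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            build_role("default"),
            build_role_binding("default", "harness-sa"),
        ],
    }
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file and applying it with `kubectl apply -f` would create both objects. Binding the built-in cluster-admin role instead is simpler but grants far broader access.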

Creating the Deploy Stage for Your Kubernetes Pipeline

Harness pipelines consist of stages that define each step in the deployment process. To create a deploy stage for your Kubernetes pipeline:

- If you haven’t already, set up a new Harness project and make sure it includes the continuous delivery module.

- Go to the Deployments section in your project dashboard and select Create a Pipeline. Name the pipeline CD Quickstart and click Start. Your pipeline will now be created.

- Click Add Stage, choose Deploy, and name the stage deploy service. Ensure the Service option is selected, then click Set Up Stage.

- In the Service tab, click New Service. Name this service nginx and click Save. This service represents your application (in this case, NGINX) and can be reused across different stages of the pipeline.

Adding a Kubernetes Manifest for Deployment

With your service defined, you now need to specify the Kubernetes manifest, which defines the desired state of your application:

- In the Service Definition section, choose Kubernetes as the deployment type.

- Under Manifests, click Add Manifest. Choose K8s Manifest and then click Continue.

- Choose GitHub as the manifest store and set up a new GitHub connector by providing the following details:

  - Name: Enter a descriptive name for the connector.

  - URL type: Select Repository.

  - Connection type: Choose HTTP.

  - Git repository URL: Enter https://github.com/kubernetes/website.

  - Username and token: Provide your GitHub username and a personal access token (PAT) that you’ve configured with the necessary access rights.

You’ll also need to set up a Harness secret for securely storing the PAT. Once the connector is configured, test the connection to ensure it works correctly.

- In the Manifest Details section, provide the following details:

  - Manifest identifier: Enter nginx.

  - Git fetch type: Select Latest from Branch.

  - Branch: Specify main.

  - File/folder path: Enter the path to the manifest file: content/en/examples/application/nginx-app.yaml. This path points to a publicly available NGINX deployment manifest.

After setting this up, the manifest will be listed in the pipeline, linking the NGINX service to its corresponding Kubernetes deployment configuration.
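To inspect the manifest before wiring it into the pipeline, it helps to see how the repository URL, branch, and file path combine into a single file location. The sketch below maps those three values to GitHub's raw-file URL. The function name `raw_manifest_url` is hypothetical, and this is only a convenience for viewing the file by hand; Harness fetches the manifest through the connector.

```python
from urllib.parse import quote


def raw_manifest_url(repo_url, branch, path):
    """Map a GitHub repository URL, branch, and file path to the raw-file URL."""
    prefix = "https://github.com/"
    if not repo_url.startswith(prefix):
        raise ValueError("expected an https://github.com/ repository URL")
    owner_repo = repo_url[len(prefix):].rstrip("/")
    if owner_repo.endswith(".git"):
        owner_repo = owner_repo[: -len(".git")]
    # quote() leaves "/" unescaped by default, so nested paths are preserved.
    return f"https://raw.githubusercontent.com/{owner_repo}/{quote(branch)}/{quote(path)}"


if __name__ == "__main__":
    # Values from the connector and manifest details above.
    print(
        raw_manifest_url(
            "https://github.com/kubernetes/website",
            "main",
            "content/en/examples/application/nginx-app.yaml",
        )
    )
```

Opening the printed URL in a browser, or fetching it with a tool such as curl, shows the manifest that the pipeline will deploy.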

Defining the Target Cluster for the Deployment

Next, you’ll configure the target Kubernetes cluster where your application will be deployed:

- In the Infrastructure Details section, click New Environment. Name the environment, then click on Pre-Production and select Save.

- Select Kubernetes as the infrastructure definition type. This represents the physical resources where your deployment will occur.

- To define the cluster details, create a connector by choosing Select a connector and then New Connector. Enter the following details:

  - Name: Enter Kubernetes quickstart as the name.

  - Delegate: Select Use the credentials of a specific Harness Delegate. You’ll need to have a Delegate set up in your Kubernetes cluster to manage the connection. Select the appropriate Delegate using its tags, then click Save and Continue.

  - Cluster details: Provide the namespace in your Kubernetes cluster where the application will be deployed (e.g., default). In the Advanced section, enter a unique release name, such as quickstart, which will be applied as a label to the deployed resources. This name is used by Harness to track the deployment across the cluster.

- Click Next to continue to the Execution page.

Adding a Rollout Deployment Step to the Stage

After configuring the infrastructure, you’ll add a Kubernetes rollout deployment step to manage the actual deployment process:

- In the Execution Strategies section, choose Rolling as the deployment strategy, then click Use Strategy. This will add the Rollout Deployment step to your stage.

- The rolling update strategy ensures that new versions of your application are gradually rolled out with minimal downtime. Harness uses a default configuration of 25% max unavailable and 25% max surge.
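To make the 25% figures concrete, the sketch below computes how many pods a rolling update may add or remove at once for a given replica count. It follows the Kubernetes rule of rounding max surge up and max unavailable down. The function name `rolling_update_bounds` and the replica counts in the example are illustrative, not taken from the tutorial.

```python
def rolling_update_bounds(replicas, max_surge_pct=25, max_unavailable_pct=25):
    """Resolve percentage settings into absolute pod counts for a rolling update."""
    # Max surge rounds up (ceiling division on integers).
    surge = -((-replicas * max_surge_pct) // 100)
    # Max unavailable rounds down (floor division).
    unavailable = (replicas * max_unavailable_pct) // 100
    # Kubernetes does not allow both to resolve to zero, or the rollout
    # could never progress; it falls back to one unavailable pod.
    if surge == 0 and unavailable == 0:
        unavailable = 1
    return {
        "max_surge": surge,
        "max_unavailable": unavailable,
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


if __name__ == "__main__":
    for count in (3, 10):
        print(count, rolling_update_bounds(count))
```

With 3 replicas, the rollout may run one extra pod and must keep all 3 available. With 10 replicas, it may run up to 13 pods and must keep at least 8 available.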

Deploying and Reviewing Your Kubernetes Pipeline

Finally, deploy the configured pipeline:

- Click Save, then Save Pipeline, followed by Run. In the Primary Artifact section, choose the stable artifact version, which corresponds to the nginx:stable image in Docker Hub.

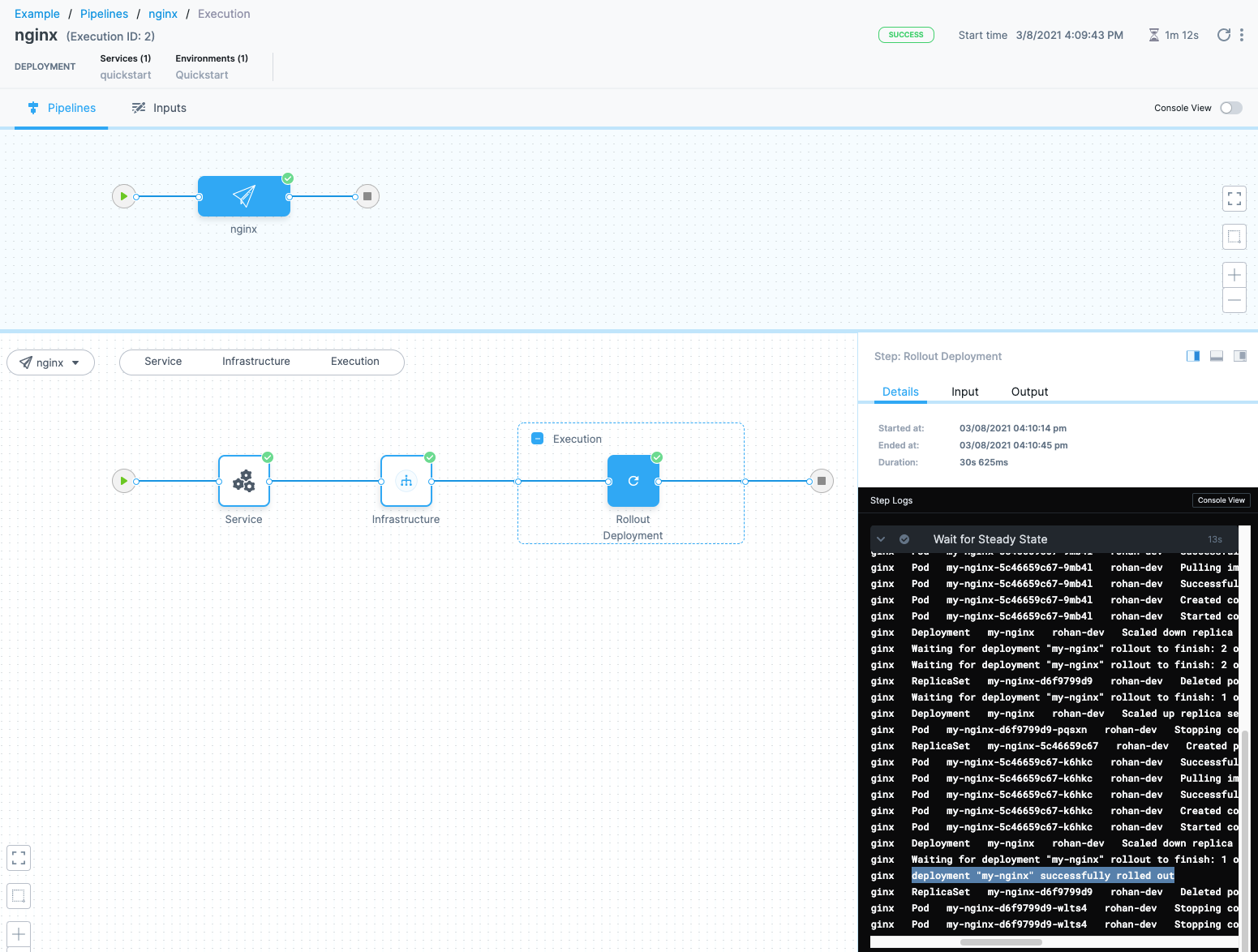

- As the pipeline runs, you can monitor the progress in real time using the console view. Expand the Wait for Steady State section under Rollout Deployment to see detailed logs. The deployment will be tracked, and you should see the successful rollout of your NGINX application, labeled my-nginx.

Source: Harness
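The idea behind waiting for a steady state can be sketched as a check on the Deployment's status fields. The Python function below is modeled loosely on the checks `kubectl rollout status` performs for a Deployment. It is not Harness's implementation, and the function name `rollout_status` is hypothetical. It takes a Deployment as a dictionary, such as the parsed output of `kubectl get deployment my-nginx -o json`.

```python
def rollout_status(deployment):
    """Return (done, message) for a Deployment object given as a dict."""
    spec = deployment.get("spec", {})
    status = deployment.get("status", {})
    generation = deployment.get("metadata", {}).get("generation", 0)
    desired = spec.get("replicas", 1)

    # The controller must have seen the latest spec before status is trusted.
    if status.get("observedGeneration", 0) < generation:
        return False, "waiting for the controller to observe the latest spec"

    updated = status.get("updatedReplicas", 0)
    total = status.get("replicas", 0)
    available = status.get("availableReplicas", 0)

    if updated < desired:
        return False, f"{updated} of {desired} replicas updated"
    if total > updated:
        return False, f"{total - updated} old replicas pending termination"
    if available < updated:
        return False, f"{available} of {updated} updated replicas available"
    return True, "rollout complete"


if __name__ == "__main__":
    # Hand-written example status: two of three new pods are available.
    in_progress = {
        "metadata": {"generation": 2},
        "spec": {"replicas": 3},
        "status": {
            "observedGeneration": 2,
            "replicas": 3,
            "updatedReplicas": 3,
            "availableReplicas": 2,
        },
    }
    print(rollout_status(in_progress))
```

Running this check in a loop until it reports completion, with a timeout, approximates what the console logs show during the Wait for Steady State step.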

Limitations of Deploying Kubernetes with Harness

While Harness offers useful tools for Kubernetes deployments, it has several limitations that can affect its effectiveness in certain scenarios, as reported by users on the G2 platform:

- Lack of support for non-Linux platforms: This limits the versatility of Harness, especially in environments where diverse operating systems are used. Additionally, the build tools within Harness are not highly customizable, making it difficult to tailor the deployment process to specific project needs.

- Separation of build configurations from the codebase: This separation can complicate collaboration in larger teams, as it may require a single administrator to manage configurations, reducing flexibility and increasing the risk of bottlenecks.

- Settings are overly basic: There are limited options to customize dependencies or configure more complex build scenarios. This simplicity extends to the dashboard and the user interface, which lack features like a comprehensive project summary map or real-time feedback on deployment issues.

- Performance issues: Live console output and build log updates often lag behind the actual build process. This delay hinders real-time debugging and problem resolution during deployments.

- Cumbersome upgrades: Moving from older to newer versions can be cumbersome, particularly when custom configurations need to be migrated.

- Documentation gaps: Some users report gaps in basic usage instructions and integration details.

Codefresh: Mature Harness Alternative

At the time of writing, Harness GitOps is in beta, so it is hard to compare the two platforms directly. Looking at the existing products and their history, we can make the following observations:

- Codefresh is and has always been a very focused solution. It is a Kubernetes native CI/CD platform based around GitOps. The end result is a unified platform designed from the ground up to accommodate full traceability for the whole software lifecycle.

- Harness started as a CD solution for virtual machines. It was then extended to cover CI with the acquisition of Drone.io in 2020, and is now being retrofitted with GitOps capabilities. Even after the Drone.io acquisition, there was a long period during which Harness CI and Harness CD were disjointed products. It remains to be seen whether GitOps support will be central to the platform or just an afterthought.

To address the critical point of the GitOps implementation in these products:

- Harness is in the early stages of adding GitOps functionality to its product.

- Codefresh is one of the founding members of the GitOps workgroup at opengitops.dev (Harness is not a member).

- Codefresh is an active contributor to the Argo Project, as evidenced by the project contribution stats. At the time of this writing, Harness does not contribute to the Argo Project.

- Codefresh is fully invested and committed to GitOps and the Argo community.