What Is Flagger?

Flagger is a progressive delivery tool for Kubernetes that automates the release process and helps roll applications out safely. It is part of the Flux family of open-source GitOps tools. Flagger integrates with Kubernetes through custom resource definitions (CRDs) that describe how a deployment should be orchestrated, which turns complex deployment strategies into manageable, automated steps.

Flagger aims to help manage the lifecycle of applications by reducing the risk of introducing new software versions in production. By automating deployments and employing strategies like canary releases, A/B testing, and blue/green deployments, Flagger minimizes the impact of new deployments on user experience and system stability.

In early 2024, Weaveworks, the primary developer and corporate sponsor of the Flux project, shut down. This raises uncertainty around the future of the Flagger project and is causing some organizations to consider migrating to alternative solutions, such as Argo Rollouts.

Flagger Features for Kubernetes

Flagger offers the following features for deploying applications with Kubernetes:

- Progressive delivery: Gives developers tools to release software incrementally while controlling and monitoring each phase of the release. This reduces risk and makes the transition between software versions smoother. The result is fewer disruptive rollbacks and a better user experience.

- Safer releases: Progressively increases the amount of traffic sent to a new version based on predefined metrics and health checks. If anomalies are detected during an update, Flagger automatically halts the rollout and rolls back to the previous stable version. It integrates with monitoring tools such as Prometheus to analyze metrics in real time, providing insight into application performance and user impact during the rollout.

- Flexible traffic routing: Allows for fine-grained control over how traffic is directed between application versions. This flexibility is useful for implementing blue/green or canary deployment strategies, where traffic gradually shifts from an old to a new version based on specified criteria. It integrates with service mesh technologies such as Istio and Linkerd to manipulate traffic flows, enabling developers to test features on a subset of the user base before wider rollout.

- Extensible validation: Allows teams to create custom validation checks as part of the deployment process, so automated testing and quality assurance run directly in the deployment workflow. By extending validation with webhooks, teams can require that a new version passes their own checks before it reaches end users.

- GitOps: Supports declarative configuration that maintains version control and synchronizes system states from a Git repository. This approach aligns with the principles of infrastructure as code and allows granular control over deployment pipelines and configurations.

How Flagger Works

Flagger automates the release process for applications running on Kubernetes by using a custom resource called Canary. Despite its name, the Canary resource is used for multiple deployment strategies, including blue/green. This resource defines the release strategy and is adaptable across different clusters, service meshes, and ingress providers. Here’s an overview of the Flagger workflow:

- Canary resource: This specifies how an application will be deployed. It outlines the gradual traffic shift to a new version while monitoring metrics like request success rate and response duration. The deployment is configured with details such as the target reference, service port, analysis intervals, and metrics thresholds. The canary analysis can be extended with custom metrics and automated testing to enhance validation.

- Deployment: Flagger manages both the primary and the canary deployment. The primary deployment is the stable version that receives all traffic by default. When a new version is deployed, Flagger performs a canary analysis by shifting traffic to the new version incrementally. If any issues are detected, Flagger routes all traffic back to the primary version.

- Autoscaling: Flagger can reference a Kubernetes Horizontal Pod Autoscaler (HPA). When an autoscaler reference is set, Flagger pauses traffic increases while the deployments are scaling, which helps limit resource usage during canary analysis.

- Service configuration: The canary resource also dictates how the target workload is exposed within the cluster. Flagger creates ClusterIP services for both the primary and canary deployments, ensuring traffic routing is handled correctly. Additional configurations, such as port mapping, metadata annotations, URI matching, and retry policies, can be specified to customize the service behavior.

- Status and monitoring: Flagger provides detailed status updates for canary deployments. The status includes the progress of the canary analysis, traffic weight, and any failures detected. Users can monitor the deployment status using kubectl commands.

- Finalizers and deletion: Upon canary deletion, Flagger typically leaves resources in their current state to simplify the process. However, enabling the revertOnDeletion attribute allows Flagger to revert resources to their initial state, ensuring system consistency.
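As a rough illustration of the workflow above, the following shell snippet writes a Canary manifest to a file. It is a sketch modeled on the podinfo example used in the Flagger documentation. The workload name podinfo, the namespace test, port 9898, and every interval and threshold are assumptions to adapt, not values prescribed by this article.

```shell
#!/bin/sh
# Write an illustrative Canary manifest to podinfo-canary.yaml.
# Assumptions: a Deployment and an HPA named "podinfo" exist in namespace "test",
# and the application listens on port 9898. Adjust all values for your workload.
cat > podinfo-canary.yaml <<'EOF'
apiVersion: flagger.app/v1beta1
kind: Canary
metadata:
  name: podinfo
  namespace: test
spec:
  # Workload that Flagger should manage
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  # Optional HPA reference
  autoscalerRef:
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    name: podinfo
  # How the workload is exposed inside the cluster
  service:
    port: 9898
  # Restore the original resources when this Canary is deleted
  revertOnDeletion: true
  analysis:
    interval: 1m      # how often checks run
    threshold: 10     # failed checks tolerated before rollback
    maxWeight: 50     # highest traffic percentage sent to the canary
    stepWeight: 5     # traffic increment per successful check
    metrics:
      - name: request-success-rate
        thresholdRange:
          min: 99     # percent
        interval: 1m
      - name: request-duration
        thresholdRange:
          max: 500    # milliseconds
        interval: 1m
EOF
echo "wrote podinfo-canary.yaml"
```

Applying the file with kubectl apply -f podinfo-canary.yaml registers the release strategy. After that, kubectl get canaries --all-namespaces shows the status and current traffic weight, and kubectl describe canary/podinfo -n test lists the events recorded during analysis.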

Which Deployment Strategies Are Supported by Flagger?

Here’s an overview of the different deployment strategies that can be used with Flagger.

Canary Release

In canary release strategies, a new version is gradually rolled out to a small percentage of users before being made available to everyone. This reduces the risk of negatively impacting the entire user base due to a faulty update and allows for performance and reliability testing under real conditions.

Flagger automates canary deployments by shifting traffic in configurable steps and checking metrics from the monitoring system at each step. Traffic to the new version increases only while the success criteria are met; otherwise the rollout is halted and rolled back, which provides a safety net.
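To make the traffic progression concrete, here is a small shell sketch of the arithmetic. It is a simplified model, not Flagger's implementation: the function names are hypothetical, the values (5% steps up to 50%, a 60-second interval, a threshold of 10) are assumptions, and clamping the last step to the maximum weight is a choice made for this sketch. The two timing formulas, interval × (maxWeight / stepWeight) for promotion and interval × threshold for rollback, follow the Flagger documentation.

```shell
#!/bin/sh
# Print the traffic split after each successful analysis step.
# Arguments: step_weight max_weight (both percentages).
canary_schedule() {
  step_weight=$1
  max_weight=$2
  if [ "$step_weight" -le 0 ]; then
    echo "step_weight must be positive" >&2
    return 1
  fi
  weight=0
  step=0
  while [ "$weight" -lt "$max_weight" ]; do
    weight=$((weight + step_weight))
    # Sketch-level assumption: never exceed the configured maximum
    if [ "$weight" -gt "$max_weight" ]; then
      weight=$max_weight
    fi
    step=$((step + 1))
    echo "step $step: canary=${weight}% primary=$((100 - weight))%"
  done
}

# Minimum seconds before promotion: interval * (max_weight / step_weight).
# Arguments: interval_seconds max_weight step_weight
min_promotion_seconds() {
  echo $(( $1 * $2 / $3 ))
}

# Seconds until rollback when every check fails: interval * threshold.
# Arguments: interval_seconds threshold
rollback_seconds() {
  echo $(( $1 * $2 ))
}

canary_schedule 5 50
echo "minimum promotion time: $(min_promotion_seconds 60 50 5)s"
echo "rollback after repeated failures: $(rollback_seconds 60 10)s"
```

With these assumed values the schedule has ten steps, so a healthy release needs at least ten minutes of analysis before promotion.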

A/B Testing

A/B testing allows teams to present two or more versions of an application simultaneously to different user segments. This strategy is useful for testing new features, UI changes, or functional adjustments to determine the best version based on user interaction and feedback.

Flagger uses its traffic routing capabilities to direct selected user segments, identified for example by HTTP headers or cookies, to a specific service version. Combined with metrics from monitoring and analytics tools, this yields insights that inform product development and optimization decisions.
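As a sketch of how such segmentation might be expressed, the snippet below writes an analysis fragment that routes requests to the new version based on a header or cookie match. It follows the pattern shown in the Flagger documentation for Istio. The header name x-canary, the cookie value, and the iteration count are assumptions, and the fragment belongs under spec in a Canary resource rather than standing alone.

```shell
#!/bin/sh
# Write an illustrative A/B testing fragment (not a complete manifest).
# Assumptions: header "x-canary" and cookie "canary=always" mark test users.
cat > ab-analysis.yaml <<'EOF'
# Place under "spec" in a Canary resource.
analysis:
  interval: 1m
  threshold: 10
  iterations: 10        # number of checks to run before promotion
  match:                # requests matching any rule go to the new version
    - headers:
        x-canary:
          regex: ".*insider.*"
    - headers:
        cookie:
          regex: "^(.*?;)?(canary=always)(;.*)?$"
EOF
echo "wrote ab-analysis.yaml"
```

Unlike a canary release, there is no stepWeight here: the matched segment receives the new version for the whole analysis rather than a growing percentage of all traffic.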

Blue/Green

In a blue/green deployment, Flagger manages two identical environments, only one of which is live at any given time. This allows deployments with little or no downtime and fast rollback. A new version is deployed to the “green” environment and, once it is deemed stable, traffic is switched from the “blue” (current production) environment to green, minimizing disruption.

This method ensures that potential issues are resolved in the green environment without affecting the live blue environment, providing a safe buffer. Flagger automates the switch and closely monitors the process.
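A blue/green analysis might be configured along the following lines. This is a sketch based on the Flagger documentation, in which setting iterations without any weight settings makes Flagger run its checks a fixed number of times and then switch traffic in one move. The provider value and the numbers are assumptions for illustration.

```shell
#!/bin/sh
# Write an illustrative blue/green fragment (not a complete manifest).
cat > bluegreen-analysis.yaml <<'EOF'
# Place under "spec" in a Canary resource.
provider: kubernetes    # plain Kubernetes networking, no service mesh assumed
analysis:
  interval: 1m          # time between checks
  threshold: 2          # failed checks tolerated before rollback
  iterations: 10        # checks to pass before traffic is switched
EOF
echo "wrote bluegreen-analysis.yaml"
```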

Blue/Green Mirroring

Blue/green mirroring extends the traditional blue/green model: Flagger deploys the new version alongside the old one and sends it a mirrored copy of incoming requests, while end users continue to be served by the old version. This allows real-world testing under production load, providing an accurate assessment of the new version’s performance relative to the existing one.

Because both versions see the same requests, they are evaluated under comparable conditions before full deployment. This minimizes risks associated with direct user impact and leads to more informed deployment decisions.
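Mirroring is typically switched on with a single flag in the analysis section. The fragment below is a sketch under the assumption that the cluster uses Istio, which is the provider the Flagger documentation describes for mirroring; the interval, threshold, and iteration values are illustrative.

```shell
#!/bin/sh
# Write an illustrative blue/green mirroring fragment (not a complete manifest).
# Assumption: the mesh provider supports request mirroring (Istio here).
cat > mirror-analysis.yaml <<'EOF'
# Place under "spec" in a Canary resource.
provider: istio
analysis:
  interval: 1m
  threshold: 2
  iterations: 10
  mirror: true          # copy live requests to the new version
EOF
echo "wrote mirror-analysis.yaml"
```

Since mirrored requests are processed twice, this approach suits requests that are safe to repeat, such as reads; writes that change state would be applied by both versions.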

Flagger Limitations

It’s important to note that Flagger also has some potential drawbacks:

- Complexity in debugging and troubleshooting: When issues arise, understanding the interplay between multiple components (service meshes, traffic routing rules, and custom resource definitions) can be challenging. The intricate setup can obscure the root cause of problems.

- Dependency on external tools for full functionality: Flagger often relies on external systems like Prometheus for monitoring and Linkerd for traffic management. Organizations must ensure these dependencies are properly configured and maintained, adding to the operational overhead and potential points of failure.

- Learning curve and configuration overhead: Configuring Flagger involves setting up and understanding custom resource definitions, metric thresholds, and integration points with other tools. This initial setup can be time-consuming and requires a thorough understanding of both Flagger and the underlying infrastructure.

- Complexity of integrating with existing workflows: Organizations with established deployment processes may need to make adjustments to accommodate Flagger’s requirements. This includes reconfiguring pipelines, updating monitoring systems, and rearchitecting parts of the application to support Flagger’s capabilities.

Tutorial: Installing Flagger with Helm on Kubernetes

In this section, we walk through installing Flagger on a Kubernetes cluster using Helm. The tutorial assumes that you have a Kubernetes cluster running v1.16 or newer and Helm v3 installed. The commands below are taken from the official Flagger documentation.

Step 1: Add the Flagger Helm Repository

First, add the Flagger Helm repository to your Helm configuration:

helm repo add flagger https://flagger.app

Step 2: Install Flagger’s Canary CRD

Flagger requires its Custom Resource Definition (CRD) to be installed. Apply the CRD with the following command:

kubectl apply -f https://raw.githubusercontent.com/fluxcd/flagger/main/artifacts/flagger/crd.yaml
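Before moving on, it can be worth confirming that the CRDs were registered. The helper below is a hypothetical convenience function, not part of Flagger; it assumes kubectl is configured for the target cluster and checks for the three CRD names that the Flagger manifest defines.

```shell
#!/bin/sh
# Report which Flagger CRDs are present; return non-zero if any is missing.
# Hypothetical helper for illustration only.
check_flagger_crds() {
  missing=0
  for crd in canaries.flagger.app metrictemplates.flagger.app alertproviders.flagger.app; do
    if kubectl get crd "$crd" >/dev/null 2>&1; then
      echo "found: $crd"
    else
      echo "missing: $crd"
      missing=1
    fi
  done
  return "$missing"
}

# Example (requires cluster access):
#   check_flagger_crds
```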

Step 3: Deploy Flagger for Different Service Meshes

Flagger can integrate with various service meshes, including Istio, Linkerd, App Mesh, and Open Service Mesh (OSM). The commands below cover Istio and Linkerd.

Deploy Flagger for Istio

If you are using Istio, deploy Flagger with the following command:

helm upgrade -i flagger flagger/flagger \
  --namespace=istio-system \
  --set crd.create=false \
  --set meshProvider=istio \
  --set metricsServer=http://prometheus:9090

For an Istio multi-cluster shared control plane setup, use:

helm upgrade -i flagger flagger/flagger \
  --namespace=istio-system \
  --set crd.create=false \
  --set meshProvider=istio \
  --set metricsServer=http://istio-cluster-prometheus:9090 \
  --set controlplane.kubeconfig.secretName=istio-kubeconfig \
  --set controlplane.kubeconfig.key=kubeconfig

Ensure that the Istio kubeconfig is stored in a Kubernetes secret with the data key named kubeconfig.
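One way to create that secret is sketched below. The function name is hypothetical and the path to the kubeconfig file is supplied by the caller; the secret name istio-kubeconfig, the namespace istio-system, and the data key kubeconfig match the values used in the Helm command above.

```shell
#!/bin/sh
# Store a kubeconfig file in the secret that Flagger is configured to read.
# Argument: path to the kubeconfig of the Istio control plane cluster.
# Hypothetical helper for illustration only.
create_istio_kubeconfig_secret() {
  kubeconfig_path=$1
  if [ ! -f "$kubeconfig_path" ]; then
    echo "kubeconfig not found: $kubeconfig_path" >&2
    return 1
  fi
  # The part before "=" in --from-file sets the data key name
  kubectl create secret generic istio-kubeconfig \
    --namespace=istio-system \
    --from-file=kubeconfig="$kubeconfig_path"
}

# Example (requires cluster access; the path is a placeholder):
#   create_istio_kubeconfig_secret "$HOME/.kube/istio-control-plane"
```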

Deploy Flagger for Linkerd

For Linkerd, deploy Flagger with the following command:

helm upgrade -i flagger flagger/flagger \
  --namespace=linkerd \
  --set crd.create=false \
  --set meshProvider=linkerd \
  --set metricsServer=http://linkerd-prometheus:9090
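After either install command, you may want to wait until the controller is running before creating Canary resources. The sketch below wraps kubectl rollout status in a hypothetical helper. It assumes the Helm release is named flagger as in the commands above, which results in a Deployment of the same name, and the two-minute timeout is an arbitrary choice.

```shell
#!/bin/sh
# Wait for the Flagger controller Deployment to become ready.
# Argument: namespace used for the Helm release (istio-system or linkerd above).
# Hypothetical helper for illustration only.
wait_for_flagger() {
  namespace=$1
  kubectl --namespace "$namespace" rollout status deployment/flagger --timeout=120s
}

# Example (requires cluster access):
#   wait_for_flagger istio-system
```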

Step 4: Installing Flagger for Ingress Controllers

Flagger can also be installed to work with various ingress controllers like Contour, Gloo, NGINX, Skipper, Traefik, and APISIX. You can generate the necessary YAML files using the helm template command and apply them with kubectl.

Here’s an example that generates the YAML for an Istio setup; for an ingress controller, adjust the namespace and chart values accordingly:

helm fetch --untar --untardir . flagger/flagger &&
helm template flagger ./flagger \
  --namespace=istio-system \
  --set metricsServer=http://prometheus.istio-system:9090 \
  > flagger.yaml

To apply the YAML:

kubectl apply -f flagger.yaml
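For an ingress controller, a direct Helm installation might look like the following sketch. The function name is hypothetical, and the example values (provider nginx, namespace ingress-nginx) are assumptions. The meshProvider value selects the provider as in the mesh commands above; prometheus.install is assumed here to be the chart value that deploys a bundled Prometheus, so check it against the chart's values before relying on it.

```shell
#!/bin/sh
# Install Flagger for an ingress controller with Helm.
# Arguments: provider (for example nginx or contour) and target namespace.
# Hypothetical helper for illustration only.
install_flagger_for_ingress() {
  provider=$1
  namespace=$2
  helm upgrade -i flagger flagger/flagger \
    --namespace="$namespace" \
    --set meshProvider="$provider" \
    --set prometheus.install=true
}

# Example (requires cluster access and the flagger Helm repository):
#   install_flagger_for_ingress nginx ingress-nginx
```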

Codefresh and Argo Rollouts: Future-Proof Flagger Alternative

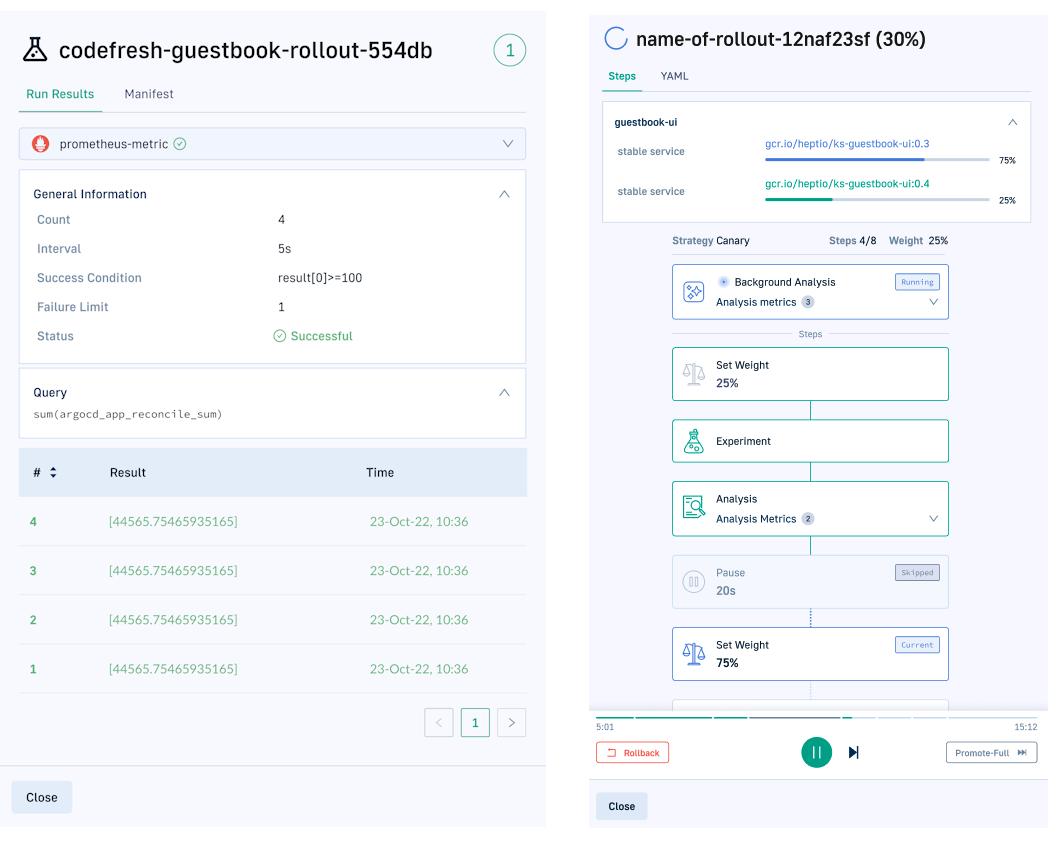

If you are looking for an alternative way of handling progressive delivery, Argo Rollouts is another Kubernetes controller with similar capabilities, including canary and blue/green deployments. The Enterprise version of Codefresh includes Argo Rollouts and offers additional features such as:

- Detailed analysis overview for smoke tests and metrics

- Interactive dashboard for monitoring canaries and promoting them

- Enterprise-grade security and authentication

- Support for single sign-on (SSO)

- Integration with Argo CD

- Deployment history, insights and other analytics

Teams that adopt Codefresh deploy more often, with greater confidence, and are able to resolve issues in production much more quickly. This is because we unlock the full potential of Argo to create a single cohesive software supply chain. For users of traditional CI/CD tooling, the fresh approach to software delivery is dramatically easier to adopt, more scalable, and much easier to manage with the unique hybrid model.
