This week we welcomed Oren Rubin, founder of Testim, to talk about how machine learning, Kubernetes pipelines, and Testim can make test creation painless.

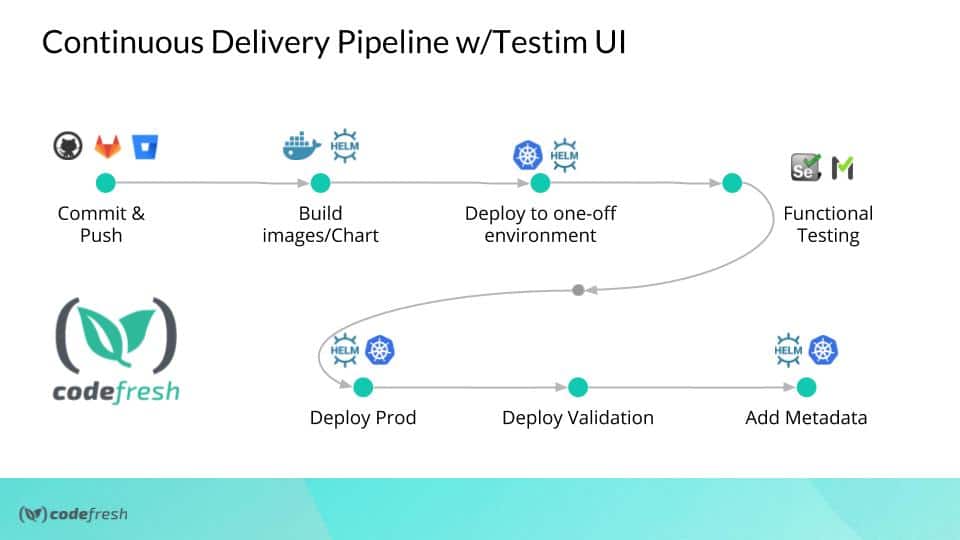

There are two big hurdles to solve when adding UI testing to your software delivery pipeline:

1) How to stand up an environment and

2) How to create tests that scale

In this webinar, Oren and Kubernaut Dan Garfield walk through the benefits and challenges of UI/E2E testing and show how machine learning can make it better. The session includes a demo and a live Q&A.

Don’t worry if you missed it; we recorded it for you here:

Have questions? Schedule a 1:1 with us by clicking here

You can also view the slide deck below:

The YAML – Simple version

This is all the YAML needed to run Testim in a Codefresh pipeline.

```yaml
# Add this step under the pipeline's top-level "steps:" section.
RunTestim:
  title: Use Testim to do AI UI Tests
  image: testim/docker-cli
  environment:
    - token=${{TESTIM_TOKEN}}
    - project=${{TESTIM_PROJECT}}
    - label=sanity
    - host=ondemand.testim.io
    - port=4444
    # Underscore, not hyphen: the shell cannot expand a variable named "base-url".
    - base_url=${{APP_URL}}
  commands:
    - testim --token "${token}" --project "${project}" --host "${host}" --port "${port}" --label "${label}" --base-url "${base_url}" --report-file ${{CF_VOLUME_PATH}}/testim-results.xml
```
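One detail worth checking in any step like this: environment variable names used from `commands` should contain only letters, digits, and underscores, because a shell cannot expand a name with a hyphen such as `base-url`. The POSIX shell sketch below shows what happens instead; the `BASE_URL` name and its value are hypothetical, chosen only for illustration.

```shell
#!/bin/sh
# POSIX parses "${base-url}" as ${base-word}: the value of $base, or the
# literal "url" if base is unset. The hyphenated variable is never consulted.
unset base

# Even when a variable literally named "base-url" is in the environment,
# the child shell cannot reach it by name.
env 'base-url=https://staging.example.com' sh -c 'printf "%s\n" "${base-url}"'   # prints: url

# A name made of letters, digits, and underscores expands normally.
BASE_URL="https://staging.example.com"   # hypothetical value
printf '%s\n' "${BASE_URL}"              # prints: https://staging.example.com
```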

The YAML – Parsing Output

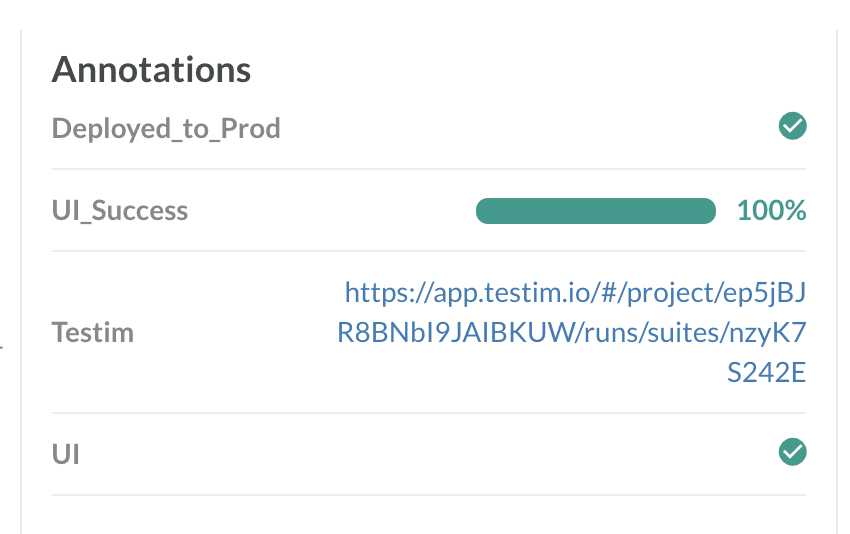

Parsing the Testim results and attaching them to our images takes a few more steps. Here’s the YAML. This longer version adds test-result metadata (a link to the Testim run and the pass rate) to the image built by an earlier `BuildingTestDockerImage` step.

```yaml
# Add these steps under the pipeline's top-level "steps:" section.
RunTestim:
  title: Use Testim to do AI UI Tests
  image: testim/docker-cli
  environment:
    - token=${{TESTIM_TOKEN}}
    - project=${{TESTIM_PROJECT}}
    - label=sanity
    - host=ondemand.testim.io
    - port=4444
    - base_url=${{APP_URL}}
  commands:
    # Run the suite and keep a copy of the console output for parsing.
    # Note: because of the pipe into tee, this line's exit status is tee's, not
    # testim's (unless pipefail is enabled), so failing tests may not trigger on_fail.
    - testim --token "${token}" --project "${project}" --host "${host}" --port "${port}" --label "${label}" --base-url "${base_url}" --report-file ${{CF_VOLUME_PATH}}/testim-results.xml | tee ${{CF_VOLUME_PATH}}/testims
    # Pull the batch ID out of the "Batch execution" line and print the results URL.
    - grep "Batch execution" ${{CF_VOLUME_PATH}}/testims | grep -Eo '[a-zA-Z0-9]{10,}' > ${{CF_VOLUME_PATH}}/batchid
    - url=$(cat ${{CF_VOLUME_PATH}}/batchid)
    - echo "https://app.testim.io/#/project/${{TESTIM_PROJECT}}/runs/suites/$url"
    # Read the totals from the JUnit report (the attribute is "failures", plural).
    - tests=$(grep -Eo 'tests="[0-9]+' ${{CF_VOLUME_PATH}}/testim-results.xml | head -n 1 | grep -Eo '[0-9]+')
    - testsfailed=$(grep -Eo 'failures="[0-9]+' ${{CF_VOLUME_PATH}}/testim-results.xml | head -n 1 | grep -Eo '[0-9]+')
    # Make the values available to later steps.
    - cf_export URL=$url
    - cf_export TESTS=$tests
    - cf_export TESTSFAILED=$testsfailed
  on_success: # Execute only once the step succeeded
    metadata: # Declare the metadata attribute
      set: # Specify the set operation
        - ${{BuildingTestDockerImage.imageId}}: # Select any number of target images
            - UI: true
  on_fail:
    metadata: # Declare the metadata attribute
      set: # Specify the set operation
        - ${{BuildingTestDockerImage.imageId}}: # Select any number of target images
            - UI: false

CalculateTests: # This step runs bc to compute the percentage of tests that passed.
  title: Calculate Tests Passed
  image: frolvlad/alpine-bash
  commands:
    # Passed tests divided by total tests, as a percentage with two decimals.
    - SUCCESSRATE=$(echo "scale=2; (${TESTS}-${TESTSFAILED})*100/${TESTS}" | bc)
    - echo $SUCCESSRATE
    - cf_export SUCCESSRATE=$SUCCESSRATE

CollectTestResults: # This step takes all the variables we gathered and adds them to our image.
  title: Capture Results
  image: codefresh/cli
  commands:
    - codefresh --help
    - echo ${SUCCESSRATE}
  on_finish:
    metadata:
      set:
        - ${{BuildingTestDockerImage.imageId}}: # Select any number of target images
            - Testim: "https://app.testim.io/#/project/${{TESTIM_PROJECT}}/runs/suites/${{URL}}"
            - UI_Success: "${{SUCCESSRATE}}%"
```
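To check the parsing and pass-rate arithmetic outside a pipeline, here is a minimal POSIX shell sketch. It assumes a JUnit-style report whose first `tests` and `failures` attributes hold the totals. The sample report, the helper names `count_attr` and `pass_rate`, and the use of `awk` in place of `bc` are all illustrative choices, not part of Testim or Codefresh.

```shell
#!/bin/sh
# Sketch: extract totals from a JUnit-style XML report and compute a pass rate.
# The sample report below is hypothetical; Testim's real report may differ.

# count_attr FILE ATTR -> first integer value of ATTR="N" found in FILE
count_attr() {
  grep -Eo "$2=\"[0-9]+" "$1" | head -n 1 | grep -Eo '[0-9]+'
}

# pass_rate TOTAL FAILED -> percentage of passing tests, two decimals.
# Uses awk instead of bc; returns 0.00 when there are no tests at all.
pass_rate() {
  awk -v t="$1" -v f="$2" 'BEGIN {
    if (t == 0) { print "0.00" } else { printf "%.2f\n", (t - f) * 100 / t }
  }'
}

report=$(mktemp)
cat > "$report" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<testsuites tests="4" failures="1">
  <testsuite name="sanity" tests="4" failures="1">
    <testcase name="login"/>
    <testcase name="search"/>
    <testcase name="checkout"><failure message="timeout"/></testcase>
    <testcase name="logout"/>
  </testsuite>
</testsuites>
EOF

tests=$(count_attr "$report" tests)
failed=$(count_attr "$report" failures)
rate=$(pass_rate "$tests" "$failed")
echo "tests=$tests failed=$failed pass_rate=${rate}%"
rm -f "$report"
```

Run as-is, it prints `tests=4 failed=1 pass_rate=75.00%`. Dividing passed tests by total tests keeps the result between 0 and 100, and the zero-test guard avoids a division by zero when a report contains no tests.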

New to Codefresh? Get started with Codefresh by signing up for an account today!

Like what you saw? Schedule a demo with Testim at testim.io!