What Is Artifactory?

Artifactory is a universal repository manager designed to store, manage, and distribute software artifacts and containers across development and deployment pipelines. It acts as a centralized solution for managing binary artifacts such as Docker images, Maven libraries, and npm packages. By providing a unified interface, Artifactory simplifies dependency management, version control, and artifact sharing for development teams.

Artifactory can integrate with CI/CD pipelines, enabling seamless automation of build, test, and deployment workflows. Its flexibility allows it to support multiple package formats and integrate with various DevOps tools, making it a useful component in modern software development ecosystems.

What Is a Docker Registry?

A Docker registry is a storage and distribution system for Docker images, which are used for containerized application deployment. It acts as a centralized location where users can push, pull, and manage their Docker images securely.

Registries can be public or private, with public ones like Docker Hub offering images for a wide array of applications, while private registries provide controlled environments for organizations to store sensitive or proprietary images. Artifactory can be used to set up private registries.

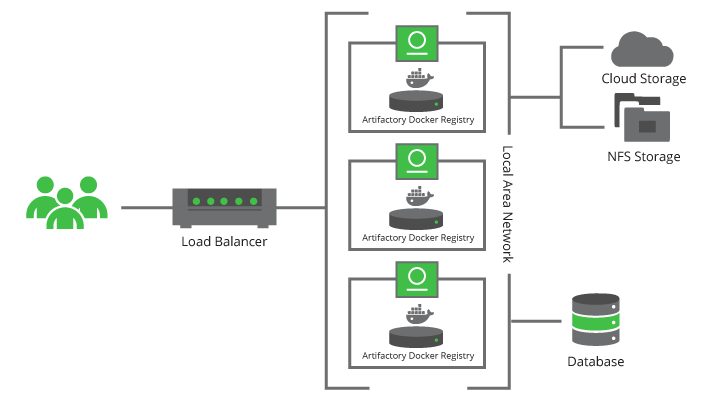

Deployment Options for Artifactory as Docker Registry

Artifactory can be deployed as a Docker registry in a cloud or on-premises system.

Cloud-Based

Using Artifactory in the cloud eliminates the need for managing infrastructure, as the solution is hosted by JFrog or other cloud providers. This approach is appropriate for organizations seeking rapid deployment and scalability. The cloud-based option provides seamless updates, reliable backups, and high availability without requiring manual configuration or maintenance.

Additionally, integrating with cloud-native services like Kubernetes or serverless architectures is simplified in a cloud-based setup. Cloud Artifactory can leverage the scalability of the hosting platform, ensuring performance and reliability even under changing workloads.

On-Premises

Deploying Artifactory on-premises offers full control over the environment, making it preferable for organizations with strict compliance or data residency requirements. On-premises installations allow teams to configure the server according to their specific network and security needs, such as using custom firewalls or VPNs.

However, this option requires maintaining hardware, applying updates, and ensuring high availability. On-prem deployments are suited for teams with the necessary resources and expertise to handle these complexities.

Source: JFrog

Benefits of Using JFrog Artifactory as Docker Registry

JFrog Artifactory is a flexible Docker registry solution, enabling organizations to securely store, manage, and distribute Docker images. Here’s why Artifactory is a popular choice for Docker registry management:

- Flexible registry setup: Supports unlimited Docker registries, allowing teams to create multiple repositories for different projects, improving organization and access control.

- Docker client integration: Fully compatible with Docker registry API calls, enabling straightforward use with Docker clients for easy image push and pull.

- Enhanced security with fine-grained access control: Offers secure local repositories with strict access protocols to protect sensitive images and ensure only authorized access.

- Optimized image access: Caches images from remote sources, such as Docker Hub, reducing network dependency and providing faster, reliable access to images.

- Support for multi-architecture builds: Integrates with Docker Buildx to create and store multi-platform images in one registry, simplifying deployment across varied architectures.

- OCI compliance: Supports Open Container Initiative (OCI) standards, enabling compatibility with OCI clients and expanding usability in modern cloud-native setups.

Tutorial: Using Artifactory as a Docker Registry

This tutorial provides an overview of how to set up and start using a Docker repository in Artifactory as a cloud or on-premises deployment. These instructions are adapted from the Artifactory documentation.

Using Docker and Artifactory Cloud

Step 1: Set Up the Docker Repository on Artifactory Cloud

To begin using JFrog Artifactory as a Docker registry, you first need to set up a Docker repository on Artifactory Cloud. Artifactory Cloud is hosted, so you don’t need to configure it with a reverse proxy.

Once you have an Artifactory account, follow these steps:

- Create a Docker repository: In the Artifactory interface, navigate to the Repositories section, and create a virtual Docker repository. This will serve as the main point for pushing and pulling Docker images.

- Log in to Artifactory from Docker: Use the Docker client to log in to the Artifactory repository with your credentials, replacing <server-name> with the name of your Artifactory Cloud server:

docker login <server-name>.jfrog.io

You’ll be prompted to enter a username and password or an API token.

Step 2: Pull and Push Docker Images

To confirm the setup, try pulling and pushing Docker images:

1. Pull an image: Start by pulling a sample image, such as hello-world, from Docker Hub.

docker pull hello-world

2. Tag the image: Assign a new tag to the hello-world image so it aligns with the repository structure in Artifactory.

docker tag hello-world <server-name>.jfrog.io/<repo-name>/hello-world

3. Push the image: Finally, push the tagged image to the Artifactory Docker repository.

docker push <server-name>.jfrog.io/<repo-name>/hello-world
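The pull, tag, and push steps above can be combined into one small script. The following is a minimal sketch, not an official JFrog script: the server name `acme`, the repository name `docker-virtual`, and the `run` helper with its `DRY_RUN` switch are all hypothetical choices made for illustration. By default the script only prints the Docker commands it would run.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical values for illustration; replace them with your own.
SERVER_NAME="${SERVER_NAME:-acme}"
REPO_NAME="${REPO_NAME:-docker-virtual}"
IMAGE="${IMAGE:-hello-world}"
TAG="${TAG:-latest}"

# Build the image reference used in this tutorial:
# <server-name>.jfrog.io/<repo-name>/<image>:<tag>
image_ref() {
  printf '%s.jfrog.io/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# Print each command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

TARGET="$(image_ref "$SERVER_NAME" "$REPO_NAME" "$IMAGE" "$TAG")"

run docker pull "$IMAGE:$TAG"
run docker tag "$IMAGE:$TAG" "$TARGET"
run docker push "$TARGET"
```

Running the script with `DRY_RUN=0` after a successful `docker login` executes the three commands instead of printing them.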

Step 3: Integrate Artifactory with Kubernetes (Optional)

If you’re deploying to Kubernetes, configure it to pull images from your private Artifactory registry:

1. Create a Docker registry secret: Generate a Kubernetes secret to connect to Artifactory.

kubectl create secret docker-registry regcred \
  --docker-server=<JFROG-HOSTNAME> \
  --docker-username=<JFROG-USERNAME> \
  --docker-password=<YOUR-PASSWORD> \
  --docker-email=<YOUR-EMAIL> \
  --namespace <YOUR-NAMESPACE>

For added security, use a dedicated user with restricted permissions.

2. Set up imagePullSecrets in Kubernetes: To ensure Kubernetes uses the secret for pulling images, add it to the imagePullSecrets list in the namespace’s default Service Account.

kubectl edit serviceaccount default -n <YOUR-NAMESPACE>

3. Update the imagePullSecrets section to include the secret name regcred as shown below:

apiVersion: v1
kind: ServiceAccount
imagePullSecrets:
- name: regcred

4. Verify the configuration: To confirm everything is working, list the pods in the namespace and check that pods using images from Artifactory reach the Running state rather than failing with ImagePullBackOff:

kubectl get pods -n <YOUR-NAMESPACE>
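For automated setups, the interactive `kubectl edit` step can be replaced by a `kubectl patch` call. The sketch below assumes the secret is named `regcred` as in the steps above; the `default` namespace value and the `run` dry-run helper are hypothetical and only there for illustration. It prints the commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical defaults for illustration; replace them with your own.
NAMESPACE="${NAMESPACE:-default}"
SECRET_NAME="${SECRET_NAME:-regcred}"

# Build the JSON patch that references the pull secret by name.
pull_secret_patch() {
  printf '{"imagePullSecrets": [{"name": "%s"}]}' "$1"
}

# Print each command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

# Patch the default service account so that it references the secret.
run kubectl patch serviceaccount default -n "$NAMESPACE" \
  -p "$(pull_secret_patch "$SECRET_NAME")"

# Show the resulting service account for a quick manual check.
run kubectl get serviceaccount default -n "$NAMESPACE" -o yaml
```

Pods that are created in the namespace after the patch pick up the secret through the service account; pods that already exist need to be recreated.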

TIPS FROM THE EXPERT

In my experience, here are tips that can help you maximize efficiency and security when using Artifactory as a Docker registry:

- Use tag immutability for production images: Enable tag immutability on production repositories to ensure image consistency across environments. This prevents overwrites and keeps builds predictable, making it easier to trace back deployments and fixes.

- Set up local caching for frequently used images: Configure local caching for popular base images and dependencies. This reduces external dependencies and accelerates build times, particularly useful in remote or restricted network environments where access to Docker Hub may be limited.

- Automate image promotions using Artifactory’s REST API: Use the Artifactory API to automate image promotion between repositories (e.g., from staging to production). This not only maintains consistency but also streamlines promotion workflows, reducing human error in moving images between stages.

- Establish retention policies for tagged images based on lifecycle: Create retention policies aligned with your development cycle (e.g., shorter retention for development tags and longer for production images). Automated purging of outdated tags keeps the repository clean and reduces storage overhead.

- Enable checksum-based deduplication for multi-arch images: Artifactory’s checksum-based storage is especially effective with multi-architecture images, as shared layers can be stored once and reused. This significantly reduces storage consumption when using multi-platform builds with Docker Buildx.
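As an illustration of the promotion tip, the sketch below calls Artifactory's Docker promotion endpoint with curl. It is a hedged example rather than a reference implementation: the base URL, the repository names `docker-staging-local` and `docker-prod-local`, the tag, and the `ARTIFACTORY_TOKEN` variable are hypothetical, and the endpoint path and request fields should be checked against the REST API documentation for your Artifactory version. Without a token the script only prints the request body.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical values for illustration; replace them with your own.
ARTIFACTORY_URL="${ARTIFACTORY_URL:-https://acme.jfrog.io/artifactory}"
SOURCE_REPO="${SOURCE_REPO:-docker-staging-local}"
TARGET_REPO="${TARGET_REPO:-docker-prod-local}"
IMAGE="${IMAGE:-hello-world}"
TAG="${TAG:-1.0.0}"

# Build the JSON body for the promotion request.
# "copy": true asks Artifactory to keep the image in the source repository.
promote_body() {
  printf '{"targetRepo": "%s", "dockerRepository": "%s", "tag": "%s", "copy": true}' \
    "$1" "$2" "$3"
}

# Send the promotion request for one image tag.
promote_image() {
  curl -fsS -X POST \
    -H "Authorization: Bearer $ARTIFACTORY_TOKEN" \
    -H "Content-Type: application/json" \
    -d "$(promote_body "$TARGET_REPO" "$IMAGE" "$TAG")" \
    "$ARTIFACTORY_URL/api/docker/$SOURCE_REPO/v2/promote"
}

if [ -n "${ARTIFACTORY_TOKEN:-}" ]; then
  promote_image
else
  echo "ARTIFACTORY_TOKEN is not set; the request body would be:"
  promote_body "$TARGET_REPO" "$IMAGE" "$TAG"
  echo
fi
```

In a CI pipeline, a step like this would typically run only after the staging tests pass, so that the production repository receives only verified tags.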

Using Docker and Artifactory On-Premises (Self-Hosted)

Setting up a self-hosted JFrog Artifactory as a Docker registry involves creating a Docker repository, configuring a reverse proxy, and integrating with the Docker client. Here’s a step-by-step guide.

Step 1: Configure Artifactory

Start by configuring Artifactory to support Docker registries.

- Create a Docker repository: In Artifactory, go to the Repositories section and set up a virtual Docker repository (e.g., named docker-virtual). This virtual repository will aggregate any local and remote Docker repositories.

- Obtain an SSL certificate: Use a wildcard SSL certificate or create a self-signed certificate if using a local setup.

- Configure the reverse proxy method: Choose a reverse proxy setup for managing Docker requests, such as the Subdomain, Ports, or Repository Path method, based on the network setup and requirements.

Step 2: Configure the Reverse Proxy

Depending on the reverse proxy setup, you can follow different methods. Here, we outline the Subdomain method, which simplifies mapping Docker commands to your chosen repositories and requires only a one-time configuration:

1. Generate the configuration file: Use Artifactory’s Reverse Proxy Configuration Generator to create a configuration file for NGINX or Apache.

2. Edit and place the configuration:

- For NGINX: Copy the generated configuration into a file called artifactory-nginx.conf. Place it in /etc/nginx/sites-available and create a symbolic link to enable it:

sudo ln -s /etc/nginx/sites-available/artifactory-nginx.conf /etc/nginx/sites-enabled/artifactory-nginx.conf

- For Apache: Copy the generated configuration into a file called artifactory-apache.conf. Place it in /etc/apache2/sites-available and enable it:

sudo ln -s /etc/apache2/sites-available/artifactory-apache.conf /etc/apache2/sites-enabled/artifactory-apache.conf

3. Restart the reverse proxy:

sudo systemctl restart nginx    # For NGINX
sudo systemctl restart apache2  # For Apache

Step 3: Configure the Docker Client

Follow these steps:

1. Add DNS or host entry: Map the Artifactory subdomain to the server’s IP address in the /etc/hosts file.

<ip-address> art.local

2. Configure insecure registry (if self-signed): If using a self-signed certificate, add the following line in the Docker configuration file /etc/docker/daemon.json:

{ "insecure-registries": ["art.local"] }

3. Then, restart the Docker daemon:

sudo systemctl restart docker

Step 4: Test the Setup

Run the following commands to verify connectivity and functionality:

1. Pull a test image:

docker pull hello-world

2. Log in to Artifactory:

docker login art.local

3. Tag and push the image:

docker tag hello-world art.local/docker-virtual/hello-world
docker push art.local/docker-virtual/hello-world

4. Verify reverse proxy connection: Check the reverse proxy response to confirm configuration:

curl -I -k -v https://art.local/api/system/ping

Following these steps sets up a self-hosted JFrog Artifactory instance as a Docker registry, allowing you to manage images securely and streamline Docker image distribution across environments.

Best Practices for Using Artifactory as a Docker Registry

Leveraging Artifactory as a Docker registry requires implementing best practices to optimize its use and ensure operational efficiencies.

Use Virtual Repositories for Simplified Access

Virtual repositories in Artifactory aggregate multiple repositories, exposing a single URL for client access and simplifying Docker image management. By consolidating repositories, users reduce complexity and improve image searching and retrieval efficiency. This setup also improves security by providing a unified control point for repository access and permissions.

Organizations can categorize images using virtual repositories, aligning them with project phases or department needs. This categorization streamlines development processes by providing developers with clear paths to required images, ultimately optimizing build and deployment cycles.

Regularly Clean Up Unused Images

Regularly purging unused Docker images helps prevent unnecessary resource consumption. Over time, a registry can accumulate redundant images, occupying space and potentially increasing storage costs. Implementing automated policies for image cleanup can prevent this, helping manage storage efficiently and ensuring the registry remains organized.

Unused images should be flagged and removed systematically, which improves registry operations by simplifying image management and reducing retrieval times. By maintaining a lean repository, organizations can achieve faster deployment cycles and increase the efficiency of their CI/CD pipelines.
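One way to flag cleanup candidates is an Artifactory Query Language (AQL) search for image manifests that have not been downloaded recently. The sketch below only lists candidates and deletes nothing. The repository name `docker-dev-local`, the 30-day window, the base URL, and the `ARTIFACTORY_TOKEN` variable are hypothetical, and the query fields should be verified against the AQL documentation for your Artifactory version. Without a token the script only prints the query.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical values for illustration; replace them with your own.
ARTIFACTORY_URL="${ARTIFACTORY_URL:-https://acme.jfrog.io/artifactory}"
REPO="${REPO:-docker-dev-local}"
MAX_AGE="${MAX_AGE:-30d}"

# Build an AQL query for manifest.json files (one per image tag)
# whose last download is older than the given relative time span.
aql_stale_manifests() {
  printf 'items.find({"repo": "%s", "name": "manifest.json", "stat.downloaded": {"$before": "%s"}})' \
    "$1" "$2"
}

# Send the query to the AQL search endpoint and print the JSON result.
find_stale_manifests() {
  curl -fsS -X POST \
    -H "Authorization: Bearer $ARTIFACTORY_TOKEN" \
    -H "Content-Type: text/plain" \
    -d "$(aql_stale_manifests "$REPO" "$MAX_AGE")" \
    "$ARTIFACTORY_URL/api/search/aql"
}

if [ -n "${ARTIFACTORY_TOKEN:-}" ]; then
  find_stale_manifests
else
  echo "ARTIFACTORY_TOKEN is not set; the AQL query would be:"
  aql_stale_manifests "$REPO" "$MAX_AGE"
  echo
fi
```

Images that have never been downloaded may not match this condition, so a production cleanup policy would likely need an additional rule based on creation or modification time.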

Monitor Repository Storage Usage

Regular monitoring helps identify trends and anomalies in Docker image storage, addressing potential issues before they impact performance. Artifactory dashboards and reports enable easier tracking of repository metrics such as image size, access frequency, and storage consumption.

Predictive analytics derived from storage monitoring enable agile resource allocation, ensuring sufficient capacity and supporting scaling efforts as needed. Proactive monitoring minimizes disruptions, enabling uninterrupted service delivery.
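Storage figures can also be collected from scripts for use in external dashboards. The following sketch requests Artifactory's storage summary over the REST API. The base URL and the `ARTIFACTORY_TOKEN` variable are hypothetical, the call may require administrator permissions, and the endpoint should be confirmed in the REST API documentation for your version. Without a token the script only prints the URL it would request.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical base URL for illustration; replace it with your own.
ARTIFACTORY_URL="${ARTIFACTORY_URL:-https://acme.jfrog.io/artifactory}"

# Build the storage summary URL, tolerating one trailing slash in the base URL.
storage_info_url() {
  printf '%s/api/storageinfo' "${1%/}"
}

# Fetch the storage summary as JSON.
fetch_storage_info() {
  curl -fsS \
    -H "Authorization: Bearer $ARTIFACTORY_TOKEN" \
    "$(storage_info_url "$ARTIFACTORY_URL")"
}

if [ -n "${ARTIFACTORY_TOKEN:-}" ]; then
  fetch_storage_info
else
  echo "ARTIFACTORY_TOKEN is not set; the request URL would be:"
  storage_info_url "$ARTIFACTORY_URL"
  echo
fi
```

Saving the JSON response on a schedule gives a simple history of storage consumption that can be compared over time.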

Implement Disaster Recovery Plans

A disaster recovery strategy encompasses regular data backups, redundancy, and recovery procedures to ensure business continuity. Establishing offsite or cloud-based backups shields data against onsite disasters, maintaining the integrity of Docker images stored in Artifactory.

Regularly testing recovery plans ensures preparedness and reliability, allowing swift restoration of services post-disruption. Best practices include setting up failover systems and periodic recovery drills to validate the efficacy of recovery protocols. By prioritizing disaster recovery, organizations protect their Docker assets, mitigate risks, and ensure operational resilience.

Keep Artifactory and Docker Clients Up to Date

Regular updates to Artifactory and Docker clients are necessary to maintain security and introduce new features. Updates often contain patches for vulnerabilities, hardening the protection of stored images. Staying current also keeps clients and the registry compatible with each other, reducing issues caused by outdated versions.

Automated update processes can simplify the maintenance of both Artifactory and Docker environments, minimizing manual intervention and downtime. By adopting a proactive update policy, organizations enhance operational stability and security posture.

Codefresh Artifactory Integration

Codefresh is a modern deployment solution built for GitOps and containers. It has built-in support for Docker registries hosted in Artifactory in a number of ways:

- An Artifactory Docker registry can be used as a target registry

- The native push step can tag and push images to the Docker registry hosted in Artifactory

- The images dashboard can show all container images stored in Artifactory

Beyond Artifactory integration, Codefresh helps you meet the continuous delivery challenge. Codefresh is a complete software supply chain to build, test, deliver, and manage software with integrations so teams can pick best-of-breed tools to support that supply chain.

Built on Argo, the world’s most popular and fastest-growing open source software delivery toolchain, Codefresh unlocks the full enterprise potential of Argo Workflows, Argo CD, Argo Events, and Argo Rollouts and provides a control-plane for managing them at scale.
