Planning to deploy Argo CD across a large number of Kubernetes clusters? In this article, we’ll cover the common deployment strategies and architectures, along with their pros and cons.

Guidelines for Scaling with Argo CD

First off, Argo CD has great support for scaling across several dimensions:

- Performance

- Security

- Usability

- Failover

Performance

Argo CD comes in two main flavors: standard Argo CD and Argo CD HA (high availability). The HA install deploys more replicas of scalable components such as the repo-server. Argo CD uses Redis as a cache for objects. This greatly improves performance without sacrificing reliability, because the cache can be discarded and rebuilt whenever needed. Argo CD HA deploys a minimum of 3 Redis replicas across 3 nodes and can be scaled up with additional nodes.

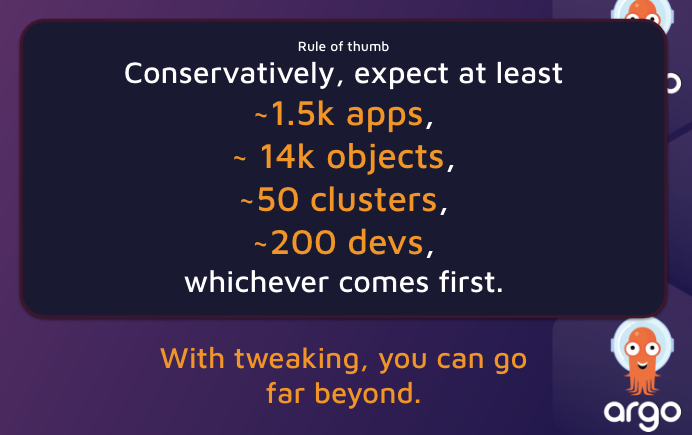

While Argo CD has many knobs and levers for improving performance, you can expect Argo CD HA to comfortably support the following without any tuning:

- 1,500 applications

- 14,000 objects

- 50 clusters

- 200 devs

This rule of thumb is deliberately conservative, because applications vary greatly in object count, manifest size, memory requirements for rendering manifests, and frequency of updates. It comes from the KubeCon talk “How Adobe Planned For Scale With Argo CD, Cluster API, And VCluster – Joseph Sandoval & Dan Garfield”. I encourage you to watch the talk for a variety of considerations as well as its testing methodology. Treat the guideline as “plan on at least X before you need to worry about tweaking anything.”
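To make the rule of thumb concrete, here is a minimal Python sketch that compares a planned instance against the figures above. The `PlannedInstance` class and `exceeded_dimensions` helper are hypothetical illustrations. Exceeding a figure only signals that you should budget time for tuning. It does not mean Argo CD will fail.

```python
from dataclasses import dataclass

# Rule-of-thumb capacity figures quoted above for an untuned Argo CD HA install.
GUIDELINE = {
    "applications": 1_500,
    "objects": 14_000,
    "clusters": 50,
    "developers": 200,
}


@dataclass
class PlannedInstance:
    """Hypothetical description of the load a planned instance must carry."""
    applications: int
    objects: int
    clusters: int
    developers: int


def exceeded_dimensions(plan: PlannedInstance) -> dict:
    """Return {dimension: planned/guideline ratio} for every dimension over the guideline."""
    over = {}
    for dimension, limit in GUIDELINE.items():
        ratio = getattr(plan, dimension) / limit
        if ratio > 1.0:
            over[dimension] = round(ratio, 2)
    return over


if __name__ == "__main__":
    plan = PlannedInstance(applications=2_400, objects=12_000, clusters=60, developers=150)
    # Applications and clusters exceed the rule of thumb, so plan time for tuning.
    print(exceeded_dimensions(plan))  # {'applications': 1.6, 'clusters': 1.2}
```

Because the guideline is conservative, a ratio slightly above 1.0 is a prompt to start testing rather than a hard limit.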

Of course, if you’re working with a giant Git repo that requires 5 GB just to check out, performance will suffer. The same is true if manifest generation is extremely complex. For example, an umbrella chart with hundreds of child applications may require memory tuning before any of these limits are reached.

Past a few thousand applications, Argo CD’s UI will start to slow down just because of the amount of rendering done client side.

Security

For a deep dive into the security concerns of scaling Argo CD instances, check out Scaling Argo CD Securely.

Argo CD has Role-Based Access Control and Single Sign-On. Combined, they make the security model quite robust. Argo CD also performs all manifest rendering in its repo-server component, which reduces the threat model. Manifest rendering is still a potential risk area for any GitOps operator. Helm, Kustomize, Jsonnet, and others are very powerful and essentially allow users to execute arbitrary code (more so with Jsonnet than the others). Any time you allow arbitrary code execution on a server, it’s an area of potential concern. This is one reason that, when building its SaaS Argo CD offering, Codefresh chose to run an Argo CD instance per user with vcluster for better isolation. Argo CD, like any GitOps operator, should not be considered safe for public multi-tenant use. A lot of work has been done to limit the potential avenues for abuse in manifest generation, but it should still be a consideration, especially in larger instances.

Usability

Argo CD is heralded for its amazing ease of use and extensibility. This remains true at scale, though there is potential for cross-talk between teams as they manage applications across Kubernetes clusters. For example, each application managed by an Argo CD instance must have a unique name, which leads to conventions like “app-staging” and “app-prod”.

Likewise, filters in the UI are powerful and persist as you move through the app, but they can’t be saved. Enterprising teams may turn to bookmarks as saved filters. In reality, though, having 2,000 people working off a single instance, each with their own apps, might be a bit much. Of course, with RBAC you can limit read access so there is less to wade through.
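Argo CD RBAC policies are written as CSV lines in the `argocd-rbac-cm` ConfigMap. `p` lines grant a subject an action on a resource and object (for applications, `<project>/<application>`), and `g` lines bind a group to a role. The sketch below is a simplified, hypothetical evaluator for such lines, scoping a team to its own project. Argo CD itself evaluates policies with Casbin and its own glob matching, so treat this as an approximation. The team, role, and project names are made up.

```python
from fnmatch import fnmatchcase

# Hypothetical policy in the CSV style of Argo CD's argocd-rbac-cm ConfigMap:
#   p, <subject>, <resource>, <action>, <object>, <effect>
#   g, <group>, <role>
POLICY_CSV = """
p, role:team-a, applications, get, team-a/*, allow
p, role:team-a, applications, sync, team-a/*, allow
p, role:team-a, applications, sync, team-a/*-prod, deny
g, team-a-devs, role:team-a
"""


def parse_policy(csv_text):
    """Split policy lines into permission rules and group-to-role bindings."""
    rules, bindings = [], {}
    for line in csv_text.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        if fields[0] == "p":
            rules.append(tuple(fields[1:6]))
        elif fields[0] == "g":
            bindings.setdefault(fields[1], set()).add(fields[2])
    return rules, bindings


def is_allowed(group, resource, action, obj, csv_text=POLICY_CSV):
    """Simplified check: a matching deny always wins; otherwise a matching allow grants access."""
    rules, bindings = parse_policy(csv_text)
    subjects = {group} | bindings.get(group, set())
    effects = {
        effect
        for subject, res, act, pattern, effect in rules
        if subject in subjects and res == resource and act == action and fnmatchcase(obj, pattern)
    }
    return "allow" in effects and "deny" not in effects


if __name__ == "__main__":
    print(is_allowed("team-a-devs", "applications", "get", "team-a/checkout-prod"))   # True
    print(is_allowed("team-a-devs", "applications", "sync", "team-a/checkout-prod"))  # False
    print(is_allowed("team-a-devs", "applications", "get", "team-b/billing"))         # False
```

Because team A has no rule matching `team-b/*`, team B’s applications simply don’t exist from team A’s point of view. That is what trims the list in a busy shared instance.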

Failover

The power of GitOps is the ability to bring up and change all applications and infrastructure with a single Git commit. But whatever is easy to stand up is generally easy to tear down. For example, consider a scenario where an admin updates the config management plugin for Kustomize and makes a mistake that causes all manifest generation to fail. Further, suppose the admin has previously set finalizers on all applications managed by Argo CD. Finalizers make it so that when an application is removed from Argo CD, the Kubernetes resources it manages are automatically deleted as well. This combination of configuration, compounded by a single small mistake in the config management tooling, could trigger the automatic deletion of every Kustomize application. Of course, recovery would be just as quick, assuming the applications themselves are well configured.

This consideration can be summed up as “blast radius”: what should the maximum blast radius be for a potential catastrophic failure? Argo CD is very reliable, and by far the most likely cause of failure is misconfiguration. Still, before you choose an architecture, you should carefully consider all of these factors.
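One way to gauge blast radius in practice is to audit your Application manifests for the settings that widen it. The sketch below assumes the manifests have already been loaded as dicts (for example, parsed from the YAML in your Git repo). The helper `blast_radius_report` is hypothetical. It relies on two real Argo CD settings:

- the `resources-finalizer.argocd.argoproj.io` finalizer, which makes deleting an Application cascade-delete its resources;
- `spec.syncPolicy.automated.prune`, which lets Argo CD delete resources that disappear from the rendered manifests.

```python
# Finalizer that makes deleting an Application cascade-delete the resources it manages.
CASCADE_FINALIZER = "resources-finalizer.argocd.argoproj.io"


def blast_radius_report(applications):
    """Group Application manifests (as dicts) by the settings that widen a failure's blast radius."""
    report = {"cascade_delete": [], "auto_prune": []}
    for app in applications:
        metadata = app.get("metadata", {})
        name = metadata.get("name", "<unnamed>")
        # Also matches suffixed variants of the finalizer name.
        if any(f == CASCADE_FINALIZER or f.startswith(CASCADE_FINALIZER + "/")
               for f in metadata.get("finalizers", [])):
            report["cascade_delete"].append(name)
        automated = (app.get("spec", {}).get("syncPolicy") or {}).get("automated") or {}
        if automated.get("prune", False):
            report["auto_prune"].append(name)
    return report


if __name__ == "__main__":
    apps = [
        {"metadata": {"name": "web-prod", "finalizers": [CASCADE_FINALIZER]},
         "spec": {"syncPolicy": {"automated": {"prune": True, "selfHeal": True}}}},
        {"metadata": {"name": "batch-staging"}, "spec": {}},
    ]
    print(blast_radius_report(apps))  # {'cascade_delete': ['web-prod'], 'auto_prune': ['web-prod']}
```

An application appearing in both lists is exactly the kind of resource the scenario above could wipe out.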

Comparing Argo CD Architectures

Very few companies settle on a single architecture. Instead, they pick the architecture that best matches each use case. Let’s look at the architectures available to Argo CD users.

Hub and Spoke

Definition: A single Argo CD instance connects and deploys to many Kubernetes clusters. The Argo CD instance forms the hub, and each Kubernetes cluster added forms a spoke.

| Pros | Cons |
| --- | --- |
| ✅ Easy management of a single Argo CD instance | ⛔ Single potential point of failure |
| ✅ Simple access and ease of use for all developers | ⛔ Lack of isolation for security |
| ✅ Simple disaster recovery | ⚠️ Kubernetes APIs must be accessible to the Argo CD instance |

When to use Hub and Spoke for Argo CD

Hub and spoke is usually the first stop for teams looking to operationalize Argo CD across many clusters. Argo CD supports high availability, and most components can be scaled with additional pods or tuned with additional threads or memory. Likewise, Argo CD supports role-based access control and SSO, so integrating and scaling additional users is generally simple.

This model also works very well for managing dev, staging, and production environments in a single instance. If you’re working on a small team where everyone can access all resources, this is a great model. Some companies choose to run fairly large hub-and-spoke instances. As the team grows, you should dedicate resources to managing Argo CD and making sure all components run smoothly.

A cluster’s Kubernetes API may not be accessible, for example because the cluster is hosted in a virtual private cloud or sits behind a firewall. Hub and spoke is still possible in that case with the addition of network tunnels. Several solutions exist, such as inlets or Google’s Connect gateway.
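In hub and spoke, the hub needs a registration for every spoke. Argo CD supports declarative cluster registration through Secrets labeled `argocd.argoproj.io/secret-type: cluster`. Each Secret holds the cluster’s `name`, `server` URL, and a JSON `config` with credentials. The minimal Python sketch below builds such a Secret. The helper name, the `argocd` namespace, and all values are assumptions for illustration. For a spoke behind a firewall, `server` would point at the tunnel endpoint.

```python
import json


def cluster_secret(name, server, bearer_token, ca_data_b64):
    """Build a declarative Argo CD cluster Secret so the hub can reach a spoke."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": f"cluster-{name}",
            "namespace": "argocd",
            # Argo CD treats Secrets with this label as cluster registrations.
            "labels": {"argocd.argoproj.io/secret-type": "cluster"},
        },
        "type": "Opaque",
        "stringData": {
            "name": name,
            # For a spoke behind a firewall this would be the tunnel endpoint.
            "server": server,
            # Connection details are stored as a JSON document in a string field.
            "config": json.dumps({
                "bearerToken": bearer_token,
                "tlsClientConfig": {"insecure": False, "caData": ca_data_b64},
            }),
        },
    }


if __name__ == "__main__":
    # Placeholder values; never commit real tokens in plain text.
    secret = cluster_secret("edge-eu-1", "https://tunnel.example.internal:6443", "<token>", "<base64-ca>")
    print(json.dumps(secret, indent=2))  # e.g. pipe into `kubectl apply -f -`
```

In a real setup the token would come from a secrets manager rather than being embedded in code or Git.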

When to avoid Hub and Spoke for Argo CD

This model comes with the largest blast radius: a critical failure could impact all connected clusters and apps. It is also less flexible for large numbers of users and will require performance tuning to keep running smoothly. Above a certain scale, companies should avoid this model if they deploy across multiple networks or have many independent or large teams.

Network tunnels are generally reliable but add a small amount of complexity.

Standalone

Definition: An Argo CD instance is installed on, and co-located with, the cluster it manages. Each cluster has its own Argo CD instance.

| Pros | Cons |
| --- | --- |
| ✅ Reliability improvement, as each cluster operates independently | ⛔ Complexity in management and updates |
| ✅ Isolation of concerns, better security | ⛔ Complexity in providing access to users |
| | ⚠️ Special considerations for disaster recovery |

When to use Standalone Argo CD Instances

Standalone Argo CD instances provide the greatest reliability and accessibility, with no external network access required for operation (aside from pulling container images, Git repos, and the like). Standalone is the de facto way to deploy to clusters at the edge, because each cluster operates completely independently. We’ve even seen air-gapped deployments of Argo CD where repos are updated via USB drive.

Using standalone Argo CD instances at scale is often paired with infrastructure management tools like Crossplane or Terraform to make setup and teardown more efficient. Another variation on this model uses a hub-and-spoke instance to manage “standalone” Argo CD instances on a great number of clusters.

When to avoid Standalone Argo CD Instances

Standalone instances do carry the burden of management overhead. If you have a single team with a simple staging -> production setup, it might not make sense to run a separate Argo CD for each cluster. Likewise, testing changes across instances can be more challenging. Most users adopt a canary-style approach to updating a fleet of instances.
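A canary-style fleet update boils down to splitting the instances into waves: upgrade one canary, verify it, then proceed in larger batches. The Python sketch below shows only that planning step. The `rollout_waves` helper, its default wave sizes, and the instance names are hypothetical. A real rollout would also gate each wave on health checks before continuing.

```python
import math


def rollout_waves(instances, canary_count=1, wave_fraction=0.25):
    """Split a fleet of Argo CD instances into upgrade waves: a canary wave first,
    then waves of roughly `wave_fraction` of the whole fleet each."""
    if not instances:
        return []
    canary_count = max(1, canary_count)
    waves = [instances[:canary_count]]
    remaining = instances[canary_count:]
    wave_size = max(1, math.ceil(len(instances) * wave_fraction))
    for start in range(0, len(remaining), wave_size):
        waves.append(remaining[start:start + wave_size])
    return waves


if __name__ == "__main__":
    fleet = [f"edge-{i:02d}" for i in range(1, 11)]  # hypothetical instance names
    waves = rollout_waves(fleet)
    print([len(wave) for wave in waves])  # [1, 3, 3, 3]
```

Keeping the canary wave small limits the blast radius of a bad upgrade to a single cluster.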

Split-Instance

Definition: A single management instance of Argo CD connects to and manages many clusters. Unlike hub and spoke, the components of Argo CD are split, with some components living on target clusters and networking generally provided via a tunnel.

This is a newer and more experimental model for managing Argo CD. Open-source implementations include Argo CD Agent and Open Cluster Management.

| Pros | Cons |
| --- | --- |
| ✅ Secure connections to K8s APIs behind firewalls | ⛔ Instances must be updated together, creating management complexity |
| ✅ Centralized configuration | ⛔ Central point of failure |
| ✅ Ease of user access | ⚠️ Semi-independent components require special care and have limited community support |
| | ⚠️ Components operate with some independence |

When to use Argo CD as a Split Instance

A split instance’s primary advantage is that it can deploy to and manage clusters in separate networks via a network tunnel. Argo CD itself is resilient, so no failures should occur if networking is lost. Updates simply wait until networking is restored.

There is a marginal potential networking benefit, since manifest rendering may happen on target clusters rather than on a central cluster. This benefit is small, however, and may be wiped out by increased traffic between the remote controllers and their parent Argo CD instance.

Some argue that putting Argo CD’s repo server on each cluster is valuable for scale. This isn’t a very compelling point on its own. On a hub-and-spoke cluster, the repo server can be scaled out with more replicas (and the application controller sharded), providing the same benefit without complex networking.
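Argo CD’s application controller can run as several replicas, each reconciling only the clusters assigned to its shard. The Python sketch below illustrates hash-based shard assignment, similar in spirit to the controller’s default (“legacy”) algorithm, which hashes a cluster identifier and takes the result modulo the replica count. It is a simplified illustration, not Argo CD’s code. The FNV-1a hash, the helper names, and the cluster IDs are assumptions for this example.

```python
FNV32_OFFSET_BASIS = 0x811C9DC5
FNV32_PRIME = 0x01000193


def fnv1a_32(data: bytes) -> int:
    """32-bit FNV-1a hash: XOR each byte in, then multiply by the FNV prime."""
    h = FNV32_OFFSET_BASIS
    for byte in data:
        h ^= byte
        h = (h * FNV32_PRIME) & 0xFFFFFFFF
    return h


def shard_for(cluster_id: str, replicas: int) -> int:
    """Map a cluster to a controller shard by hashing its ID."""
    return fnv1a_32(cluster_id.encode()) % replicas


def assign_shards(cluster_ids, replicas):
    """Group cluster IDs by the controller replica that would reconcile them."""
    shards = {i: [] for i in range(replicas)}
    for cluster_id in cluster_ids:
        shards[shard_for(cluster_id, replicas)].append(cluster_id)
    return shards


if __name__ == "__main__":
    clusters = [f"cluster-{i}" for i in range(8)]  # hypothetical cluster IDs
    for shard, members in assign_shards(clusters, replicas=3).items():
        print(shard, members)
```

Hash-based assignment needs no coordination between replicas. The trade-off is that changing the replica count reshuffles most clusters, and the load can be uneven when there are few clusters.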

When to avoid Argo CD as a Split Instance

Split instances are still relatively new and are considered somewhat experimental. Unless you have a compelling reason to use a centralized Argo CD instance to manage clusters on different networks, there is little reason to consider this model.

Control Plane

Definition: Argo CD instances are deployed in a mix of standalone and hub-and-spoke configurations. A control plane is added to manage them and roll their information up into a central place.

The most popular implementation of a control plane for Argo CD is Codefresh, which allows users to provision, manage, and operate Argo CD across networks, instances, and clusters.

| Pros | Cons |
| --- | --- |
| ✅ Centralized configuration | ⚠️ Requires additional software, which includes more potential parts to manage |
| ✅ Ease of user access | |
| ✅ Instances can be upgraded independently | |
| ✅ Secure behind-firewall access | |
| ✅ Stability in decentralization | |

When to use a Control Plane for Argo CD

The advantages and disadvantages of a control plane vary with the control plane used. For this comparison we’ll look at Codefresh, as it is the most popular and battle-tested control plane for Argo CD. Some of these considerations may also apply to other control planes.

Whenever you have more than one Argo CD instance, and often even with a single instance, there are significant advantages to using a control plane. For example, Codefresh automates repo configuration and organization, app creation, single sign-on, security updates, and much more. This not only improves the usability of Argo CD, but also dramatically simplifies the management overhead.

Further advantages appear when deploying to a large number of clusters or instances. Multi-tenancy is built into the control plane, with its own RBAC and account switching for better organization.

Again, in the case of Codefresh, the control plane is zero-trust. It does not require access to application repos or ingress access to connected instances. Instead, each connected Argo CD instance operates independently but provides metadata to the control plane to simplify operation. Codefresh also provides additional UI and dashboards with integrations into Git, security scanning tools, and much more. The cross-talk issues mentioned for hub and spoke are also reduced.

When to avoid a Control Plane for Argo CD

A control plane is essentially a meta layer, so it doesn’t act as a single point of failure for delivering software. However, users may not know where to go if the control plane goes down. The control plane is also an additional piece of software to manage. In the case of Codefresh, though, it also helps manage the Argo CD instances overall, so in practice there aren’t real downsides.

Other Considerations

Should you use Argo CD self-managed?

In Argo CD, the concept of an application is essentially three things:

- A source of truth for the desired state of an application (usually git)

- A target environment for that state

- Policy for how synchronization should occur

These applications can be anything, even Argo CD itself! Self-managing Argo CD is a very popular pattern. Admins should make sure that access controls limit who can modify the Argo CD application, and that a disaster recovery plan is in place.

Generally, a self-managed Argo CD can be restored with a single kubectl apply if needed, as long as the applications themselves are also in Git (see “Should you allow users to create applications via the Argo CD UI?” below). Check out Argo CD Autopilot for an example of a self-managed Argo CD setup with automation.
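The three parts of an application map directly onto the fields of an Argo CD `Application` resource (`spec.source`, `spec.destination`, and `spec.syncPolicy`). The minimal Python sketch below models them and renders the manifest as a dict. The usage example describes a self-managed Argo CD whose source is its own install config. The `AppSpec` class, helper name, repo URL, and path are hypothetical. `https://kubernetes.default.svc` is the in-cluster API address Argo CD uses for the cluster it runs in.

```python
import json
from dataclasses import dataclass


@dataclass
class AppSpec:
    """The three parts of an Argo CD application described above."""
    name: str
    repo_url: str           # source of truth (usually Git)
    path: str
    target_revision: str
    dest_server: str        # target environment
    dest_namespace: str
    automated: bool = True  # sync policy: sync automatically?
    prune: bool = False     # sync policy: delete resources removed from Git?


def to_application(spec, project="default"):
    """Render an AppSpec as an argoproj.io/v1alpha1 Application manifest (as a dict)."""
    app = {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": spec.name, "namespace": "argocd"},
        "spec": {
            "project": project,
            "source": {
                "repoURL": spec.repo_url,
                "path": spec.path,
                "targetRevision": spec.target_revision,
            },
            "destination": {"server": spec.dest_server, "namespace": spec.dest_namespace},
        },
    }
    if spec.automated:
        app["spec"]["syncPolicy"] = {"automated": {"prune": spec.prune, "selfHeal": True}}
    return app


if __name__ == "__main__":
    # Self-managed Argo CD: the application's source is Argo CD's own install config.
    argocd_app = AppSpec(
        name="argocd",
        repo_url="https://github.com/example-org/platform-config.git",  # hypothetical repo
        path="argocd",
        target_revision="main",
        dest_server="https://kubernetes.default.svc",  # the cluster Argo CD runs in
        dest_namespace="argocd",
    )
    print(json.dumps(to_application(argocd_app), indent=2))
```

Pruning defaults to off here as a cautious choice. A bad commit to the Argo CD application then cannot make Argo CD delete its own components. Committing the rendered manifest to Git is what makes the single kubectl apply restore possible.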

We recommend self-managed Argo CD.

Should you allow users to create applications via the Argo CD UI?

This is a touchy subject. One of the big benefits of Argo CD is its UI and ease of use. Most teams that use Argo CD start by deploying it and using the UI to create applications. However, applications created this way are stored only in etcd. Should the cluster be lost, all configuration for these applications would be lost with it. Of course, the desired state stored in Git would still be safe. What would be gone is where that state should be deployed and which synchronization policy to follow.

Because of this, we strongly recommend that you make sure application configurations are also stored in Git. In Codefresh, for example, the UI for creating applications commits resources directly to Git instead of creating applications through API calls.

The app-of-apps pattern and application sets can greatly simplify this as well.
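An ApplicationSet’s list generator takes a list of parameter sets and renders one Application per element from a template with `{{...}}` placeholders. This is how conventions like “app-staging” and “app-prod” can be generated rather than typed by hand. The Python sketch below mimics that expansion with simple placeholder substitution. It is not the ApplicationSet controller’s templating engine, which supports more features. The repo URL, cluster addresses, and parameter names are hypothetical.

```python
import re

# Matches {{param}} placeholders, in the style of ApplicationSet templates.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.-]+)\s*\}\}")


def render(value, params):
    """Recursively substitute {{param}} placeholders in strings, dicts and lists."""
    if isinstance(value, str):
        return PLACEHOLDER.sub(lambda m: str(params[m.group(1)]), value)
    if isinstance(value, dict):
        return {key: render(item, params) for key, item in value.items()}
    if isinstance(value, list):
        return [render(item, params) for item in value]
    return value


def expand(template, elements):
    """Produce one Application per element, like a list generator."""
    return [render(template, element) for element in elements]


if __name__ == "__main__":
    template = {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": "app-{{env}}", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {"repoURL": "https://github.com/example-org/app-config.git",
                       "path": "envs/{{env}}", "targetRevision": "main"},
            "destination": {"server": "{{server}}", "namespace": "app"},
        },
    }
    elements = [
        {"env": "staging", "server": "https://staging.example.internal:6443"},
        {"env": "prod", "server": "https://prod.example.internal:6443"},
    ]
    print([app["metadata"]["name"] for app in expand(template, elements)])  # ['app-staging', 'app-prod']
```

Because the template and the element list both live in Git, adding an environment becomes a one-line commit. The generated Applications never exist only in etcd.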

Should users provision and manage their own Argo CD instances?

Companies often end up with a proliferation of Argo CD instances, as each team may stand up and operate its own. There is nothing inherently bad about this, assuming every team manages its instance responsibly.

But as we’ve seen with sprawling, self-managed tools in the past, not every team does. Argo CD provides security patches for the three most recent minor versions (N through N-2) and generally releases one new minor version per quarter. That means any given Argo CD version has a supported lifespan shorter than a year. For this reason, we recommend leveraging a control plane. It can simplify the update and management overhead without limiting individual teams’ ability to operate their instances independently.
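The lifespan arithmetic is worth spelling out. With N through N-2 supported, a version released today becomes N-1 after the next release and N-2 after the one after that. It drops out of support when the third newer release ships. At a quarterly cadence that is about 3 × 3 = 9 months. The Python sketch below computes that date under those assumptions. A strict quarterly cadence is an idealization, since real release dates vary. The helper names are hypothetical.

```python
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date forward by whole months, returning the first day of the resulting month."""
    total = d.year * 12 + (d.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


def support_ends(release: date, cadence_months: int = 3, supported_versions: int = 3) -> date:
    """Month in which a version leaves the N..N-2 support window, i.e. when
    `supported_versions` newer minor releases have shipped at a fixed cadence."""
    return add_months(release, cadence_months * supported_versions)


if __name__ == "__main__":
    print(support_ends(date(2024, 2, 1)))  # 2024-11-01, roughly nine months of support
```

Every instance therefore needs at least one minor upgrade within a year just to stay patched. Across dozens of team-run instances, that upgrade burden is what a control plane helps absorb.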

Where can I learn more about how to run and use Argo CD?

We highly recommend our amazing GitOps certification which includes GitOps Fundamentals with Argo CD and GitOps at Scale with Argo CD. It will teach you:

- How to use Argo CD to deploy and manage applications

- How to use Argo Rollouts for Progressive Delivery

- How to take advantage of Application Sets and App-of-Apps

- How to model environments and changes

- How to organize your Git repos

- And so much more…

It is the world’s most popular and fastest-growing GitOps certification and has rave reviews. Check it out at https://learning.codefresh.io/

Conclusions

We’ll be sure to come back and update this post as the landscape evolves. In the meantime, it is the most comprehensive resource on the web for comparing these architectures. If you hear of other architectures, or would like to bounce ideas off us, please reach out! You can follow me on Twitter @todaywasawesome or Codefresh at @codefresh. DMs are open on both.

Deploy more and fail less with Codefresh and Argo