What Is Kubernetes in Production?

Kubernetes in production refers to the deployment and management of containerized applications using Kubernetes at a scale and with the reliability needed for business-critical operations. It involves orchestrating containers across multiple hosts, ensuring they run smoothly, scale as required, and remain resilient to failures.

Deploying Kubernetes in production is different from its use in development or testing environments. It requires an infrastructure management approach that includes automated deployments, scaling policies, service discovery, load balancing, and handling of stateful services. Stringent security protocols are needed to protect sensitive data and maintain regulatory compliance.

Given the complexity of managing a Kubernetes cluster at this level, organizations must have access to specialized knowledge and tools for production-grade deployments. Many organizations opt for managed Kubernetes services so they can outsource some of the responsibilities of running production environments.

The Importance of Kubernetes in Modern Infrastructure

Kubernetes has become a ubiquitous system in modern infrastructure due to its orchestration capabilities, enabling organizations to deploy, scale, and manage containerized applications. It can automate complex operational tasks, simplifying the management of microservices architectures and supporting cloud-native applications.

By abstracting the underlying infrastructure, Kubernetes enables a more agile development process. It allows teams to focus on building and deploying applications without worrying about the specifics of each host environment. This level of abstraction accelerates development cycles and increases the scalability and reliability of applications.

This is part of a series of articles about Kubernetes management.

Kubernetes in Production: Trends and Statistics

The adoption of Kubernetes in production environments has grown significantly, with various deployment sizes and types reflecting its versatility and complexity. According to the State of Production Kubernetes report by Dimensional Research:

- Deployment Sizes: The majority of enterprises have more than ten Kubernetes clusters, with 14% operating over a hundred clusters. This indicates large-scale adoption, with enterprises managing extensive containerized environments.

- Kubernetes Distributions: Organizations use multiple Kubernetes distributions to meet diverse needs. The report found that 83% of respondents use two or more distributions, with some using more than ten. These include service distributions (like AWS EKS-D), self-hosted distributions (like Red Hat OpenShift), and edge-specific distributions (like K3s and MicroK8s).

- Edge Computing: Kubernetes is increasingly popular for edge computing, with 93% of respondents engaging in edge initiatives. However, only 7% have fully deployed Kubernetes at the edge, while another 13% have partially deployed it and 29% are in the pilot phase. These figures point to strong interest in Kubernetes at the edge, with most adoption still at an experimental stage.

- Complexity: 98% of stakeholders report challenges in operating Kubernetes. These include managing diverse environments, setting enterprise guardrails, and dealing with interoperability issues. The need for specialized operations talent and the high demand for automation were also highlighted as critical areas requiring improvement.

Challenges of Running Kubernetes in Production

Deploying Kubernetes in production introduces several environment-specific challenges.

Complexity of Setup and Management

The initial setup of a Kubernetes cluster in production requires a deep understanding of network configurations, storage systems, security protocols, and container orchestration principles. Administrators must plan the cluster architecture, considering high availability, disaster recovery, and scalability.

Integrating Kubernetes with existing IT infrastructure requires attention to compatibility and performance optimization. Once deployed, the day-to-day management of a Kubernetes cluster presents ongoing challenges, including monitoring resource usage, managing deployments, scaling applications based on demand, and troubleshooting potential issues.

Networking Issues

Another challenge is ensuring seamless communication between containers across multiple hosts, while maintaining the security and integrity of the network. Issues such as service discovery, load balancing, and network policies require careful configuration to support the operational needs and security requirements of containerized applications.

Additionally, integrating Kubernetes networking with existing corporate networks can present compatibility challenges. The dynamic nature of container orchestration also makes it hard to maintain persistent network connections. As containers are created and destroyed, their IP addresses change frequently, so clients must rely on DNS-based service discovery and traffic routing rather than fixed addresses.
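Stable DNS names are what let clients ignore changing pod IP addresses. As a minimal sketch, assuming the default cluster domain `cluster.local` (a cluster can be configured with a different one), the in-cluster name of a Service follows a fixed pattern. The function name and the example service and namespace are hypothetical.

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name for a Service.

    Assumes the conventional <service>.<namespace>.svc.<cluster-domain> layout.
    """
    for label in (service, namespace):
        # A single DNS label cannot be empty or contain dots.
        if not label or "." in label:
            raise ValueError(f"invalid DNS label: {label!r}")
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # Hypothetical Service "orders" in namespace "shop".
    print(service_fqdn("orders", "shop"))
```

A client that connects to this name keeps working when the pods behind the Service are replaced, because only the name is fixed, not the pod addresses.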

Security Concerns

As applications scale and evolve, maintaining a secure configuration becomes challenging. Vulnerabilities can arise from misconfigured containers, inadequate network policies, or outdated images, exposing sensitive data to potential breaches. The shared resources model of Kubernetes means that a compromise in one container could affect others in the same cluster.

The automated and highly interconnected environment of Kubernetes requires rigorous access controls and authentication mechanisms to prevent unauthorized access.

Storage Scalability and Reliability

Traditional storage solutions often struggle to keep pace with the dynamic nature of containerized environments, where pods may be frequently created and destroyed. This can lead to issues with persistent data storage, as the physical storage must be allocated and reclaimed without data loss or corruption.

Ensuring consistent performance across various storage systems becomes increasingly difficult as workloads grow. Failures in the storage layer can also lead to downtime or data loss. Ensuring high availability of data across multiple zones and managing backup and recovery processes are complex tasks.

Resource Management

Managing CPU, memory, and network resources across a cluster involves constant monitoring and adjustment to ensure optimal performance. However, the large volume of containers and microservices running in a typical production environment complicates this task. Administrators must balance resource allocations to prevent resource contention and avoid over-provisioning.
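To make the balancing act concrete, here is a small sketch that compares the CPU requested by pods with the CPU a node can allocate. It assumes Kubernetes-style CPU quantities ("500m" for half a core, "2" for two cores). The function names and the sample figures are illustrative, not taken from any particular cluster.

```python
def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as "500m" or "2" into cores."""
    if quantity.endswith("m"):
        # The "m" suffix means millicores: 1000m equals one core.
        return int(quantity[:-1]) / 1000
    return float(quantity)


def cpu_headroom(allocatable: str, requests: list[str]) -> float:
    """Return the unrequested CPU cores on a node.

    A negative result means the requests exceed what the node can allocate.
    """
    return parse_cpu(allocatable) - sum(parse_cpu(r) for r in requests)


if __name__ == "__main__":
    # Hypothetical node with 4 allocatable cores and three pods.
    print(cpu_headroom("4", ["500m", "1", "250m"]))
```

Large positive headroom across many nodes suggests over-provisioning, while headroom near zero leaves little room for new pods to be scheduled.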

Another challenge is predicting and handling traffic spikes or sudden increases in demand. Kubernetes provides mechanisms like the Horizontal Pod Autoscaler (HPA) to automatically scale resources, but setting appropriate thresholds and metrics for scaling often requires fine-tuning based on empirical data. Because autoscaling reacts to metrics that have already been observed, scaling can still lag behind real-time demand.
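The core of the HPA calculation is a simple ratio: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below is a simplified illustration of that rule, not the controller's actual code. The 10% tolerance matches the documented default, while the replica bounds and sample values are assumptions chosen for the example.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Approximate the HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    # Small deviations from the target are ignored to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

The target value is the main tuning knob. A lower target scales out earlier at the cost of more idle capacity, and a higher target saves resources but leaves less buffer for spikes.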

Key Practices for a Production-Ready Kubernetes Stack

Here are some of the measures that organizations can take to ensure the successful deployment of Kubernetes in production.

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) involves managing and provisioning Kubernetes clusters through code rather than manual processes. It enables teams to automate the setup and maintenance of infrastructure, ensuring consistency and reducing the likelihood of human error. IaC also supports agile development with quick provisioning of testing or staging environments that mirror production.

By defining infrastructure using code, organizations can apply version control practices, making it easier to track changes, roll back configurations, and enhance collaboration among team members. IaC tools like Terraform and Pulumi allow for the deployment of Kubernetes clusters alongside components such as networking configurations, storage solutions, and security policies.
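The idea of defining infrastructure in code can be illustrated without any specific IaC tool. The following sketch is plain Python, not Terraform or Pulumi. It generates a Namespace and a ResourceQuota manifest from a few parameters, so that staging and production are built from the same definition. The function name, environment names, and quota values are hypothetical.

```python
import json


def namespace_with_quota(env: str, cpu: str, memory: str) -> list[dict]:
    """Return Namespace and ResourceQuota manifests for one environment."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": env, "labels": {"environment": env}},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{env}-quota", "namespace": env},
        "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory}},
    }
    return [namespace, quota]


if __name__ == "__main__":
    # The same definition produces both environments.
    for env in ("staging", "production"):
        print(json.dumps(namespace_with_quota(env, "8", "16Gi"), indent=2))
```

Because the output is derived entirely from the inputs, the generating code can be reviewed and versioned like any other change.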

Monitoring and Centralized Logging

By implementing comprehensive monitoring, teams can gain insights into resource usage, application performance, and system health, enabling proactive identification and resolution of issues. Tools like Prometheus for monitoring and Grafana for visualization are widely used to track metrics across Kubernetes clusters, allowing developers to set up dashboards.

Centralized logging aggregates logs from all components of the application stack into a single location. This aids in troubleshooting issues, understanding system behavior, and maintaining security compliance. Solutions like Fluentd or Filebeat can collect logs from containers and nodes, forwarding them to a centralized logging platform like Elasticsearch or Grafana Loki.
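Log collectors are easier to work with when applications emit structured logs. As a minimal sketch, assuming the application writes one JSON object per line to standard output and a collector on the node picks the lines up, a formatter built on the standard library could look like this. The field names and the logger name are arbitrary choices for the example.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    # Hypothetical service name.
    build_logger("checkout").info("order %s placed", 42)
```

Keeping each entry on one line means the logging platform can parse and index the fields without multi-line handling.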

Centralized Ingress Controller with SSL Certificate Management

A centralized Ingress Controller in Kubernetes simplifies the management of incoming traffic, providing unified access to applications in the cluster. By deploying a single Ingress Controller, such as NGINX or HAProxy, organizations can route external requests to the right services based on predefined rules. A single entry point also makes it possible to apply global policies like rate limiting and authentication across all services.

Incorporating SSL certificate management with an Ingress Controller enhances security by automating the process of obtaining and renewing SSL/TLS certificates. Tools like cert-manager work alongside Ingress Controllers to automatically issue and renew certificates through ACME providers like Let’s Encrypt.

Role-Based Access Control (RBAC)

Role-Based Access Control (RBAC) in Kubernetes enforces the principle of least privilege by assigning permissions based on users’ roles within an organization. This allows administrators to control who can access resources and what actions they can perform.

RBAC policies are defined through roles and role bindings, which specify permissions granted to users or groups for interacting with the Kubernetes API. Integrating RBAC with Identity and Access Management (IAM) improves security by centralizing authentication and authorization. This allows for granular access control, reducing the risk of unauthorized access or configuration changes.
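To show how a role's rules translate into allowed actions, the sketch below defines a Role manifest as a Python dictionary and evaluates requests against it. The evaluation is deliberately simplified: it ignores `resourceNames`, subresources, and role bindings, and is not how the API server is implemented. The role name, namespace, and helper names are hypothetical.

```python
# A Role that only permits reading pods in one namespace.
POD_READER = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"namespace": "shop", "name": "pod-reader"},
    "rules": [
        {"apiGroups": [""], "resources": ["pods"],
         "verbs": ["get", "list", "watch"]},
    ],
}


def _matches(allowed: list[str], value: str) -> bool:
    """A rule field matches on an exact value or the "*" wildcard."""
    return "*" in allowed or value in allowed


def is_allowed(role: dict, api_group: str, resource: str, verb: str) -> bool:
    """Return True if any rule in the role permits the request.

    Rules are purely additive: anything not explicitly allowed is denied.
    """
    return any(
        _matches(rule["apiGroups"], api_group)
        and _matches(rule["resources"], resource)
        and _matches(rule["verbs"], verb)
        for rule in role["rules"]
    )


if __name__ == "__main__":
    print(is_allowed(POD_READER, "", "pods", "list"))
    print(is_allowed(POD_READER, "", "pods", "delete"))
```

Starting from narrow roles like this one and adding verbs only when needed is one way to keep permissions aligned with least privilege.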

GitOps Deployments

GitOps deployments use version control systems, such as Git, for Kubernetes cluster management and application delivery. This approach treats Git repositories as the source of truth for the desired state of infrastructure and applications. Because every change is a versioned commit that can be reviewed before it is applied, automating deployments through Git gives teams an audit trail and a repeatable process, which improves both reliability and security.

Tools like Argo CD automate the synchronization between the Git repository and the Kubernetes cluster, ensuring that changes made in Git are reflected in the cluster. This method supports continuous delivery practices by allowing developers to use merge/pull requests for deploying applications or updating configurations. It also simplifies rollback to previous states.
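Conceptually, synchronization comes down to comparing the desired state in Git with the live state in the cluster and acting on the difference. The following sketch illustrates that comparison only. It is not Argo CD's algorithm, and the resource names and specs are invented for the example.

```python
def plan_sync(desired: dict[str, dict],
              live: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired and live resources, keyed by resource name.

    Returns the names to create, update, and delete so that the live
    state converges on the desired state.
    """
    create = sorted(desired.keys() - live.keys())
    delete = sorted(live.keys() - desired.keys())
    update = sorted(
        name for name in desired.keys() & live.keys()
        if desired[name] != live[name]
    )
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    # Hypothetical state: Git declares two apps, the cluster runs two.
    desired = {"web": {"image": "web:2"}, "api": {"image": "api:1"}}
    live = {"web": {"image": "web:1"}, "old-job": {"image": "job:1"}}
    print(plan_sync(desired, live))
```

Rollback follows from the same mechanism: reverting a commit changes the desired state, and the next comparison produces the actions needed to return the cluster to it.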

Secret Management

Secret management in Kubernetes is critical for application security: sensitive information like passwords, tokens, and keys must be stored and handled securely. By default, Kubernetes Secrets are stored unencrypted in the cluster's underlying data store (etcd), so additional configuration or tooling is needed. Integrating a secret management solution helps ensure that secrets are encrypted at rest and in transit within the Kubernetes ecosystem.

Tools like HashiCorp Vault or cloud provider services such as AWS Secrets Manager and Azure Key Vault provide centralized secret stores, isolating secrets from application code and minimizing direct access. Secrets can then be injected into pods dynamically, so applications read them at runtime without hardcoding sensitive information and without exposing them to unauthorized users or systems.
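From the application's point of view, runtime injection usually means reading a secret from a mounted file or an environment variable. The sketch below assumes a hypothetical mount directory, `/var/run/secrets/app`, and a naming convention in which the file `db-password` corresponds to the variable `DB_PASSWORD`. Both are illustrative choices, not a Kubernetes standard.

```python
import os
from pathlib import Path


def read_secret(name: str, mount_dir: str = "/var/run/secrets/app") -> str:
    """Read a secret at runtime instead of hardcoding it.

    Looks for a file mounted into the pod first, then falls back to an
    environment variable derived from the secret name.
    """
    path = Path(mount_dir) / name
    if path.is_file():
        return path.read_text(encoding="utf-8").strip()
    env_key = name.upper().replace("-", "_")
    value = os.environ.get(env_key)
    if value is None:
        raise KeyError(f"secret {name!r} not found as file or ${env_key}")
    return value


if __name__ == "__main__":
    # Simulate an injected environment variable for the demo.
    os.environ.setdefault("DB_PASSWORD", "example-only")
    print(len(read_secret("db-password")))
```

Preferring the mounted file lets a rotated secret be picked up on the next read, whereas environment variables are fixed when the container starts.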

Backup and Disaster Recovery

Backup and disaster recovery strategies help protect against data loss and ensure business continuity. A backup solution allows organizations to quickly recover from unplanned outages, human errors, or malicious attacks. It regularly captures snapshots of the cluster state, including configurations, persistent volumes, and application data, storing them securely off-site or in a separate environment.

Disaster recovery planning helps restore normal operations with minimal downtime. It includes predefined recovery objectives, automated restoration processes, and regular drills to test the recovery plan. Tools that support application-consistent backups can ensure that applications are restored to a usable state without data corruption or loss.
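One common recovery objective is the recovery point objective (RPO), the maximum age of data an organization accepts losing. As a small sketch under that assumption, the check below flags when the most recent backup is older than the RPO. The function name and the four-hour objective are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def rpo_violated(last_backup: datetime, rpo: timedelta,
                 now: Optional[datetime] = None) -> bool:
    """Return True if the latest backup is older than the RPO allows."""
    now = now or datetime.now(timezone.utc)
    return now - last_backup > rpo


if __name__ == "__main__":
    # Hypothetical: last backup five hours ago, four-hour objective.
    last = datetime.now(timezone.utc) - timedelta(hours=5)
    print(rpo_violated(last, timedelta(hours=4)))
```

A check like this can run on a schedule and raise an alert, so that a silently failing backup job is noticed before a restore is needed.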

Managing Production Kubernetes Deployments with Codefresh

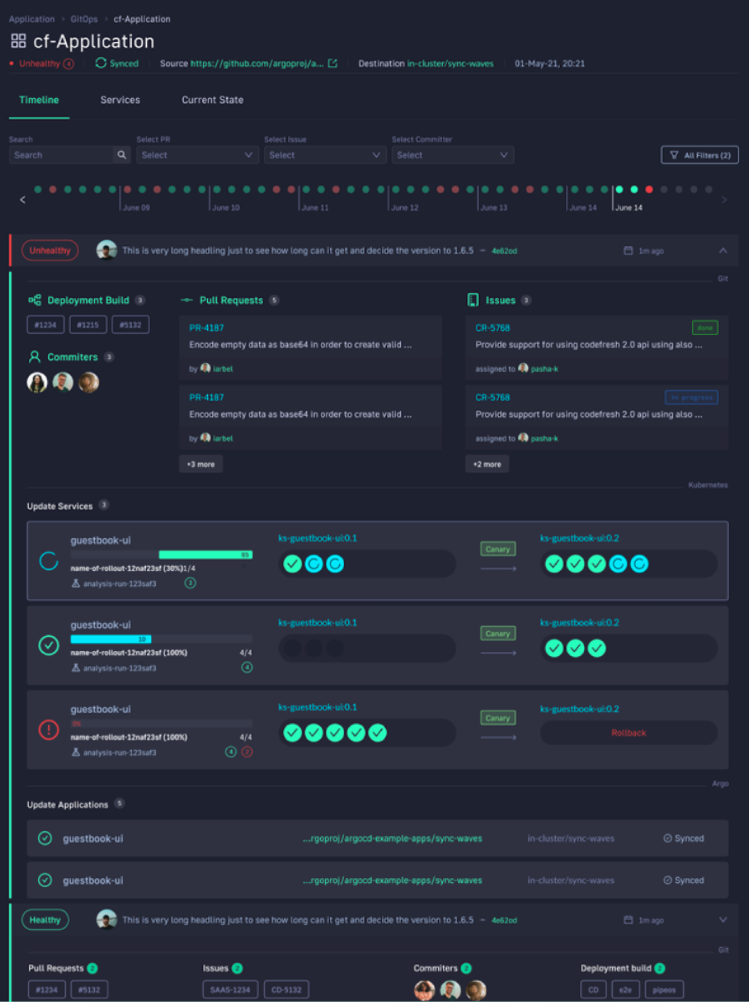

The Codefresh Software Delivery Platform, powered by Argo, lets you answer many important questions within your organization, whether you’re a developer or a product manager, for both production and non-production environments. For example:

- What features are deployed right now in any of your environments?

- What features are waiting in Staging?

- What features were deployed last Thursday?

- Where is feature #53.6 in our environment chain?

What’s great is that you can answer all of these questions by viewing one single dashboard. Our applications dashboard shows:

- Services affected by each deployment

- The current state of Kubernetes components

- Deployment history, including a log of who deployed what and when, and the pull request or Jira ticket associated with each deployment

This not only helps your developers view and better understand your deployments, but also lets the business answer important questions within the organization. For example, if you are a product manager, you can see whether and when a new feature was deployed and who deployed it.

Learn more about the Codefresh Software Delivery Platform
