GitOps is a set of best practices that build upon the foundation of Infrastructure as Code (IaC) and extend that approach by using Git as the source of truth for Kubernetes configuration. These best practices are the driving force behind new Kubernetes deployment tools such as Argo CD and Flux, as well as the Codefresh enterprise deployment platform.

Adopting GitOps in a Kubernetes environment is not straightforward when it comes to secret management. On one hand, GitOps says that the single source of truth for everything (including secrets) should be Git. On the other hand, organizations have traditionally kept their secrets in external systems, either on their cloud provider or in dedicated solutions such as HashiCorp Vault.

The big challenge is how to reconcile GitOps practices with traditional secret solutions, and how to inject secrets with Kubernetes deployment tools while still managing all secrets securely.

In this article, we will see how to inject secrets from AWS Secrets Manager into Codefresh/Argo CD applications.

Solution overview

Argo CD is a Kubernetes controller that deploys applications from Git repositories. It is one of the fastest-growing CNCF projects. Codefresh is an enterprise deployment platform that is powered by Argo CD (as well as the other Argo projects). Codefresh offers enterprise Argo CD installations as Codefresh Runtimes that can run either in the cloud or on customer premises.

The Argo CD Vault Plugin is an add-on that allows Argo CD to fetch secrets from external sources. As the name implies, it works with HashiCorp Vault, but it also supports other backends such as IBM Cloud Secrets Manager, SOPS, and of course, AWS Secrets Manager.

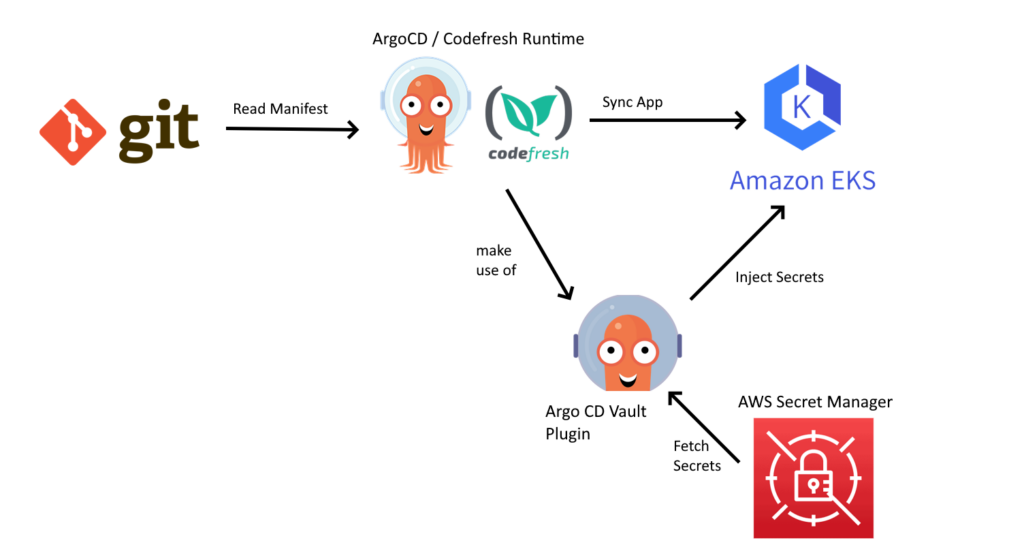

Here is the overall architecture of the solution for Amazon Elastic Kubernetes Service (EKS):

Our goal is to pass secrets stored in AWS Secrets Manager to our Kubernetes application.

The steps we are going to take are the following:

- Install the Argo CD Vault plugin in Argo CD

- Define our secrets in AWS Secrets Manager

- Set up a Kubernetes application to retrieve secrets during a deployment with Argo CD

Installing the Argo CD Vault plugin in an existing installation

First things first. We need to install the Argo CD Vault plugin in our Codefresh runtime. There are two choices for installing it:

- Modify the Helm values file for the GitOps runtime installation and install the plugin along with Argo CD during the initial setup. This is a good option if you know ahead of time that you will need it.

- Patch an existing Argo CD installation to add the plugin on the fly. Maybe you did not think you would need it when you installed your first runtime. This assumes that you have configured the Hybrid GitOps runtime as an Argo CD application in the Codefresh UI.

The two approaches are very similar, and the changes translate easily from one values file to the other. We will follow the second approach, as we assume most readers already have an existing Codefresh installation.

Therefore, the solution is to modify the runtime values.yaml file in the Shared Configuration repository (ISC). The file is located at resources/<RUNTIME_NAME>chart/values.yaml

If you want to use an additional tool for manifest generation, you need a Config Management Plugin (CMP). The Argo CD "repo server" component is in charge of building Kubernetes manifests from source files in a Helm, OCI, or Git repository. When a config management plugin is correctly configured, the repo server can delegate the task of building manifests to the plugin.
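With the sidecar model used in this article, a plugin is described by a ConfigManagementPlugin definition that the sidecar reads from /home/argocd/cmp-server/config/plugin.yaml. For the Vault plugin, it should look roughly like the sketch below. In our setup, we do not write it by hand: the Helm chart generates it from the configs.cmp.plugins values into the argocd-cmp-cm ConfigMap, so treat this as an illustration of what the repo server ends up running rather than a file to commit.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ConfigManagementPlugin
metadata:
  # Applications select the plugin by this name (spec.source.plugin.name)
  name: argocd-vault-plugin
spec:
  # Command the repo server delegates manifest generation to; it runs
  # in the application's source directory, hence "./"
  generate:
    command: ["/usr/local/bin/argocd-vault-plugin"]
    args: ["generate", "./"]
```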

To do this, we will add a sidecar container to the repo-server pod. We need to:

- Install the required tools during the pod initialization phase

- Add commands to run during the generation of the manifests at runtime

All of these changes go into the resources/<RUNTIME_NAME>chart/values.yaml file.

Its contents may vary depending on the ingress and other options chosen at runtime installation time. In our example:

- Our runtime is named csdp

- We used nginx as our ingress

- We pass a secret containing an example Git token

In our case, the default values.yaml file looks like this:

```yaml
gitops-runtime:
  global:
    codefresh:
      accountId: 600abcdef123456789
      userToken:
        secretKeyRef:
          name: codefresh-user-token
          key: token
    runtime:
      ingress:
        className: nginx
        enabled: true
        hosts:
          - lrcsdp.mydomain.com
      name: csdp
```

As we are modifying an existing Argo CD installation, we will create an argo-cd block under gitops-runtime.

So let's add a new sidecar container (and an init container that downloads the plugin) to the repo-server pod:

```yaml
gitops-runtime:
  argo-cd:
    repoServer:
      # -- Additional containers to be added to the repo server pod
      extraContainers:
        - name: argocd-vault-plugin
          command:
            - "/var/run/argocd/argocd-cmp-server"
          image: alpine/k8s:1.24.16
          securityContext:
            runAsNonRoot: true
            runAsUser: 999
          env:
            - name: AVP_TYPE
              value: awssecretsmanager
            - name: AWS_REGION
              value: us-east-1
          volumeMounts:
            - mountPath: /var/run/argocd
              name: var-files
            - mountPath: /home/argocd/cmp-server/plugins
              name: plugins
            # Remove this volumeMount if you've chosen to bake the config file into the sidecar image.
            - mountPath: /home/argocd/cmp-server/config/plugin.yaml
              subPath: argocd-vault-plugin.yaml
              name: argocd-cmp-cm
            - name: custom-tools
              mountPath: /usr/local/bin/argocd-vault-plugin
              subPath: argocd-vault-plugin
            # Starting with v2.4, do NOT mount the same tmp volume as the repo-server container. The filesystem separation helps
            # mitigate path traversal attacks.
            - mountPath: /tmp
              name: cmp-tmp
      # -- Init containers to add to the repo server pods
      initContainers:
        - name: get-avp
          image: alpine:3.8
          env:
            - name: AVP_VERSION
              value: "1.14.0"
          command: [sh, -c]
          args:
            - |
              wget -O argocd-vault-plugin https://github.com/argoproj-labs/argocd-vault-plugin/releases/download/v${AVP_VERSION}/argocd-vault-plugin_${AVP_VERSION}_linux_amd64
              chmod +x argocd-vault-plugin
              mv argocd-vault-plugin /custom-tools/
              ls -ail /custom-tools/
          volumeMounts:
            - mountPath: /custom-tools
              name: custom-tools
      # -- Additional volumes to the repo server pod
      volumes:
        - name: custom-tools
          emptyDir: {}
        - name: cmp-tmp
          emptyDir: {}
        - name: argocd-cmp-cm
          configMap:
            name: argocd-cmp-cm
      # -- Additional volumeMounts to the repo server main container
      volumeMounts: {}
```

Let's look at this file in more detail:

- We create a new volume, custom-tools

- In the get-avp init container, we download argocd-vault-plugin, using an environment variable for the version (to make future upgrades easier), and copy it to that volume

- We mount the downloaded binary from the volume into the sidecar container

- We add a couple of environment variables to configure the AWS region and the type of secret backend (AVP_TYPE)

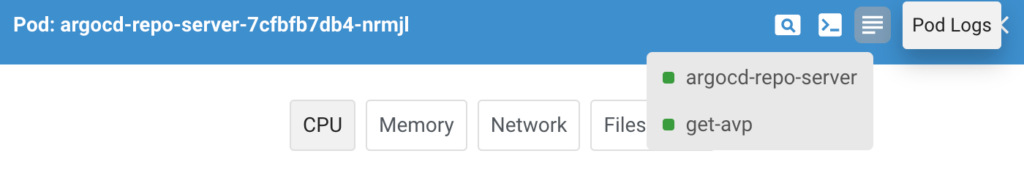

Note: the name you give the init container (get-avp) is the name under which its output appears in the pod logs (as seen in Lens).

Now let's register the plugin command, and annotate the repo server service account with an IAM role (IRSA) that has permission to access and decrypt the AWS secrets. The role is attached through the service account because EKS injects the role credentials into every container of the pods that use it, including our sidecar:

```yaml
gitops-runtime:
  argo-cd:
    configs:
      # ConfigMap for Config Management Plugins
      # Ref: https://argo-cd.readthedocs.io/en/stable/operator-manual/config-management-plugins/
      # based on https://www.cloudadmins.org/argo-vault-plugin-avp/
      cmp:
        # -- Create the argocd-cmp-cm configmap
        create: true
        # -- Plugin yaml files to be added to argocd-cmp-cm
        plugins:
          argocd-vault-plugin:
            generate:
              command: ["/usr/local/bin/argocd-vault-plugin"]
              args: ["generate", "./"]
    repoServer:
      serviceAccount:
        # -- IAM role assumed by the repo server pod (IRSA); the plugin sidecar inherits it
        annotations:
          eks.amazonaws.com/role-arn: 'arn:aws:iam::<ACCOUNT_ID>:role/<ROLE_NAME>'
```

This concludes the changes required to add the Vault plugin to Argo CD and use it.

After all these changes, your values.yaml file should look like this:

```yaml
gitops-runtime:
  global:
    codefresh:
      accountId: 600abcdef123456789
      userToken:
        secretKeyRef:
          name: codefresh-user-token
          key: token
    runtime:
      ingress:
        className: nginx
        enabled: true
        hosts:
          - lrcsdp.mydomain.com
      name: csdp
  argo-cd:
    configs:
      cmp:
        # -- Create the argocd-cmp-cm configmap
        create: true
        # -- Plugin yaml files to be added to argocd-cmp-cm
        plugins:
          argocd-vault-plugin:
            generate:
              command: ["/usr/local/bin/argocd-vault-plugin"]
              args: ["generate", "./"]
    ## Repo Server
    repoServer:
      serviceAccount:
        # -- IAM role assumed by the repo server pod (IRSA); the plugin sidecar inherits it
        annotations:
          eks.amazonaws.com/role-arn: 'arn:aws:iam::<ACCOUNT_ID>:role/<ROLE_NAME>'
      # -- Additional containers to be added to the repo server pod
      ## Ref: https://argo-cd.readthedocs.io/en/stable/operator-manual/config-management-plugins/
      ## Note: Supports use of custom Helm templates
      extraContainers:
        - name: argocd-vault-plugin
          command:
            - "/var/run/argocd/argocd-cmp-server"
          image: alpine/k8s:1.24.16
          securityContext:
            runAsNonRoot: true
            runAsUser: 999
          env:
            - name: AVP_TYPE
              value: awssecretsmanager
            - name: AWS_REGION
              value: us-east-1
          volumeMounts:
            - mountPath: /var/run/argocd
              name: var-files
            - mountPath: /home/argocd/cmp-server/plugins
              name: plugins
            # Remove this volumeMount if you've chosen to bake the config file into the sidecar image.
            - mountPath: /home/argocd/cmp-server/config/plugin.yaml
              subPath: argocd-vault-plugin.yaml
              name: argocd-cmp-cm
            - name: custom-tools
              mountPath: /usr/local/bin/argocd-vault-plugin
              subPath: argocd-vault-plugin
            # Starting with v2.4, do NOT mount the same tmp volume as the repo-server container. The filesystem separation helps
            # mitigate path traversal attacks.
            - mountPath: /tmp
              name: cmp-tmp
      # -- Init containers to add to the repo server pods
      initContainers:
        - name: get-avp
          image: alpine:3.8
          env:
            - name: AVP_VERSION
              value: "1.14.0"
          command: [sh, -c]
          args:
            - |
              wget -O argocd-vault-plugin https://github.com/argoproj-labs/argocd-vault-plugin/releases/download/v${AVP_VERSION}/argocd-vault-plugin_${AVP_VERSION}_linux_amd64
              chmod +x argocd-vault-plugin
              mv argocd-vault-plugin /custom-tools/
              ls -ail /custom-tools/
          volumeMounts:
            - mountPath: /custom-tools
              name: custom-tools
      # -- Additional volumes to the repo server pod
      volumes:
        - name: custom-tools
          emptyDir: {}
        - name: cmp-tmp
          emptyDir: {}
        - name: argocd-cmp-cm
          configMap:
            name: argocd-cmp-cm
      # -- Additional volumeMounts to the repo server main container
      volumeMounts: {}
```

Once the file is committed to your Shared Configuration repository, Argo CD will deploy the changes to your environment and the plugin will be ready to use.

Fetching secrets from AWS Secrets Manager in a GitOps deployment

Let's check that everything works as expected using a basic Jenkins deployment.

The first thing we need to do is to instruct an Argo CD application to use the plugin, referring to it by the name defined under configs.cmp.plugins in values.yaml (argocd-vault-plugin):

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: jenkins
spec:
  project: dev
  destination:
    namespace: dev
    server: https://kubernetes.default.svc
  source:
    path: ./manifests/jenkins-aws-secret/
    repoURL: https://github.com/lrochette/csdp_applications.git
    targetRevision: HEAD
    plugin:
      name: argocd-vault-plugin
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```

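Then we can simply add a Secret manifest that ties the AWS secret to a Kubernetes secret. The example below creates a Kubernetes secret named jenkins that takes its value from AWS: the avp.kubernetes.io/path annotation points to the AWS secret jenkins/secrets, and the plugin replaces the <pwd> placeholder with the value of the pwd key stored in that secret.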

```yaml
kind: Secret
apiVersion: v1
metadata:
  name: jenkins
  annotations:
    avp.kubernetes.io/path: jenkins/secrets
  labels:
    app.kubernetes.io/instance: aws-secret
    owner: laurent
type: Opaque
stringData:
  jk-password: <pwd>
```

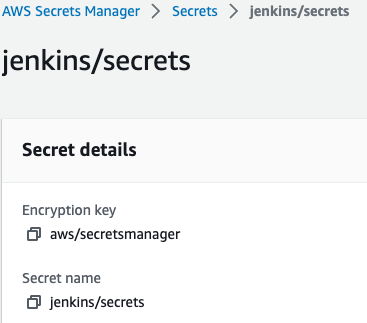

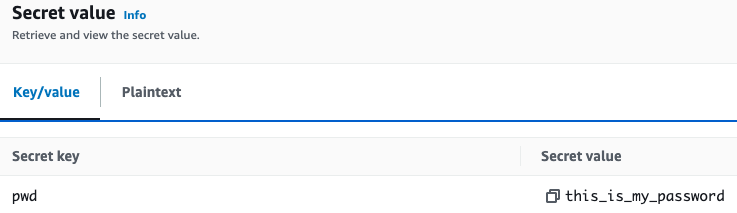

That matches our definition in AWS Secrets Manager:

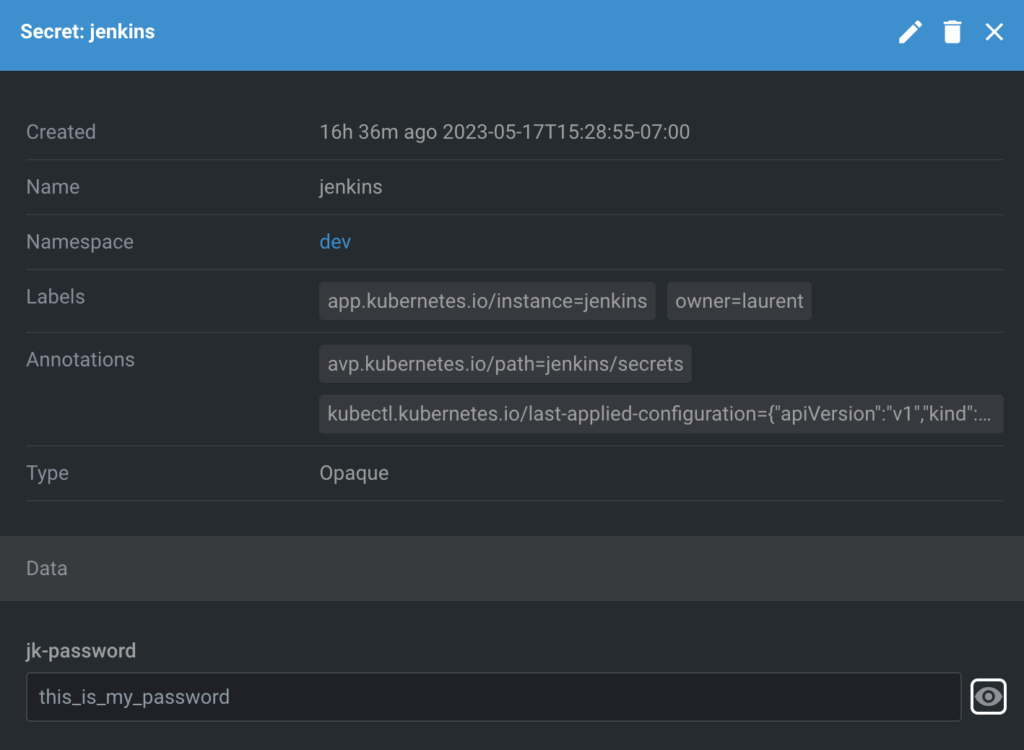

and value
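If you prefer to manage the AWS side as code too, a secret with the same name and key could be declared in a CloudFormation template. The sketch below is hypothetical (the JenkinsSecret logical ID, description, and password settings are illustrative); it asks AWS to generate the pwd value, so no plaintext password ends up in the template either.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: Hypothetical template for the secret read by the Jenkins example
Resources:
  JenkinsSecret:                    # illustrative logical ID
    Type: AWS::SecretsManager::Secret
    Properties:
      Name: jenkins/secrets         # must match the avp.kubernetes.io/path annotation
      Description: Initial Jenkins password consumed by argocd-vault-plugin
      GenerateSecretString:
        # Start from an empty JSON object and let AWS generate the "pwd" key,
        # which is the key the <pwd> placeholder refers to
        SecretStringTemplate: '{}'
        GenerateStringKey: pwd
        PasswordLength: 24
        ExcludePunctuation: true
```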

Now you can use the jenkins secret like any other Kubernetes secret. For example, here it provides the initial password of the Jenkins server, passed as an environment variable:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      containers:
        - name: jenkins
          image: bitnami/jenkins:2.249.2
          env:
            - name: JENKINS_USERNAME
              value: "codefresh"
            - name: JENKINS_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: jenkins
                  key: jk-password
          ports:
            - name: http
              containerPort: 8080
```

That concludes the example. The password for the end-user application (running in EKS) is now fetched automatically from AWS Secrets Manager instead of being hardcoded in Git.
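As a variant, the plugin also documents inline-path placeholders, which carry the secret name and key themselves so that the avp.kubernetes.io/path annotation is not needed. A sketch of the same Secret in that style follows; check the syntax against the AVP version you install.

```yaml
kind: Secret
apiVersion: v1
metadata:
  name: jenkins
type: Opaque
stringData:
  # <path:SECRET_NAME#KEY> reads key "pwd" from the AWS secret "jenkins/secrets"
  jk-password: <path:jenkins/secrets#pwd>
```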

Updating secrets

Note that with this method, Argo CD does not know when a secret is updated in AWS Secrets Manager, so it will not fetch the new version by itself.

There are many ways to solve this problem. The most straightforward one is to set up a cron job that refreshes the secrets at regular intervals, for example by triggering a hard refresh of the application so that Argo CD regenerates its manifests and the plugin fetches the current values.

Alternatively, you can force an application refresh by modifying a manifest in Git so that Argo CD syncs again on its own.
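A minimal sketch of the cron job approach, assuming the Application lives in the runtime namespace (shown as the placeholder <RUNTIME_NAMESPACE>) and that the names app-refresher and refresh-jenkins-secrets are hypothetical. It sets the argocd.argoproj.io/refresh=hard annotation on the jenkins Application every hour, which asks Argo CD to discard its cached manifests and regenerate them through the plugin. Because the Application in this article has automated sync with selfHeal enabled, a changed secret value should then be applied without manual action. The alpine/k8s image is reused here only because it already ships kubectl.

```yaml
# Hypothetical names: app-refresher, refresh-jenkins-secrets, <RUNTIME_NAMESPACE>
apiVersion: v1
kind: ServiceAccount
metadata:
  name: app-refresher
  namespace: <RUNTIME_NAMESPACE>
---
# Allows reading and annotating Argo CD Applications in this namespace only
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: app-refresher
  namespace: <RUNTIME_NAMESPACE>
rules:
  - apiGroups: ["argoproj.io"]
    resources: ["applications"]
    verbs: ["get", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: app-refresher
  namespace: <RUNTIME_NAMESPACE>
subjects:
  - kind: ServiceAccount
    name: app-refresher
    namespace: <RUNTIME_NAMESPACE>
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: app-refresher
---
apiVersion: batch/v1
kind: CronJob
metadata:
  name: refresh-jenkins-secrets
  namespace: <RUNTIME_NAMESPACE>
spec:
  schedule: "0 * * * *"            # once per hour; adjust to your needs
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        spec:
          serviceAccountName: app-refresher
          restartPolicy: OnFailure
          containers:
            - name: refresh
              image: alpine/k8s:1.24.16   # toolbox image that includes kubectl
              env:
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
              # A hard refresh makes Argo CD regenerate the manifests,
              # so the plugin reads the secret from AWS again
              command:
                - kubectl
                - annotate
                - --overwrite
                - --namespace=$(POD_NAMESPACE)
                - applications.argoproj.io
                - jenkins
                - argocd.argoproj.io/refresh=hard
```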

Conclusion

In this article, we have seen how to inject secrets from AWS Secrets Manager into an Amazon EKS cluster using Argo CD/Codefresh and the Argo CD Vault Plugin. Note that all the changes we made survive a restart or upgrade of the Codefresh runtime that contains the Argo CD instance, so the process described here only needs to be performed once, during initial setup.

It is also important to note that the Argo CD Vault Plugin is just one of many possible ways of fetching secrets. Several other solutions work with AWS Secrets Manager. Another alternative (which we might cover in a future blog post) is the External Secrets Operator, which has the added capability of refreshing secrets on its own.