What Are Microservices?

Microservices is an architectural style that is increasingly adopted by software development teams. It structures an application as a collection of small autonomous services, modeled around a business domain.

Here are some key characteristics of a microservices architecture:

- Single responsibility: Each microservice is designed around a single business capability or function.

- Independence: Microservices can be developed, deployed, and scaled independently. They run within their own process and communicate with other services via well-defined APIs (application programming interfaces).

- Decentralization: Microservices architecture favors a decentralized model of data management, where each service has its own database to ensure loose coupling.

- Fault isolation: Because each service runs in its own process, a failure in one service is less likely to bring down the others, provided that callers handle the failure gracefully. This improves the resilience of the system.

- Polyglot: Microservices can potentially use any programming language. A microservices pattern allows developers to choose the most suitable technology stack (programming languages, databases, software environment) for each service.

- Containerization: Microservices often take advantage of containerization technologies (like Docker) and orchestration systems (like Kubernetes) for automating deployment, scaling, and management of applications.

Microservices architecture offers various benefits such as flexibility in using different technologies, ease of understanding, adaptability, and scalability. However, it also brings its own set of challenges such as data consistency, service coordination, and increased complexity due to distributed system concerns like network latency, fault tolerance, and message serialization.

How Kubernetes Supports Microservices Architecture

Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It groups containers that make up an application into logical units for easy management and discovery.

Kubernetes provides a platform to schedule and run containers on clusters of physical or virtual machines. By abstracting the underlying infrastructure, it provides a degree of portability across cloud and on-premises environments. It also provides a rich set of features including service discovery, load balancing, secret and configuration management, rolling updates, and self-healing capabilities.

Kubernetes supports the microservices architecture in several ways:

- It provides a robust foundation on which to deploy and run your microservices.

- It provides services such as service discovery and load balancing that are critical for running a microservices architecture.

- It provides the necessary tooling and APIs for automating the deployment, scaling, and management of your microservices.

Managing and Maintaining Microservices with Kubernetes

Deploying Microservices to Kubernetes

Deploying microservices to Kubernetes typically involves creating a Kubernetes Deployment (or a similar object such as a StatefulSet) for each microservice. A Deployment specifies how many replicas of a microservice to run, which container image to use, and how to configure the microservice.

Once the Deployment is created, Kubernetes schedules the specified number of replicas onto nodes in the cluster and monitors them to ensure they keep running. If a replica fails, Kubernetes automatically restarts or replaces it.
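As a minimal sketch of what such a Deployment looks like, the following Python snippet builds an apps/v1 Deployment manifest as a dictionary and prints it as JSON. The service name, image, port, and environment variable are hypothetical values chosen for illustration.

```python
import json


def build_deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Return an apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # How many replicas (pods) of the microservice to run.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            # Which container image to use.
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Configuration passed as environment variables
                            # (LOG_LEVEL is an assumed, illustrative setting).
                            "env": [{"name": "LOG_LEVEL", "value": "info"}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical service name and image, used only for illustration.
deployment = build_deployment(
    "orders", "registry.example.com/orders:1.0.0", replicas=3, port=8080
)
print(json.dumps(deployment, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with kubectl apply -f.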

Scaling Microservices on Kubernetes

Scaling microservices on Kubernetes involves adjusting the number of replicas specified in the Deployment. Increasing the number of replicas allows the microservice to handle more load. Decreasing the number of replicas reduces the resources used by the microservice.

Kubernetes also supports automatic scaling of microservices based on CPU usage or other application-provided metrics. This allows the microservice to automatically adjust to changes in load without manual intervention.
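To make the automatic scaling decision concrete, here is a simplified sketch of the replica calculation documented for the Horizontal Pod Autoscaler: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The function name and the default bounds are assumptions for illustration, and the sketch leaves out details of the real controller such as its tolerance band and stabilization window.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified autoscaling rule: scale in proportion to metric / target."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp the result to the configured replica bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))
```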

Monitoring Microservices with Kubernetes

Monitoring microservices in a Kubernetes environment involves collecting metrics from the Kubernetes nodes, the Kubernetes control plane, and the microservices themselves.

Kubernetes provides built-in metrics for nodes and the control plane, and these metrics can be collected and visualized using tools like Prometheus and Grafana.

For the applications running within each microservice, you can use application performance monitoring (APM) tools to collect detailed performance data. These tools can provide insights into service response times, error rates, and other important performance indicators.
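As a rough illustration of application-level metrics, the sketch below counts requests per path and status code, derives an error rate, and renders the counters in the Prometheus text exposition format. It uses only the standard library; the class and metric names are hypothetical, and a real service would more likely rely on a Prometheus client library or an APM agent.

```python
from collections import Counter


class RequestMetrics:
    """In-memory request counters for a single service instance."""

    def __init__(self) -> None:
        self._requests = Counter()

    def observe(self, path: str, status: int) -> None:
        """Record one handled request."""
        self._requests[(path, status)] += 1

    def error_rate(self) -> float:
        """Fraction of requests that ended in a 5xx status."""
        total = sum(self._requests.values())
        errors = sum(
            count for (_, status), count in self._requests.items() if status >= 500
        )
        return errors / total if total else 0.0

    def render(self) -> str:
        """Render the counters in the Prometheus text exposition format."""
        lines = [
            "# HELP http_requests_total Total HTTP requests handled.",
            "# TYPE http_requests_total counter",
        ]
        for (path, status), count in sorted(self._requests.items()):
            lines.append(
                f'http_requests_total{{path="{path}",status="{status}"}} {count}'
            )
        return "\n".join(lines) + "\n"


metrics = RequestMetrics()
for status in (200, 200, 200, 503):
    metrics.observe("/orders", status)
print(metrics.render())
print(f"error rate: {metrics.error_rate():.2f}")
```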

Debugging Microservices in a Kubernetes Environment

Debugging microservices in a Kubernetes environment involves examining the logs and metrics of the microservices, and potentially attaching a debugger to the running microservice.

Kubernetes provides a built-in mechanism for viewing container logs (kubectl logs), and its metrics can help diagnose performance issues. The kubectl debug command can also target a node (kubectl debug node/<node-name>): it deploys a pod onto the node you want to troubleshoot, which is useful when you cannot reach the node over SSH.

If these tools are not sufficient, you can attach a debugger to the running microservice. However, this is more complex in a Kubernetes environment because the microservices run in containers on potentially many different nodes.
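The log and node-debugging commands mentioned above can be sketched as small Python helpers that assemble the kubectl argument lists. The pod, namespace, and node names are hypothetical, and busybox is only an assumed debug image. The helpers build the commands without running them, so they can be inspected first or passed to subprocess.run.

```python
import shlex


def logs_command(
    pod: str, namespace: str = "default", tail: int = 100, previous: bool = False
) -> list[str]:
    """Build a `kubectl logs` invocation for one pod."""
    cmd = ["kubectl", "logs", pod, "--namespace", namespace, f"--tail={tail}"]
    if previous:
        # Show logs from the previous container instance, e.g. after a crash.
        cmd.append("--previous")
    return cmd


def node_debug_command(node: str, image: str = "busybox") -> list[str]:
    """Build a `kubectl debug` invocation that starts a debug pod on a node."""
    return ["kubectl", "debug", f"node/{node}", "-it", f"--image={image}"]


# Hypothetical pod, namespace, and node names.
print(shlex.join(logs_command("orders-7d9f", namespace="shop", previous=True)))
print(shlex.join(node_debug_command("worker-1")))
```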

Implementing Continuous Delivery/Continuous Deployment (CD) with Kubernetes

Kubernetes provides a solid foundation for implementing continuous delivery or continuous deployment (CD) for microservices. The Kubernetes Deployment object provides a declarative way to manage the desired state of your microservices. This makes it easy to automate the process of deploying, updating, and scaling your microservices.

In addition, Kubernetes provides built-in support for rolling updates. This allows you to gradually roll out changes to your microservices, reducing the risk of introducing a breaking change. Open source tools like Argo Rollouts provide more reliable rollback functionality, as well as support for progressive deployment strategies like blue/green deployments and canary releases.
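To illustrate how a rolling update limits risk, the sketch below shows the strategy fragment of a Deployment spec and computes the pod-count bounds it implies during a rollout. It assumes the documented rounding rules (a percentage maxSurge rounds up, a percentage maxUnavailable rounds down) and the default value of 25% for both; the helper names are hypothetical.

```python
import math

# Strategy fragment of a Deployment spec (these are the default values).
strategy = {
    "type": "RollingUpdate",
    "rollingUpdate": {"maxSurge": "25%", "maxUnavailable": "25%"},
}


def resolve(value, replicas: int, round_up: bool) -> int:
    """Resolve an absolute count or a percentage string such as "25%"."""
    if isinstance(value, str) and value.endswith("%"):
        fraction = replicas * int(value[:-1]) / 100
        return math.ceil(fraction) if round_up else math.floor(fraction)
    return int(value)


def rollout_bounds(replicas: int, rolling_update: dict) -> dict:
    """Return the pod-count limits that hold while the rollout is in progress."""
    surge = resolve(rolling_update["maxSurge"], replicas, round_up=True)
    unavailable = resolve(rolling_update["maxUnavailable"], replicas, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


print(rollout_bounds(10, strategy["rollingUpdate"]))
```

With 10 replicas and the defaults, the rollout never runs more than 13 pods in total and never drops below 8 available pods.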

6 Best Practices for Microservices on Kubernetes

Here are a few ways you can implement microservices in Kubernetes more effectively.

1. Manage Traffic with Ingress

Managing traffic in a microservices architecture can be complex. With many independent services, each with its own unique endpoint, routing requests to the correct service can be a challenge. This is where Kubernetes Ingress comes in.

Ingress is an API object that defines HTTP and HTTPS routing to services within a cluster based on host and path. An Ingress controller running in the cluster implements these rules, effectively acting as a reverse proxy that routes incoming requests to the appropriate service. This allows you to expose multiple services under the same IP address, simplifying your application’s architecture and making it easier to manage.

In addition to simplifying routing, Ingress also provides other features such as SSL/TLS termination, load balancing, and name-based virtual hosting. These features can greatly improve the performance and security of your microservices application.
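As a sketch of host- and path-based routing, the following Python snippet builds a networking.k8s.io/v1 Ingress manifest that sends two URL prefixes on one host to two different services, with optional TLS termination. The host, service names, ports, and secret name are all hypothetical.

```python
import json


def build_ingress(name: str, host: str, routes: dict, tls_secret: str = None) -> dict:
    """Build an Ingress that maps URL path prefixes to (service, port) pairs."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in routes.items()
    ]
    spec = {"rules": [{"host": host, "http": {"paths": paths}}]}
    if tls_secret:
        # TLS termination: the named Secret holds the certificate and key.
        spec["tls"] = [{"hosts": [host], "secretName": tls_secret}]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": spec,
    }


# Hypothetical host, services, and secret, used only for illustration.
ingress = build_ingress(
    "shop",
    "shop.example.com",
    {"/orders": ("orders", 8080), "/payments": ("payments", 8080)},
    tls_secret="shop-tls",
)
print(json.dumps(ingress, indent=2))
```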

2. Leverage Kubernetes to Scale Microservices

One of the key benefits of using a microservices architecture is the ability to scale individual services independently. This allows you to allocate resources more efficiently and handle varying loads more effectively. Kubernetes provides several tools to help you scale your microservices.

One such tool is the Horizontal Pod Autoscaler (HPA). The HPA automatically scales the number of pods in a Deployment based on observed CPU utilization or, with custom metrics support, on other application-provided metrics. This allows your application to automatically respond to changes in load, ensuring that you have the necessary resources to handle incoming requests.
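A minimal sketch of an HPA definition follows, built as a Python dictionary using the autoscaling/v2 API. It targets a hypothetical Deployment named orders and aims for 60% average CPU utilization; the name, replica bounds, and target value are illustrative assumptions.

```python
import json


def build_hpa(
    deployment: str, min_replicas: int, max_replicas: int, cpu_percent: int
) -> dict:
    """Build an autoscaling/v2 HorizontalPodAutoscaler for a Deployment."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            # The workload whose replica count the HPA adjusts.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        # Target average CPU utilization across all pods.
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_percent,
                        },
                    },
                }
            ],
        },
    }


hpa = build_hpa("orders", min_replicas=2, max_replicas=10, cpu_percent=60)
print(json.dumps(hpa, indent=2))
```

Utilization is measured relative to each container's CPU request, so the target Deployment needs CPU requests set for this metric to work.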

Kubernetes also supports manual scaling, which allows you to increase or decrease the number of pods in a Deployment on-demand. This can be useful for planned events, such as a marketing campaign, where you expect a temporary increase in load.

3. Use Namespaces

In a large, complex application, organization is key. Kubernetes namespaces provide a way to divide cluster resources between multiple users or teams. Each namespace provides a scope for names, and the names of resources in one namespace do not overlap with the names in other namespaces.

Using namespaces can greatly simplify the management of your microservices. By grouping related services into the same namespace, you can manage them as a unit, applying policies and access controls at the namespace level.
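As one possible illustration of a namespace-level policy, the sketch below builds a Namespace for a team together with a ResourceQuota that caps what the services in that namespace may request in total. The namespace name, team label, and quota values are hypothetical.

```python
import json


def build_namespace(name: str, team: str) -> dict:
    """Build a Namespace labeled with the owning team."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": {"team": team}},
    }


def build_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """Build a ResourceQuota that applies to everything in the namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "pods": str(pods),
            }
        },
    }


# Wrap both objects in a List so they can be applied together.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        build_namespace("shop", team="checkout"),
        build_quota("shop", cpu="4", memory="8Gi", pods=20),
    ],
}
print(json.dumps(bundle, indent=2))
```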

4. Implement Health Checks

It is essential to implement health checks for your microservices. Health checks are a way to monitor the status of your services and ensure that they are functioning correctly.

Kubernetes provides several types of health checks, of which readiness probes and liveness probes are the most commonly used. Readiness probes determine whether a pod is ready to accept requests, while liveness probes determine whether a container is still healthy or needs to be restarted. A third type, the startup probe, holds off the other two until a slow-starting container has finished initializing.

Health checks are a crucial part of maintaining a resilient and responsive application. They allow Kubernetes to stop routing traffic to pods that are not ready and to restart containers that are not functioning correctly, ensuring that your application remains available and responsive.
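A minimal sketch of how readiness and liveness probes are declared on a container follows, again as a Python dictionary printed as JSON. The /ready and /healthz endpoints, the port, and the timing values are assumptions; the service itself would have to implement those endpoints.

```python
import json


def http_probe(
    path: str,
    port: int,
    period_seconds: int,
    failure_threshold: int,
    initial_delay_seconds: int = 0,
) -> dict:
    """Build an HTTP GET probe definition."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay_seconds,
        "periodSeconds": period_seconds,
        "failureThreshold": failure_threshold,
    }


# Container fragment for a pod template (hypothetical name, image, endpoints).
container = {
    "name": "orders",
    "image": "registry.example.com/orders:1.0.0",
    "ports": [{"containerPort": 8080}],
    # Failing readiness removes the pod from Service endpoints.
    "readinessProbe": http_probe("/ready", 8080, period_seconds=5, failure_threshold=3),
    # Failing liveness causes the container to be restarted.
    "livenessProbe": http_probe(
        "/healthz", 8080, period_seconds=10, failure_threshold=3,
        initial_delay_seconds=15,
    ),
}
print(json.dumps(container, indent=2))
```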

5. Use Service Mesh

A service mesh is a dedicated infrastructure layer for handling service-to-service communication in a microservices architecture. It’s responsible for the reliable delivery of requests through the complex topology of services that constitute a microservices application.

In the context of microservices on Kubernetes, a service mesh provides several benefits, including traffic management, service discovery, load balancing, and failure recovery. It also provides powerful capabilities like circuit breakers, timeouts, retries, and more, which can be vital for maintaining the stability and performance of your microservices.

While Kubernetes does provide some of these capabilities out of the box, a service mesh takes it to the next level, giving you fine-grained control over your service interactions. Whether you choose Istio, Linkerd, or any other service mesh platform, it’s a powerful tool to have in your Kubernetes toolbox.
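To show what a circuit breaker does conceptually, here is a small in-process Python sketch of the pattern. It is not the API of Istio, Linkerd, or any other mesh: a service mesh applies this kind of logic in its proxies, outside the application code. The class name, thresholds, and injectable clock are assumptions made for illustration.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock  # Injectable so the behavior can be tested.
        self._failures = 0
        self._opened_at = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                # Open: fail fast instead of calling the unhealthy service.
                raise CircuitOpenError("circuit open, call rejected")
            # Timeout elapsed: let one trial call through (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                # Open (or re-open) the circuit and restart the timeout.
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A caller would wrap each outbound request, for example breaker.call(fetch_inventory, item_id), where fetch_inventory is a hypothetical function that calls another service.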

6. Design Each Microservice for a Single Responsibility

Designing each microservice with a single responsibility is a foundational principle of microservices architecture and is just as critical when deploying on Kubernetes. The single responsibility principle promotes cohesion and helps in achieving a clean separation of concerns.

In the context of microservices running on Kubernetes, a focused scope for each service ensures easier scaling, monitoring, and management. Kubernetes allows you to specify different scaling policies, resource requests and limits, and security configurations for each microservice. By ensuring that each microservice has a single responsibility, you can take full advantage of these features, tailoring each aspect of the infrastructure to the specific needs of each service.

Furthermore, a single-responsibility design simplifies debugging and maintenance. When an issue arises, it’s much easier to diagnose and fix problems in a service that has a well-defined, singular role. This is especially beneficial in a Kubernetes environment where logs, metrics, and other debugging information can be voluminous and complex due to the distributed nature of the system.


Microservices Delivery with Codefresh

Codefresh helps you answer important questions within your organization, whether you’re a developer or a product manager:

- What features are deployed right now in any of your environments?

- What features are waiting in Staging?

- What features were deployed on a certain day or during a certain period?

- Where is a specific feature or version in your environment chain?

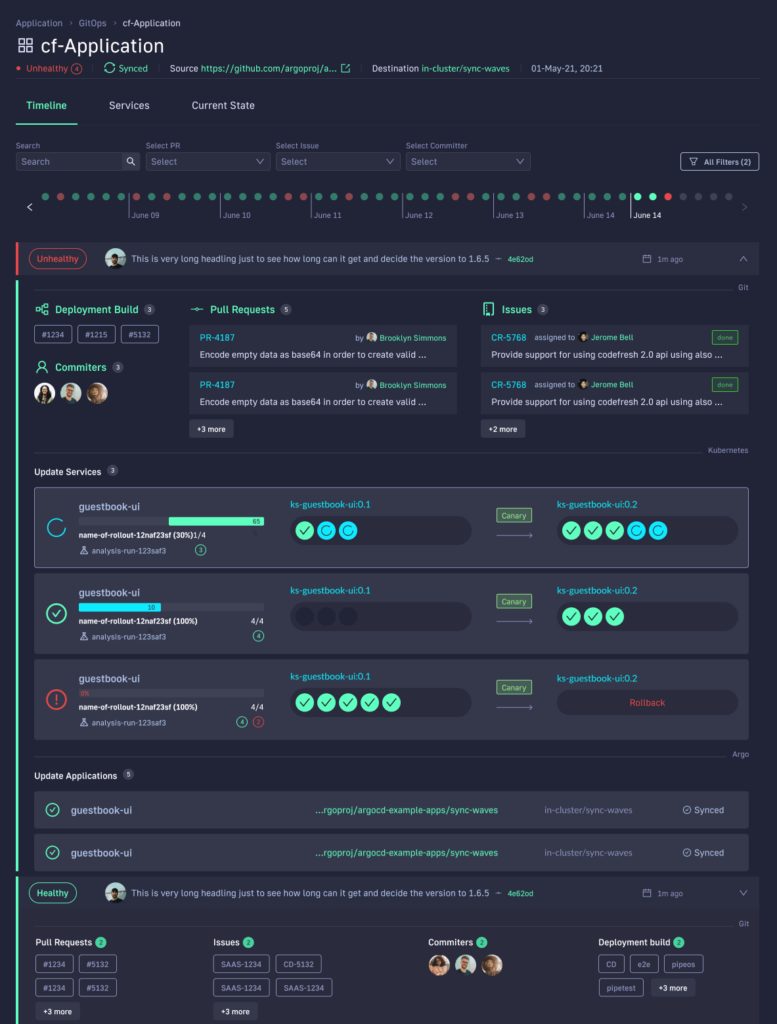

With Codefresh, you can answer all of these questions by viewing one dashboard, our Applications Dashboard that can help you visualize an entire microservices application in one glance:

The dashboard lets you view the following information in one place:

- Services affected by each deployment

- The current state of Kubernetes components

- Deployment history, including a log of who deployed what and when, and the pull request or Jira ticket associated with each deployment
