What Is Continuous Integration?

In a traditional software development process, multiple developers would produce code and integrate their work only toward the end of the release cycle. This introduced many bugs and issues, which were identified and resolved during a long testing stage. Only then could the software be released. As a result, software quality suffered, and teams typically released new versions only once or twice a year.

Continuous integration (CI) aims to solve this problem and makes agile development processes possible. Continuous integration means that every change developers make to their code is promptly integrated into the main branch of their software project. CI systems automatically run tests to catch problems in the code, so developers get rapid feedback and can resolve issues immediately. A feature is not considered done until it works on the main branch and is integrated with other code changes.

Technically, organizations implement CI by deploying a build server that can take code changes, perform automated tests using multiple integrated tools, integrate them into a main branch, and create a new version of software artifacts.
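
The build server's core loop can be sketched in a few lines of Python. This is a minimal, illustrative model under stated assumptions, not the API of any real CI server: `Change`, `Repository`, `run_ci`, the `compiles` check, and the `1.0.N` version scheme are all hypothetical. What it shows is the gate: a change reaches the main branch, and a new artifact version is cut, only when every automated check passes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Change:
    """A hypothetical code change: the files a developer modified."""
    author: str
    files: Dict[str, str]  # file path -> new contents


@dataclass
class Repository:
    """A toy main branch plus the artifact versions built from it."""
    main: Dict[str, str] = field(default_factory=dict)
    versions: List[str] = field(default_factory=list)


def run_ci(repo: Repository, change: Change,
           checks: List[Callable[[Dict[str, str]], bool]]) -> bool:
    """Test a change against main; integrate it and cut a version only if every check passes."""
    candidate = {**repo.main, **change.files}  # main with the change applied
    failed = [check.__name__ for check in checks if not check(candidate)]
    if failed:
        print(f"Rejected change from {change.author}: failed {failed}")
        return False  # main stays untouched, so it remains releasable
    repo.main = candidate  # integrate into the main branch
    repo.versions.append(f"1.0.{len(repo.versions)}")  # new artifact version
    return True


def compiles(files: Dict[str, str]) -> bool:
    """Example check: every Python file must at least compile."""
    try:
        for path, source in files.items():
            if path.endswith(".py"):
                compile(source, path, "exec")
        return True
    except SyntaxError:
        return False


repo = Repository(main={"app.py": "x = 1\n"})
print(run_ci(repo, Change("alice", {"app.py": "x = 2\n"}), [compiles]))  # True
print(run_ci(repo, Change("bob", {"app.py": "x = = 3\n"}), [compiles]))  # False
print(repo.versions)  # ['1.0.0']
```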

CI has dramatically increased both the quality and the velocity of software development. Teams can build more features that provide value to users, and many organizations now release software every week, every day, or even multiple times per day.

What Is Continuous Delivery?

Traditionally, deploying a new software release was a large, complex, risky endeavor. After a new release was tested, operations teams had the task of deploying it to production. Depending on the scale of the software, this could take hours, days, or weeks, and it involved checklists, manual steps, and specialized expertise. Deployments frequently failed, requiring workarounds or urgent support from developers.

This traditional approach presented many challenges. It was stressful for teams, expensive and highly risky for the organization, and introduced bugs and failures in production environments.

Continuous delivery (CD) aims to solve these challenges with automation. In a CD approach, software is packaged and deployed to production as often as possible. A core principle of CD is that every change to the software can be deployed to production with no special effort.

After the CI system integrates new changes and creates a new version of the system, CD systems package the new version, deploy it to a testing environment, automatically evaluate how it operates, and push it to a production environment. This last step can be approved by a human, but should not require any manual effort.
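
The CD flow described above can be sketched as a single promotion function. This is a hypothetical model, assuming just two environments named `testing` and `production`; `deliver`, `health_check`, and `approve` are illustrative names, with the hooks standing in for whatever automated evaluation and approval step a real pipeline uses. Note that the human, if any, only answers yes or no; every deployment step is automated.

```python
from typing import Callable, Dict, Optional


def deliver(version: str,
            environments: Dict[str, Optional[str]],
            health_check: Callable[[str, str], bool],
            approve: Callable[[str], bool] = lambda version: True) -> str:
    """Promote one version from a testing environment to production.

    environments maps an environment name to the version it currently runs.
    health_check and approve are hypothetical hooks standing in for automated
    evaluation and an optional human sign-off.
    """
    environments["testing"] = version  # automated deploy to testing
    if not health_check("testing", version):
        return "failed-in-testing"
    if not approve(version):  # a person may approve, but deploys nothing by hand
        return "awaiting-approval"
    previous = environments.get("production")
    environments["production"] = version  # automated deploy to production
    if not health_check("production", version):
        environments["production"] = previous  # restore the last release
        return "rolled-back"
    return "released"


envs: Dict[str, Optional[str]] = {"testing": None, "production": "1.4.0"}
print(deliver("1.5.0", envs, health_check=lambda env, v: True))  # released
print(envs)  # {'testing': '1.5.0', 'production': '1.5.0'}
```

Restoring the previous release on a failed production check is one design choice among several; some teams prefer to halt and alert instead.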

Implementing CD requires automation of the entire software development lifecycle, including builds, tests, environment setup, and software deployment. All artifacts must be in a source code repository, and there should be automated mechanisms for creating and updating environments.

A true CD pipeline has significant benefits: it allows development teams to deliver value to customers immediately, creating a truly agile development process.

What Is a CI/CD Pipeline?

A Continuous Integration/Continuous Delivery (CI/CD) pipeline is a framework that emphasizes iterative, reliable code delivery processes for agile DevOps teams. It is a workflow that encompasses continuous integration, automated testing, and continuous delivery or deployment. The pipeline arranges these practices into a unified process for developing high-quality software.

Test and build automation is key to a CI/CD pipeline because it helps developers identify potential code flaws early in the software development lifecycle (SDLC). This makes it easier to push code changes to various environments and release the software to production. Automated tests can assess crucial aspects ranging from application performance to security.

In addition to testing and quality control, automation is useful throughout the different phases of a CI/CD pipeline. It helps produce more reliable software and enables faster, more secure releases.

Benefits of a CI/CD Pipeline

Using a CI/CD pipeline provides the following benefits:

- Rapid feedback—continuous integration enables frequent commits and tests. Shorter development cycles allow developers and testers to quickly identify issues that are only discoverable at runtime.

- Frequent deployments—by focusing on smaller commits and regularly available deployment-ready code, teams can quickly deploy changes to the staging or production environment.

- Accurate planning—the faster pace and increased visibility of CI/CD workflows enable teams to plan more accurately, incorporating up-to-date feedback and focusing on the relevant issues. Insights like cadence history help inform roadmaps.

- Cost reduction—teams spend less time on manual tasks such as testing, deployment, and infrastructure maintenance. CI/CD practices help identify flaws early in the SDLC, making them easier to fix. This reduced overall workload helps lower costs, especially over the long term.

- Competitiveness—CI/CD pipelines allow DevOps teams to adopt new technologies and rapidly respond to customer requirements, to give their product a competitive advantage. They can quickly implement new integrations and code, which is important in a constantly evolving market.

Stages of a CI/CD Pipeline

A CI/CD pipeline builds upon the automation of continuous integration with continuous delivery and deployment capabilities. Developers use automated tools to build software, and the pipeline automatically tests each code change and promotes the changes that pass the tests toward release.

A CI/CD pipeline typically includes these four basic stages:

- Source—usually, a source code repository triggers the pipeline. The CI/CD solution identifies a code change (or receives an alert) and responds by running the pipeline. Other pipelines and user-initiated or automatically scheduled workflows may also trigger a pipeline to run.

- Build—various DevOps teams may contribute code they develop on separate machines, pushing it to the central repository. While simple in principle, integrating code developed with different tools and techniques and on different systems can introduce complexities. Beyond version control conflicts, inconsistent code quality across contributors can affect performance. Incorporating the build stage into the unified CI/CD pipeline makes it possible to build every developer contribution automatically and to apply standardization tools, such as linters and formatters, to ensure consistent quality and compatibility.

- Testing—developers use automated tests to validate the performance and correctness of their code. Testing provides a safety layer that prevents errors and bugs from reaching production and impacting end users. Developers are responsible for writing the tests, ideally as part of behavior-driven or test-driven development. This stage may take seconds or hours, depending on the project's size and complexity, and it might contain separate phases for smoke testing, sanity checks, and the like. Large projects tend to run tests in parallel to save time. The testing stage is critical for exposing unforeseen defects in the code so they can be repaired quickly; fast feedback is key to maintaining the workflow.

- Artifact generation—the final stage involves generating an artifact that can be deployed to production. There are several approaches for deploying artifacts. One approach uses scripts or orchestration systems that automate deployment to a target environment and verify that the artifact was deployed correctly. Another, more modern approach is GitOps, in which a reference to the new artifact and all of its necessary configuration are committed to a Git repository, and that declarative configuration is applied automatically to environments.
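
The four stages can be sketched as a small pipeline runner. This is an illustrative sketch, not any particular CI/CD product: the `build`, `tests`, and `package` callables are hypothetical placeholders for real compilers, test runners, and packaging tools, and Python's standard `concurrent.futures.ThreadPoolExecutor` is used to run independent test suites in parallel, as large projects often do.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_pipeline(commit: str,
                 build: Callable[[str], str],
                 tests: Dict[str, Callable[[str], bool]],
                 package: Callable[[str], str]) -> dict:
    """Run the source, build, test, and artifact stages for one commit."""
    log = [f"source: triggered by commit {commit}"]

    binary = build(commit)
    log.append(f"build: produced {binary}")

    # Test suites are independent, so run them in parallel to shorten feedback.
    with ThreadPoolExecutor() as pool:
        outcomes = pool.map(lambda suite: suite(binary), tests.values())
        results = dict(zip(tests.keys(), outcomes))
    failed = sorted(name for name, passed in results.items() if not passed)
    log.append(f"test: {len(results) - len(failed)}/{len(results)} suites passed")
    if failed:
        # Stop here so broken code never becomes a deployable artifact.
        return {"ok": False, "failed": failed, "log": log}

    artifact = package(binary)
    log.append(f"artifact: {artifact}")
    return {"ok": True, "artifact": artifact, "log": log}


result = run_pipeline(
    "a1b2c3",
    build=lambda sha: f"app-{sha}.bin",
    tests={"unit": lambda b: True, "smoke": lambda b: b.endswith(".bin")},
    package=lambda b: b.replace(".bin", ".tar.gz"),
)
print(result["artifact"])  # app-a1b2c3.tar.gz
```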

What Are the Challenges of CI/CD Pipelines?

Environment Limitations

Development and testing teams often have access to limited resources or must share an environment to test code changes. Sharing environments can be challenging for CD workflows. In large projects, multiple teams might deploy code to a single environment simultaneously, and multiple tests may run in parallel. Different commits and tests may require different configurations, and if they rely on the same infrastructure, their needs can clash.

Poorly configured environments can result in failed tests and deployments, slowing down the overall CI/CD process.
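
One common mitigation is to give every pipeline run its own short-lived, isolated environment instead of a shared one. The sketch below is hypothetical: `EnvironmentManager` only tracks names and configuration in memory, whereas a real implementation would create and delete infrastructure, for example through an infrastructure-as-code tool. It shows the two properties that matter: each run gets a unique environment with its own configuration, and teardown happens even when tests fail.

```python
import contextlib
import hashlib
from typing import Dict, Iterator


class EnvironmentManager:
    """Hypothetical allocator of isolated, short-lived test environments."""

    def __init__(self) -> None:
        self.active: Dict[str, dict] = {}  # environment name -> its configuration

    @contextlib.contextmanager
    def ephemeral(self, branch: str, commit: str, config: dict) -> Iterator[str]:
        # A short name derived from branch and commit, so each run gets its own slot.
        suffix = hashlib.sha256(f"{branch}@{commit}".encode()).hexdigest()[:8]
        name = f"test-{suffix}"
        if name in self.active:
            raise RuntimeError(f"{name} is already in use")
        self.active[name] = dict(config)  # private copy of the configuration
        try:
            yield name
        finally:
            del self.active[name]  # always tear down, even when tests fail


manager = EnvironmentManager()
with manager.ephemeral("feature-a", "abc123", {"db": "postgres:15"}) as env_a:
    with manager.ephemeral("feature-b", "def456", {"db": "postgres:16"}) as env_b:
        print(manager.active[env_a], manager.active[env_b])
print(manager.active)  # {} once both environments are torn down
```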

Version Control Issues

Traditional CI/CD pipelines typically depend on multiple resources, components, and processes. Once a pipeline is up and running, each of these components must remain at a stable version for the pipeline's processes to run reliably. Unexpected updates can derail the whole pipeline and slow down the deployment process.

DevOps teams often spend a long time managing the versions of these components. Some teams assign this version management to a specific department or job role within the CI/CD pipeline.

One particularly frustrating scenario is an automatic update that activates without warning and forces a new version onto a critical process. In addition to interrupting the process, the new version might introduce compatibility issues with the existing CI/CD pipeline. Teams then have to restructure the overall CI/CD deployment process to support the new version.
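
A common safeguard against surprise updates is to pin the versions of pipeline tools and fail fast when the installed versions drift. The sketch below assumes hypothetical tool names and plain numeric `major.minor.patch` versions; `PINNED`, `parse`, and `check_versions` are illustrative, not part of any real tool. Running such a check at the start of each pipeline run turns a confusing mid-pipeline failure into a clear error message.

```python
from typing import Dict, List, Tuple

# Hypothetical tools and versions; in practice pins often live in a lockfile.
PINNED: Dict[str, str] = {"compiler": "3.11.9", "test-runner": "8.2.0", "deployer": "2.10.4"}


def parse(version: str) -> Tuple[int, ...]:
    """Turn '3.11.9' into (3, 11, 9); assumes plain numeric versions."""
    return tuple(int(part) for part in version.split("."))


def check_versions(installed: Dict[str, str],
                   pinned: Dict[str, str] = PINNED) -> List[str]:
    """Report every tool whose installed version differs from its pin."""
    problems = []
    for tool, wanted in pinned.items():
        found = installed.get(tool)
        if found is None:
            problems.append(f"{tool}: missing (pinned {wanted})")
        elif parse(found) != parse(wanted):
            direction = "upgraded" if parse(found) > parse(wanted) else "downgraded"
            problems.append(f"{tool}: {direction} to {found} (pinned {wanted})")
    return problems


drift = check_versions({"compiler": "3.11.9", "test-runner": "9.0.0", "deployer": "2.10.4"})
print(drift)  # ['test-runner: upgraded to 9.0.0 (pinned 8.2.0)']
```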

Integration with Legacy Workflows

Adopting agile DevOps practices can be complex, especially when there’s a need to integrate a new CI/CD pipeline into an existing workflow or project. Large legacy projects may be particularly problematic because a single change to one workflow may necessitate changes in others, potentially triggering an entire restructuring.

Implementing CI/CD in an existing project therefore requires careful planning, extensive expertise, and appropriate tooling. A poorly planned implementation can incur significant costs before the pipeline achieves low latency and high quality.

Cross-Team Communication

CI/CD pipelines typically involve a large workforce, often divided into several teams with different responsibilities. Interpersonal communication, especially across different teams, is often the largest obstacle in a CI/CD pipeline. Effective communication is essential for solving issues quickly and ensuring the continued operation of the pipeline.

Human communication also matters because automated communication can fail. For example, an automated tool may output an error without conveying accurate information to the developer responsible for addressing it. In this case, other team members need to forward that information to the right person.

Additional Challenges

In addition to the above, many teams experience the following difficulties when building and maintaining CI/CD pipelines:

- Inefficient test suites – bloated automated testing suites can be difficult to maintain and may cover software functionality only partially.

- Manual database deployments – databases are complex, mission-critical systems which can be difficult to deploy automatically, especially with schema changes.

- Unplanned downtime – CI/CD pipelines can fail, delaying releases and hurting developer productivity.

- Difficult rollbacks – after deploying new releases, in many cases it can be difficult to roll back to a previous stable release in case of problems in production.

- Missing metrics – CI/CD teams can find it difficult to measure and report on the success of releases.

- Static test environments – many test environments are deployed one time and reused, which creates maintenance overhead and causes divergence between test and production environments.
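
Rollbacks become much simpler when the pipeline records which releases were verified healthy. The following sketch is a hypothetical in-memory model (`ReleaseHistory` and its methods are illustrative names), assuming a single production environment. It treats a rollback as just another recorded deployment of the newest known-good release, which also keeps the history auditable.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReleaseHistory:
    """Hypothetical record of production deploys, newest last."""
    releases: List[str] = field(default_factory=list)
    stable: List[str] = field(default_factory=list)  # releases verified healthy

    def deploy(self, version: str) -> None:
        self.releases.append(version)

    def mark_stable(self, version: str) -> None:
        if version not in self.releases:
            raise ValueError(f"{version} was never deployed")
        self.stable.append(version)

    def current(self) -> Optional[str]:
        return self.releases[-1] if self.releases else None

    def rollback(self) -> str:
        """Redeploy the newest stable release that is not currently running."""
        running = self.current()
        for version in reversed(self.stable):
            if version != running:
                self.deploy(version)  # a rollback is just another recorded deploy
                return version
        raise RuntimeError("no earlier stable release to roll back to")


history = ReleaseHistory()
history.deploy("2.3.0")
history.mark_stable("2.3.0")
history.deploy("2.4.0")  # suppose 2.4.0 misbehaves in production
print(history.rollback())  # 2.3.0
print(history.releases)    # ['2.3.0', '2.4.0', '2.3.0']
```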

TIPS FROM THE EXPERT

In my experience, here are tips that can help you better adapt to CI/CD:

- Embrace trunk-based development: Adopt trunk-based development practices to streamline the integration process. This involves keeping branches short-lived and merging changes into the main branch frequently, reducing merge conflicts and integration issues.

- Automate environment provisioning: Automate the creation and teardown of development, testing, and staging environments using infrastructure as code (IaC) tools like Terraform or CloudFormation. This ensures consistency across environments and reduces setup time.

- Integrate monitoring and alerting: Integrate comprehensive monitoring and alerting into your CI/CD pipeline. Use tools like Prometheus, Grafana, or the ELK stack to monitor build performance, deployment success rates, and application health in real time.

- Implement progressive delivery: Use progressive delivery techniques like canary deployments, blue-green deployments, and feature flags to release changes gradually and mitigate risks associated with large-scale deployments.

- Leverage GitOps for consistency: Adopt GitOps principles to manage your CI/CD pipeline configurations. Store all pipeline definitions, infrastructure configurations, and application deployments in Git repositories for version control and transparency.
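
Canary deployments and feature flags both rely on deciding, per user, whether to serve the new version. One common technique, sketched here under stated assumptions, hashes each user ID into a fixed bucket from 0 to 99 and compares it with a rollout percentage. The function names and the `new-checkout` feature are hypothetical, and blue-green switching is not covered.

```python
import hashlib


def bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for one feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def in_canary(user_id: str, feature: str, rollout_percent: int) -> bool:
    """True if this user should receive the new version.

    A user's bucket never changes, so raising rollout_percent from 5 to 25
    to 100 only ever adds users to the canary group.
    """
    return bucket(user_id, feature) < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
for percent in (5, 25, 100):
    share = sum(in_canary(u, "new-checkout", percent) for u in users) / len(users)
    print(f"{percent}% rollout -> {share:.1%} of users on the new version")
```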

CI/CD Pipelines in a Cloud Native Environment

Here are a few ways the cloud native environment is changing the way CI/CD pipelines are built and managed, introducing both advantages and challenges.

Public Clouds

Public cloud platforms have made it possible to quickly stand up entire environments in a self-service model. Instead of waiting for IT to provision resources, organizations can simply request and receive them on-demand. It has also become easy to automate resource provisioning as part of CI/CD processes.

Containers

Containers, popularized by Docker, allow DevOps teams to package software with all its dependencies, so that it runs consistently on any machine with a compatible container runtime. A container is a standardized unit that can be deployed in any environment. It is also immutable, meaning that its configuration stays the same until it is torn down and replaced.

Container images can define the development environment, testing or staging environment, and production environment. The same container image can be promoted throughout the pipeline, with only environment-specific configuration changing between stages, which keeps development and testing consistent. In a CI/CD process, containers can be used to deploy a build to every stage of the pipeline.
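
In practice, promoting the same image usually means referencing it by its content digest and varying only configuration between stages. The sketch below assumes three stages and a single `LOG_LEVEL` setting; the registry host, repository name, and all-zero digest are hypothetical placeholders, and `Deployment` and `promote` are illustrative names rather than a real deployment API.

```python
from dataclasses import dataclass
from typing import Dict, List

STAGES = ["dev", "staging", "production"]


@dataclass
class Deployment:
    stage: str
    image: str              # immutable image reference, pinned by digest
    config: Dict[str, str]  # environment-specific settings injected at runtime


def promote(image: str, env_config: Dict[str, Dict[str, str]]) -> List[Deployment]:
    """Deploy one image to every stage; only the configuration varies."""
    if "@sha256:" not in image:
        # A tag like ":latest" can be repointed; a digest always names the same bits.
        raise ValueError("pin the image by digest")
    return [Deployment(stage, image, env_config[stage]) for stage in STAGES]


image = "registry.example.com/shop/api@sha256:" + "0" * 64  # hypothetical digest
plan = promote(image, {
    "dev": {"LOG_LEVEL": "debug"},
    "staging": {"LOG_LEVEL": "info"},
    "production": {"LOG_LEVEL": "warn"},
})
print({d.stage: d.config["LOG_LEVEL"] for d in plan})
# {'dev': 'debug', 'staging': 'info', 'production': 'warn'}
```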

Kubernetes

As soon as an organization scales up its use of containers, they become difficult to manage, and issues like networking, storage management, and security arise. This is where Kubernetes comes in.

Kubernetes is a popular open source platform that orchestrates containers at large scale. It introduces the concept of a cluster, a group of physical or virtual machines called nodes on which a team can run containerized workloads. Instead of managing individual containers, teams manage pods, an abstraction that groups one or more containers to perform a functional role.

Kubernetes presents major benefits for CI/CD pipelines. It supports declarative configuration and has advanced automation capabilities, making it possible to represent entire CI/CD processes as code, with resource provisioning and infrastructure fully managed by Kubernetes.

The massive adoption of containers and Kubernetes means that many CI/CD pipelines have already transitioned to Kubernetes, or soon will. Deploying a CI/CD pipeline to a Kubernetes cluster requires the following components:

- Kubernetes Cluster—an organization must deploy a Kubernetes cluster, either self-managed or in the form of a managed Kubernetes service. A container runtime that implements the Kubernetes Container Runtime Interface (CRI), such as containerd or CRI-O, must run on every node in the cluster.

- Version control system—a repository, such as a Git repository, that stores declarative configurations.

- CI/CD tool—a system that builds container images, runs tests, and deploys the resulting artifacts to the Kubernetes cluster.

How to Apply GitOps to a CI/CD Pipeline

GitOps is a paradigm that enables developers to operate in a full self-service environment, without requiring assistance from IT staff. GitOps requires that developers create and manage environments using declarative configurations. These declarative configurations become the basis of the CI/CD process and are used to create all environments: dev, test, and production.

In GitOps, changes start with a pull request to a Git repository. A new version of declarative configuration in the repo triggers a continuous integration (CI) process that builds new artifacts, typically container images. Then a continuous deployment (CD) process begins, automatically updating the infrastructure, so that the environment converges to a desired state defined in Git.

This end-to-end automation eliminates manual changes and the human error they introduce, improves consistency, and provides a full audit trail of all changes. Most importantly, it enables fast, reliable rollback: if something breaks in an environment, reverting the configuration in Git to a previous working version restores it.
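
In GitOps, a rollback is typically just another commit. The sketch below models that with a hypothetical in-memory `ConfigRepo` rather than real Git: `revert_last` behaves like `git revert`, adding a new commit that restores the previous desired state instead of rewriting history. The audit trail stays intact, and the environment converges back to the working version like any other change.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

State = Dict[str, str]  # component -> image it should run


@dataclass
class ConfigRepo:
    """A toy stand-in for a Git repository of declarative configuration."""
    commits: List[Tuple[str, State]] = field(default_factory=list)

    def commit(self, message: str, state: State) -> None:
        self.commits.append((message, dict(state)))  # history is append-only

    def head(self) -> State:
        return self.commits[-1][1]

    def revert_last(self) -> None:
        """Like `git revert`: add a new commit that restores the previous state."""
        if len(self.commits) < 2:
            raise RuntimeError("nothing to revert to")
        message = self.commits[-1][0]
        self.commit(f'Revert "{message}"', self.commits[-2][1])


config_repo = ConfigRepo()
config_repo.commit("Deploy api 1.4.0", {"api": "shop/api:1.4.0"})
config_repo.commit("Deploy api 1.5.0", {"api": "shop/api:1.5.0"})
config_repo.revert_last()  # suppose 1.5.0 breaks the environment
print(config_repo.head())  # {'api': 'shop/api:1.4.0'}
print([message for message, _ in config_repo.commits])
# ['Deploy api 1.4.0', 'Deploy api 1.5.0', 'Revert "Deploy api 1.5.0"']
```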

To fully adopt GitOps, developers need a platform. GitOps CI/CD pipeline tools can bridge the gap between Git pull requests and orchestration systems like Kubernetes. Development teams create a hook from their Git repository to the platform, and then every configuration change triggers a CI/CD process executed by the orchestrator.

Kubernetes CI/CD Pipelines with Argo

Launched in 2018, Argo CD is an open source project that provides a continuous deployment solution for Kubernetes. Argo CD is Kubernetes-native, and uses a GitOps deployment model, with a Git repository as the single source of truth for Kubernetes infrastructure states.

This deployment model is also known as pull-based deployment: the solution monitors Kubernetes resources and updates them based on the configurations in the Git repo. It contrasts with push-based deployment, which requires the user to trigger events from an external service or system. Argo CD supports both pull-based and push-based deployment models, synchronizing the current (live) state with the desired target state.

How does Argo CD work?

The process starts when a developer opens a pull request and the new code changes are merged into the main branch. This triggers a CI process, which pushes images to a container registry and commits the new image tags to a Git repository. A webhook from the Git repository then notifies Argo CD of the change, prompting it to sync (Argo CD can also detect changes by periodically polling the repository).

Argo CD identifies changes to the Git repository, compares the new configuration with the current state of the Kubernetes cluster, and instructs Kubernetes to make the necessary changes. It functions as a Kubernetes controller, continuously monitoring running applications and comparing their live state to the desired state specified in the Git repository. If there is a difference between the states, the controller marks the application as OutOfSync and adjusts the cluster state until the new version of the application is deployed.
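
The comparison Argo CD's controller performs can be pictured as a diff-and-apply loop. This is a deliberately simplified sketch, not Argo CD's code: resources are plain dictionaries keyed by name, `diff` and `reconcile` are hypothetical helpers, and the `prune` flag only loosely mirrors Argo CD's own prune option. Real Argo CD renders manifests, compares them with live objects through the Kubernetes API, and syncs automatically only when an automated sync policy is enabled. The status strings match the Synced and OutOfSync states Argo CD reports.

```python
from typing import Dict, List, Tuple

Manifests = Dict[str, dict]  # resource name -> desired or live spec


def diff(desired: Manifests, live: Manifests) -> List[Tuple[str, str]]:
    """List the actions that would make the live state match the desired state."""
    actions = [("create", name) for name in desired if name not in live]
    actions += [("update", name) for name in desired
                if name in live and live[name] != desired[name]]
    actions += [("delete", name) for name in live if name not in desired]
    return actions


def reconcile(desired: Manifests, live: Manifests, prune: bool = False) -> str:
    """One pass of a controller loop; returns the status observed before syncing."""
    actions = diff(desired, live)
    if not actions:
        return "Synced"
    for action, name in actions:
        if action in ("create", "update"):
            live[name] = dict(desired[name])
        elif prune:  # only remove resources missing from Git when asked to
            del live[name]
    return "OutOfSync"


git = {"api": {"image": "shop/api:1.5.0", "replicas": 3}}
cluster = {"api": {"image": "shop/api:1.4.0", "replicas": 3},
           "old-job": {"image": "shop/job:0.9"}}
print(reconcile(git, cluster, prune=True))  # OutOfSync
print(reconcile(git, cluster, prune=True))  # Synced
```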

Argo CD allows DevOps teams to leverage existing investments in tooling. It can process declarative configurations written in plain YAML or JSON, packaged as Helm charts, or created using tools like Kustomize or Jsonnet.

Using Codefresh Workflows to Automate CI/CD Pipelines

Codefresh includes a Workflows capability that allows you to define any kind of software process for creating artifacts, running unit tests, running security scans, and all other actions that are typically used in Continuous Integration (CI).

Codefresh workflows redefine the way pipelines are created by bringing GitOps into the mix and adopting a Git-based process instead of the usual ClickOps.

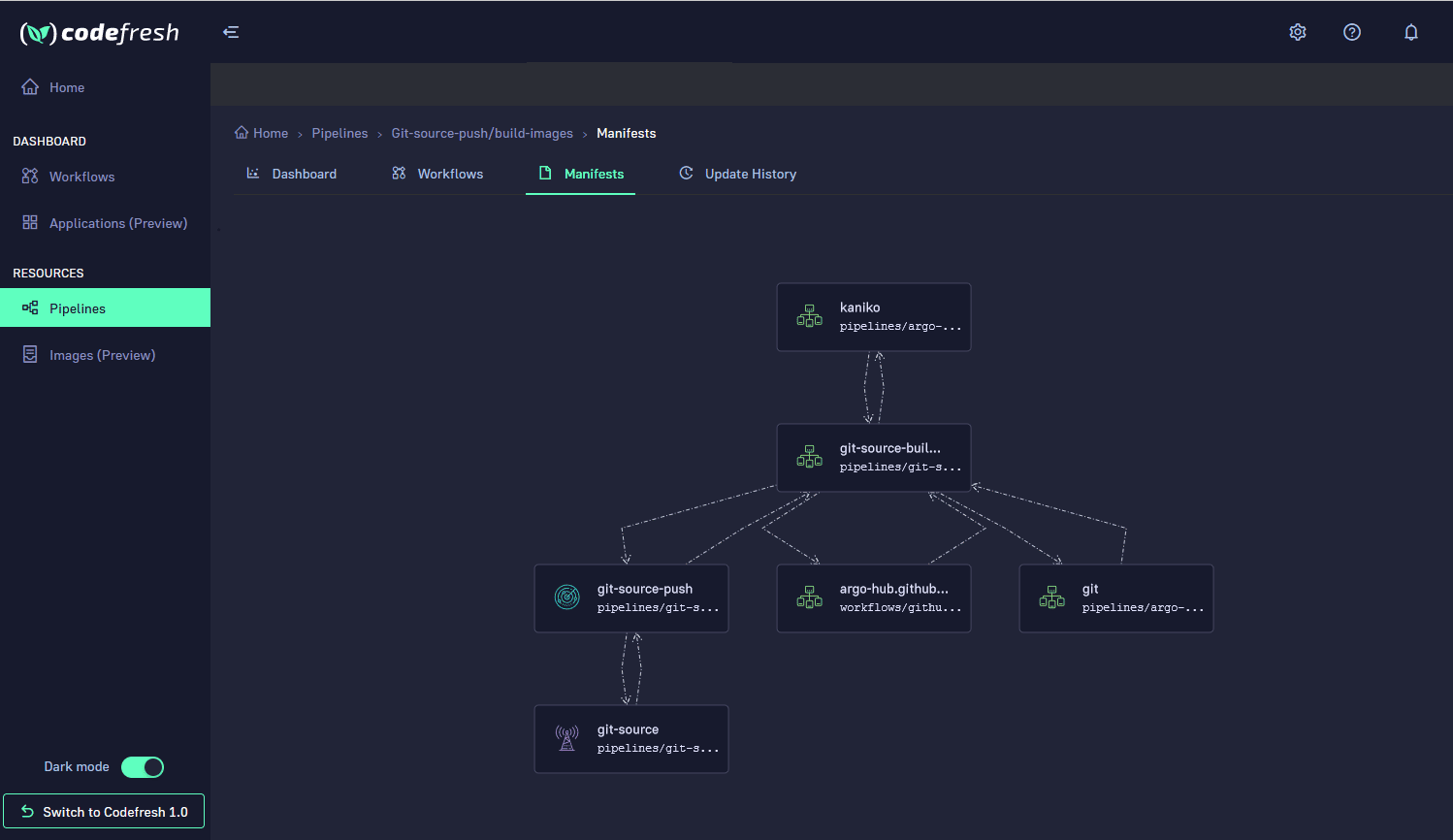

Here is an example of a Codefresh workflow as it is presented in the graphical user interface. The tree representation shows an overview of the workflow along with its major components/steps and how they communicate with each other.

Codefresh Workflows are Powered by Argo Workflows and Argo Events

Codefresh is powered by the open source Argo projects, and workflows are no exception. The engine powering Codefresh workflows is the popular Argo Workflows project, together with Argo Events. Codefresh is fully adopting an open source development model, moving toward a standardized and open workflow runtime while contributing all of our improvements back to the community.

Adopting Codefresh with Argo Workflows has the following advantages:

- Mature, battle-tested runtime for all pipelines

- Flexibility of the Argo engine and its Kubernetes native capabilities

- Reusable steps in the form of Workflow templates

- Leveraging existing Artifact integrations

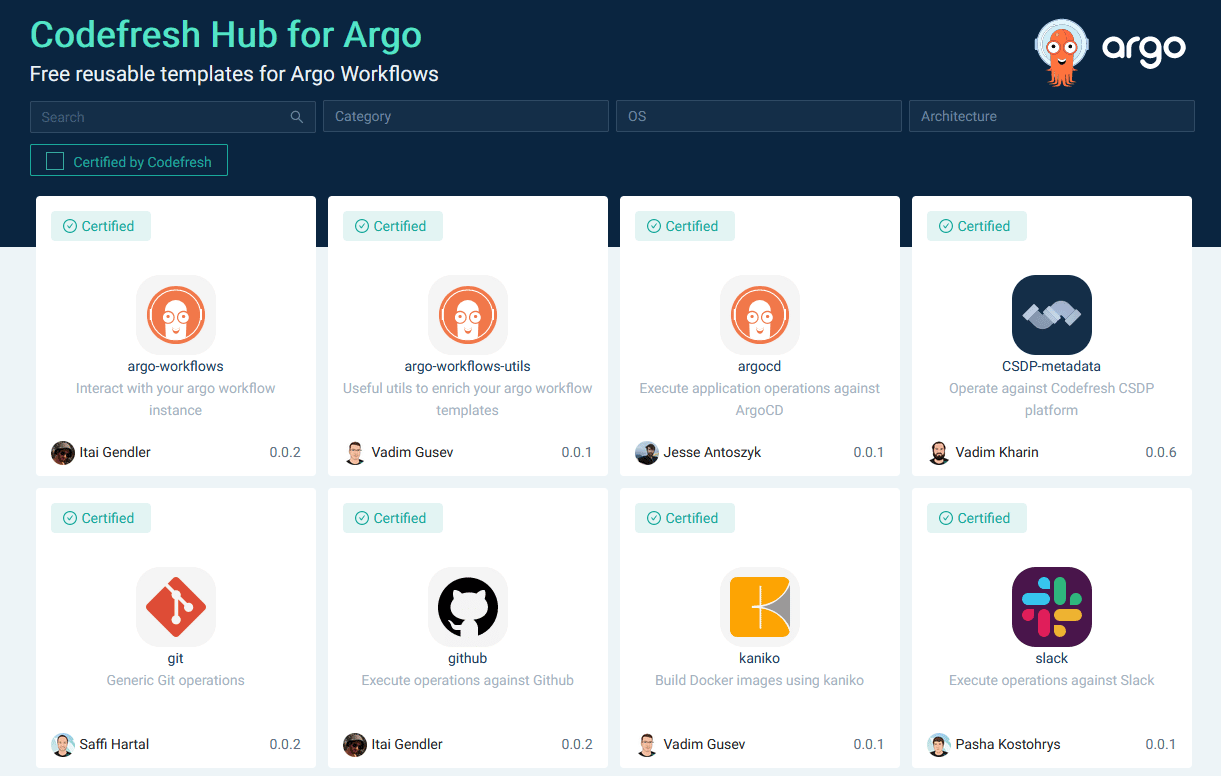

Apart from individual features and enhancements, one major contribution to the Argo ecosystem is the introduction and hosting of the Codefresh Hub for Argo Workflows. The Hub lets you find curated Argo templates, use them in your workflows, and share and reuse them in a way that was never possible before.
