Part 2

In the previous post, I covered Docker CI and talked about Continuous Deployment and Continuous Delivery.

This time, I’m going to share our point of view on building effective CD (both types of CD) for a microservice-based application running on a Kubernetes cluster.

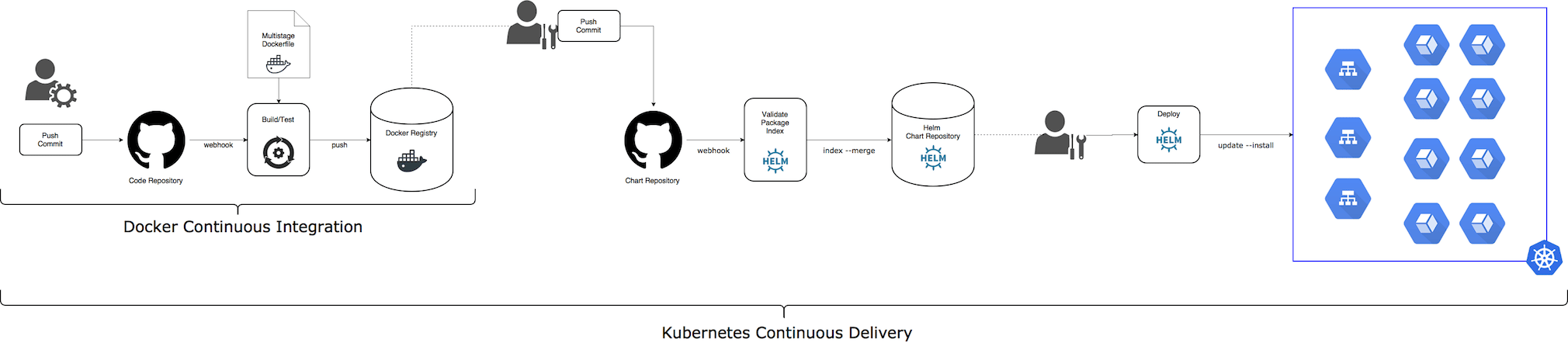

Kubernetes Continuous Delivery (CD)

Building a Docker image on git push is the very first step you need to automate, but…

Docker Continuous Integration is not Kubernetes Continuous Deployment/Delivery

After CI completes, you just have a new build artifact – a Docker image file.

Now you need to deploy it to the desired environment (a Kubernetes cluster), and maybe also modify other Kubernetes resources, such as configurations, secrets, volumes, and policies. Or maybe you do not have a “pure” microservice architecture, and some of your services still have interdependencies and have to be released together. I know this is not “by the book”, but it is a very common case: people are not perfect, and neither are all the architectures out there. Usually, you start from an existing project and move it toward a new, ideal architecture step by step.

So, on one side, you have one or more freshly baked Docker images.

On the other side, there are one or more environments where you want to deploy these images along with related configuration changes. And most likely, you would like to reduce the required manual effort to the bare minimum, or eliminate it completely if possible.

Continuous Delivery is the next step we are taking.

Most CD tasks should be automated, but a few may still be done manually. There can be different reasons for manual tasks: you cannot achieve full automation, you want a feeling of control (deciding when to release by pressing a “Release” button), or some manual effort is genuinely required (bringing up a new server and switching it on).

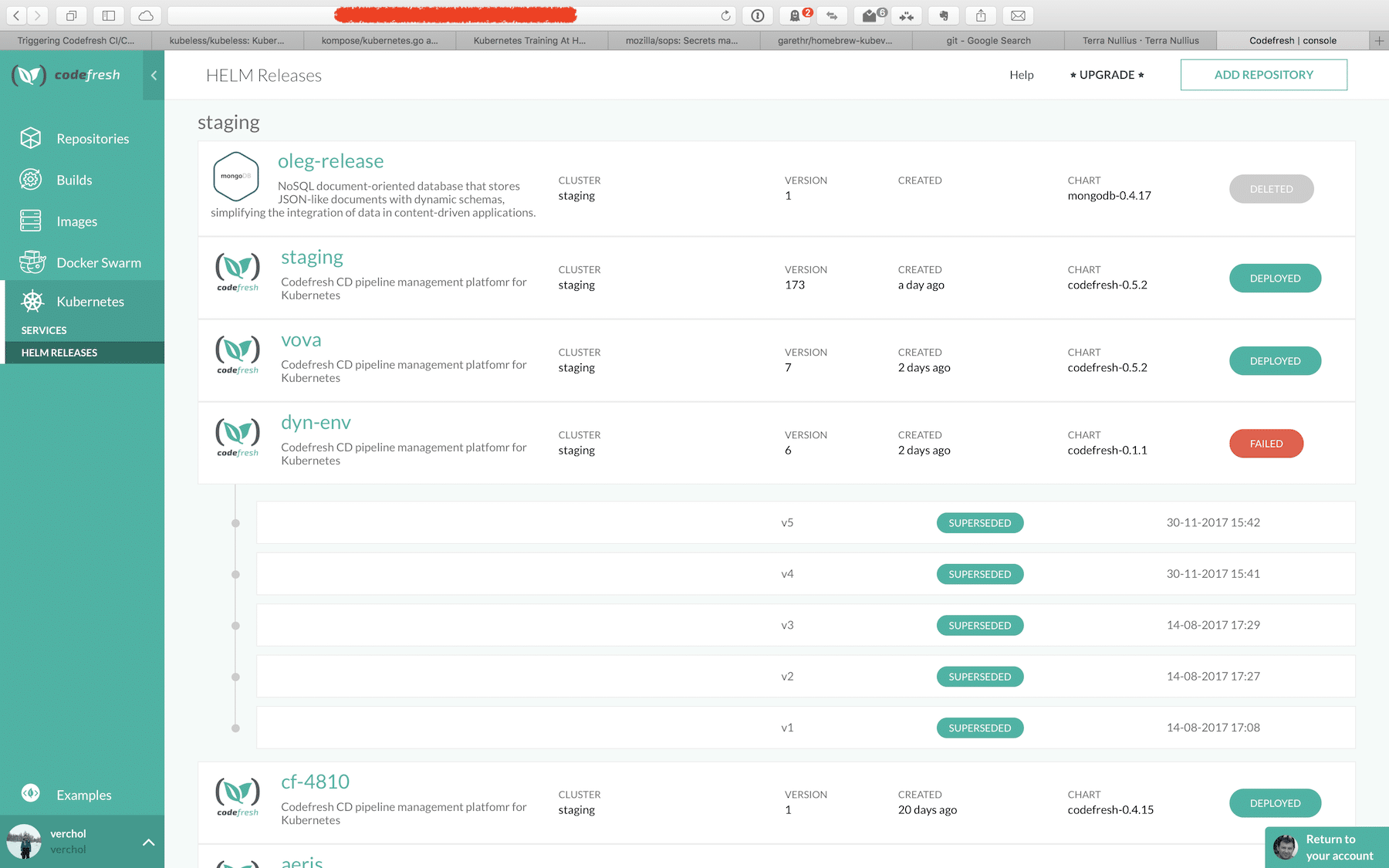

For our Kubernetes Continuous Delivery pipeline, we manually update the Codefresh application Helm chart with the appropriate image tags, and sometimes we also update other Kubernetes YAML template files (for example, to define a new PVC or environment variable). Once changes to our application chart are pushed into the git repository, an automated Continuous Delivery pipeline execution is triggered.

Codefresh includes some helper steps that make building a Kubernetes CD pipeline easier. First, there is a built-in helm update step that can install or update a Helm chart on a specified Kubernetes cluster or namespace, using a Kubernetes context defined in the Codefresh account.

Codefresh also provides a nice view of what is running in your Kubernetes cluster, where it comes from (release, build), and what it contains: images, image metadata (quality, security, etc.), and code commits.

We use our own service (Codefresh) to build an effective Kubernetes Continuous Delivery pipeline for deploying Codefresh itself. We also constantly add new features and useful functionality that simplify our lives as developers and, hopefully, help our customers too.

Typical Kubernetes Continuous Delivery flow

- Set up Docker CI for the application microservices

- Update microservice code and chart template files, if needed (adding ports, env variables, volumes, etc.)

- Wait until Docker CI completes and you have a new Docker image for the updated microservice(s)

- Manage the application Helm chart code in a separate `git` repository; use the same git branch methodology as for microservices

- Manually update the `imageTag` values for the updated microservice(s)

- Manually update the application Helm chart version

- Trigger the CD pipeline on a `git push` event for the application Helm chart git repository:
  - validate Helm chart syntax using the `helm lint` command
  - convert the Helm chart to Kubernetes template files (with the `helm template` plugin) and use the `kubeval` tool to validate these files
  - package the application Helm chart and push it to the Helm chart repository
  - Tip: create a few chart repositories; I suggest having a chart repository per environment: `production`, `staging`, `develop`

- Manually (or automatically) execute `helm upgrade --install` from the corresponding chart repository
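The “manually update the application Helm chart version” step is an easy candidate for a small script. Below is a minimal Python sketch, assuming the chart’s own SemVer sits on a top-level `version: X.Y.Z` line in `Chart.yaml`; the function name `bump_chart_version` is hypothetical and not part of Helm or Codefresh.

```python
import re

# Assumption: the chart's own version is a top-level line such as
# `version: 1.4.2` (optionally quoted). Indented keys, e.g. dependency
# versions, are not matched because the pattern is anchored at column 0.
_VERSION_LINE = re.compile(
    r"^version:[ \t]*([\"']?)(\d+)\.(\d+)\.(\d+)\1[ \t]*$", re.MULTILINE
)


def bump_chart_version(chart_yaml: str, part: str = "patch") -> str:
    """Return Chart.yaml text with its SemVer `version` bumped (hypothetical helper).

    `part` is "major", "minor" or "patch"; pre-release suffixes are not handled.
    """
    match = _VERSION_LINE.search(chart_yaml)
    if match is None:
        raise ValueError("no top-level 'version: X.Y.Z' line found")
    quote = match.group(1)
    major, minor, patch = (int(g) for g in match.group(2, 3, 4))
    if part == "major":
        major, minor, patch = major + 1, 0, 0
    elif part == "minor":
        minor, patch = minor + 1, 0
    elif part == "patch":
        patch += 1
    else:
        raise ValueError(f"unknown part: {part!r}")
    new_line = f"version: {quote}{major}.{minor}.{patch}{quote}"
    # Replace only the matched line; the rest of Chart.yaml is untouched.
    return chart_yaml[: match.start()] + new_line + chart_yaml[match.end():]
```

Working on the raw text (rather than re-serializing YAML) keeps comments and key order in `Chart.yaml` intact, which matters when the change is committed back to git and reviewed as a diff.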

After CD completes, we have a new artifact – an updated Helm chart package (tar archive) of our Kubernetes application with a new version number.

Now, we can run the `helm upgrade --install` command, creating a new revision of the application release. If something goes wrong, we can always roll back the failed release to the previous revision. For the sake of safety, I suggest first running `helm diff` (using the helm diff plugin), or at least running with the `--dry-run` flag, and inspecting the difference between the new release version and the already installed revision. If you are OK with the upcoming changes, accept them and run the `helm upgrade --install` command without the `--dry-run` flag.
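To make this diff-first routine repeatable, the commands can be assembled in code. The following is a minimal Python sketch that only builds argument lists for `helm upgrade --install` (with `--version` and, optionally, `--dry-run`), for the helm-diff plugin’s `helm diff upgrade`, and for `helm rollback`. The helper names are hypothetical, and a chart reference like `production/codefresh` assumes a chart repository added under the name `production`.

```python
from typing import List


def upgrade_cmd(release: str, chart: str, version: str,
                dry_run: bool = False) -> List[str]:
    """argv for `helm upgrade --install` of an exact chart version."""
    cmd = ["helm", "upgrade", "--install", release, chart, "--version", version]
    if dry_run:
        # Render the release without applying it to the cluster.
        cmd.append("--dry-run")
    return cmd


def diff_cmd(release: str, chart: str, version: str) -> List[str]:
    """argv for the helm-diff plugin: show what an upgrade would change."""
    return ["helm", "diff", "upgrade", release, chart, "--version", version]


def rollback_cmd(release: str, revision: int) -> List[str]:
    """argv for rolling a release back to an earlier revision."""
    return ["helm", "rollback", release, str(revision)]


def release_plan(release: str, chart: str, version: str,
                 use_diff: bool = True) -> List[List[str]]:
    """Preview first (helm diff, or a dry run as a fallback), then the real upgrade."""
    preview = (diff_cmd(release, chart, version) if use_diff
               else upgrade_cmd(release, chart, version, dry_run=True))
    return [preview, upgrade_cmd(release, chart, version)]
```

Each list can be passed to `subprocess.run(cmd, check=True)`. Keeping the preview as a separate command leaves room for a human (or, later, an automated check) to approve the upgrade before it runs.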

Kubernetes Continuous Deployment (CD)

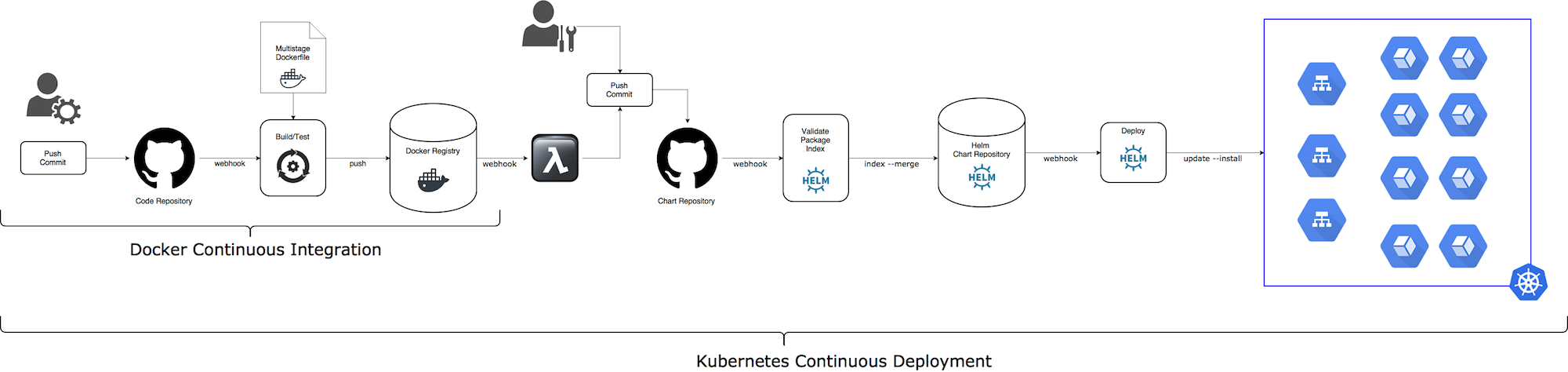

Based on the definition above, to achieve Continuous Deployment we should try to avoid all manual steps besides git push for code and configuration changes. All actions that run after git push should be 100% automated and deliver all changes to the corresponding runtime environment.

Let’s take a look at the manual steps from the “Continuous Delivery” pipeline and think about how we can automate them.

Automate: Update microservice imageTag after successful docker push

After a new Docker image for a microservice is pushed to a Docker Registry, we would like to update the microservice Helm chart with the new Docker image tag. There are at least two options to do this:

- Add a Docker Registry WebHook handler (for example, using AWS Lambda). Take the new image tag from the DockerHub `push` event payload and update the corresponding `imageTag` in the application Helm chart. For GitHub, we can use the GitHub API to update a single file, or bash scripting with a mixture of `sed` and `git` commands.

- Add an additional step to every microservice CI pipeline, after the `docker push` step, to update the corresponding `imageTag` for the microservice Helm chart.
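For the WebHook option, the handler boils down to two pieces: read the repository name and tag from the push payload, then rewrite the matching `imageTag` in the chart’s values file (the second piece would serve the CI-step option as well). The Python sketch below assumes the Docker Hub payload fields `repository.repo_name` and `push_data.tag`, a hypothetical values.yaml layout with one top-level key per microservice, and a hypothetical convention that this key equals the image name. Committing the result back (via the GitHub API or `git`) is left out.

```python
import json
import re
from typing import Tuple


def parse_dockerhub_push(body: str) -> Tuple[str, str]:
    """Return (repo_name, tag) from a Docker Hub push webhook body.

    Assumes the payload carries `repository.repo_name` and `push_data.tag`.
    """
    payload = json.loads(body)
    return payload["repository"]["repo_name"], payload["push_data"]["tag"]


def set_image_tag(values_yaml: str, service: str, tag: str) -> str:
    """Rewrite `imageTag` inside the top-level `<service>:` block.

    Line-based, much like a sed one-liner. Assumes a hypothetical layout:
        api:
          imageTag: "1.0.0"
    """
    out, in_block, updated = [], False, False
    for line in values_yaml.splitlines(keepends=True):
        if line.strip() and not line[0].isspace():
            # A non-indented line opens a new top-level block.
            in_block = line.rstrip() == f"{service}:"
        elif in_block and re.match(r"\s+imageTag:", line):
            indent = line[: len(line) - len(line.lstrip())]
            newline = "\n" if line.endswith("\n") else ""
            line = f'{indent}imageTag: "{tag}"{newline}'
            updated = True
        out.append(line)
    if not updated:
        raise KeyError(f"no imageTag found under '{service}:'")
    return "".join(out)


def handle_push(body: str, values_yaml: str) -> str:
    """Webhook handler body: return values.yaml with the pushed tag applied."""
    repo_name, tag = parse_dockerhub_push(body)
    # Hypothetical convention: the values.yaml key equals the image name,
    # e.g. "acme/api" -> "api".
    service = repo_name.split("/")[-1]
    return set_image_tag(values_yaml, service, tag)
```

Raising when no `imageTag` was found makes a mismatch between image names and chart keys fail loudly instead of silently leaving an old tag in place.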

Automate: Deploy Application Helm chart

After a new chart version is uploaded to a chart repository, we would like to deploy it automatically to the “linked” runtime environment and roll back on failure.

A Helm chart repository is not a real server that is aware of the charts deployed to it: any web server that can serve static files can act as a Helm chart repository. In general, I like simplicity, but sometimes it leads to a naive design and a lack of basic functionality. With Helm chart repositories, this is the case.

Therefore, I recommend using a web server that provides a good API and can send notifications about content changes without a polling loop. Amazon S3 can be a good choice for a Helm chart repository, since it can emit event notifications when new objects are created.
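With S3, a bucket event notification can invoke a Lambda function whenever a new object is created. Here is a minimal Python sketch of such a handler, assuming the standard S3 event shape (`Records[].eventName`, `Records[].s3.bucket.name`, `Records[].s3.object.key`) and the `<chart-name>-<version>.tgz` file naming that `helm package` produces. `new_chart_versions` and `ChartUpload` are hypothetical names. With one bucket per environment, as in the tip above, the bucket name identifies the “linked” runtime environment.

```python
import re
from typing import List, NamedTuple
from urllib.parse import unquote_plus

# `helm package` names archives <chart-name>-<version>.tgz; the lazy name
# group lets chart names contain hyphens, e.g. "codefresh-api-1.4.3.tgz".
_CHART_ARCHIVE = re.compile(
    r"^(?P<name>.+?)-(?P<version>\d+\.\d+\.\d+(?:[-+][0-9A-Za-z.+-]*)?)\.tgz$"
)


class ChartUpload(NamedTuple):
    bucket: str
    name: str
    version: str


def new_chart_versions(event: dict) -> List[ChartUpload]:
    """Extract uploaded chart packages from an S3 event notification.

    Updates to index.yaml and deletions are ignored.
    """
    uploads = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        match = _CHART_ARCHIVE.match(key.rsplit("/", 1)[-1])
        if match:
            uploads.append(ChartUpload(bucket, match["name"], match["version"]))
    return uploads


def handler(event, context):
    """AWS Lambda entry point (hypothetical wiring)."""
    for upload in new_chart_versions(event):
        # Hand off to a deploy step for the environment linked to this bucket.
        print(f"new chart {upload.name} {upload.version} in s3://{upload.bucket}")
```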

Once you have a chart repository up and running and can get notifications about content updates (as a WebHook or with a polling loop), you can take the next steps towards Kubernetes Continuous Deployment:

- Get updates from the Helm chart repository: a new chart version

- Run the `helm upgrade --install` command to update/install the new application version on the “linked” runtime environment

- Run post-install and in-cluster integration tests

- Roll back to the previous application revision on any “failure”
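The last three steps can be sketched as a single function. This is a minimal Python sketch, not our actual implementation: it runs `helm upgrade --install --wait`, then `helm test` as a stand-in for post-install and in-cluster tests, and calls `helm rollback` to a known previous revision if either step fails. The `deploy` name, the injectable `runner`, and passing `previous_revision` explicitly (for example, read from `helm history`) are assumptions.

```python
import subprocess
from typing import Callable, List, Sequence

# A runner executes one command and returns its exit code.
Runner = Callable[[Sequence[str]], int]


def run(cmd: Sequence[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def deploy(release: str, chart: str, version: str, previous_revision: int,
           runner: Runner = run) -> bool:
    """Upgrade, run in-cluster tests, and roll back on any failure.

    Returns True if the new version stays deployed. A previous_revision of 0
    means a first install, so there is nothing to roll back to.
    """
    steps: List[List[str]] = [
        ["helm", "upgrade", "--install", release, chart,
         "--version", version, "--wait"],
        ["helm", "test", release],
    ]
    for cmd in steps:
        if runner(cmd) != 0:
            if previous_revision > 0:
                runner(["helm", "rollback", release, str(previous_revision)])
            return False
    return True
```

Injecting the runner keeps the rollback logic testable without a cluster; in production the default `run` shells out to the real `helm` binary.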

Summary

This post describes the Kubernetes “Continuous Delivery” pipeline we have managed to set up so far. There are still things we need to improve and change in order to achieve fully automated Continuous Deployment.

We constantly evolve Codefresh so that it helps us and our customers build and maintain effective Kubernetes CD pipelines. Give it a try and let us know how we can improve it.

I hope you find this post useful. I look forward to your comments and any questions you have.
