Argo Rollouts is a progressive delivery controller created for Kubernetes. It allows you to deploy your application with minimal/zero downtime by adopting a gradual way of deploying instead of taking an “all at once” approach.

Argo Rollouts supercharges your Kubernetes cluster. In addition to rolling updates, you can now do:

- Blue/green deployments

- Canary deployments

- A/B tests

- Automatic rollbacks

- Integrated Metric analysis

In the previous article, we looked at blue/green deployments. In this article, we will walk through an example that combines canary deployments with Prometheus metrics to automatically roll back an application if the new version does not pass specific metric thresholds.

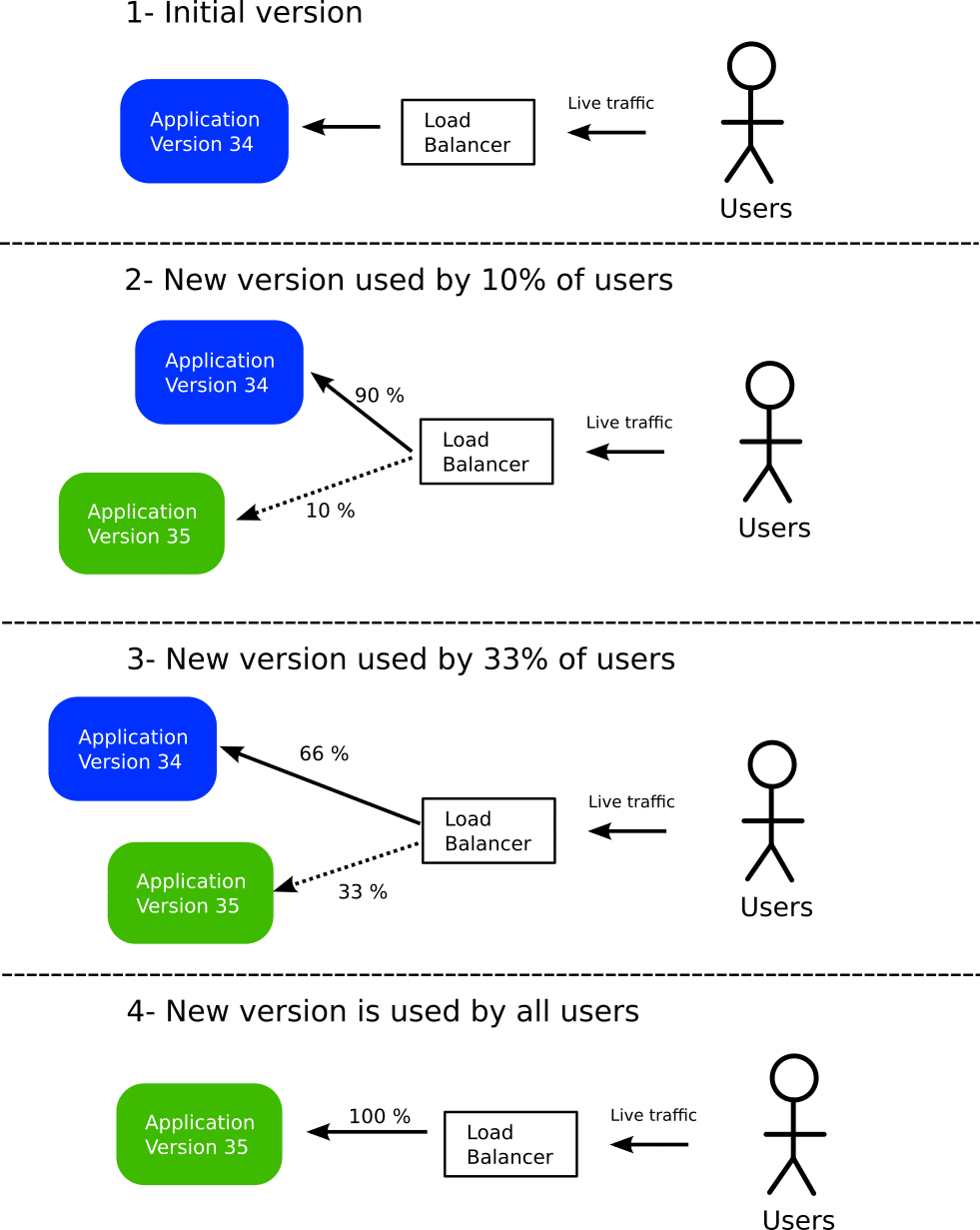

How Canary deployments work

Canary deployments are a bit more advanced than blue/green deployments. With canary deployments, instead of having a single critical moment when all traffic is switched from the old version to the new version, traffic is moved to the new version gradually, starting with a small subset of live traffic.

Here is the theory:

In the beginning, we have a single version of the application running. All live traffic from users is sent to that version. A key point here is that a smart proxy/load balancer acts as an intermediary between users and infrastructure. In the original state, this load balancer sends 100% of the traffic to the stable version.

In the next stage, we launch a second version of the application. We then instruct the load balancer to route 10% of the traffic to the new version. This means that if the new version has issues, only a small number of users will be affected. This version acts as a “canary” that will notify us if something is wrong with the new deployment well in advance of a full rollout.

If we think the new application reacts well to live traffic, we can increase the percentage of users that are redirected to it. In the second stage, we have chosen to route one third of all traffic. This means that we still have a failsafe and can always switch all traffic back to the previous version, but we can also see how the new version behaves under a representative amount of traffic.

In the last step, we modify the load balancer and send 100% of the traffic to the new version. We can now discard the old version completely. We are back to the original state.

Notice that the number of stages, as well as percentage of traffic, is user defined. The numbers we used (10% at stage 1 and 33% at stage 2) are just an example.

Depending on your network setup, you can also make more advanced routing decisions: instead of blindly redirecting a subset of traffic, you can choose who gets to see the new version first. Common examples are users from a specific geographical area or users on a particular platform (e.g. mobile users first).
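The staged traffic shift described above can be illustrated with a small simulation. This is only a sketch of weighted random routing, assuming the example weights from this article (10%, 33%, 100%); the function names are hypothetical and a real load balancer or service mesh will implement the split differently.

```python
import random
from collections import Counter

# Canary weight (percent of traffic sent to the new version) at each stage.
# The values mirror the article's example; any schedule would work.
STAGES = [0, 10, 33, 100]


def route(canary_weight: int, rng: random.Random) -> str:
    """Pick a backend for one request, given the canary weight in percent."""
    return "canary" if rng.random() * 100 < canary_weight else "stable"


def simulate(canary_weight: int, requests: int = 10_000, seed: int = 42) -> Counter:
    """Route `requests` simulated requests and count hits per backend."""
    rng = random.Random(seed)
    return Counter(route(canary_weight, rng) for _ in range(requests))


if __name__ == "__main__":
    for weight in STAGES:
        hits = simulate(weight)
        share = 100 * hits["canary"] / sum(hits.values())
        print(f"weight={weight:>3}%  canary share={share:5.1f}%")
```

Because each request is routed independently, the observed canary share only approximates the configured weight, which is one reason a representative amount of traffic is needed before judging the new version.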

Triple service canaries with Argo Rollouts

Now that we have seen how canary deployments work in general, let’s see what Argo Rollouts does with Kubernetes.

Argo Rollouts is a Kubernetes controller and has several options for canary deployments. Some of the most interesting capabilities are the native integration with networking solutions (for the traffic split) and with metric solutions for automatic promotions between canary stages.

One of the most basic features, however, is the way Argo Rollouts treats Kubernetes services during a canary release. Whenever a canary is active, Argo Rollouts can create 3 services for you:

- A service that gets traffic to both the old and the new version. This is what your live users will probably use

- A service that sends traffic only to the canary/new version. You can use this service to gather your metrics or run smoke tests or give it only to some users that want to get access to the new release as fast as possible (for example your internal company users)

- A service that sends traffic only to the last/stable version. You can use this service if you want to keep some users on the old version for as long as possible (e.g. users that you don’t want to see affected at all by the canary)

You can easily install the operator in your cluster by following the instructions.

You can find the example application at https://github.com/codefresh-contrib/argo-rollout-canary-sample-app. It is a simple Golang application that exposes some basic Prometheus metrics and has no other dependencies.

Before you use the example you need to install an ingress or service mesh in your cluster that will be used for the traffic split. Argo Rollouts supports several solutions including SMI support. We will use Linkerd for our example.

To perform the initial deployment, clone the git repository locally and edit the file canary-manual-approval/rollout.yaml by replacing CF_SHORT_REVISION with any available tag from Docker Hub (for example c323c7b).

Save the file and then perform the first deployment with

kubectl create ns canary
kubectl apply -f ./canary-manual-approval -n canary

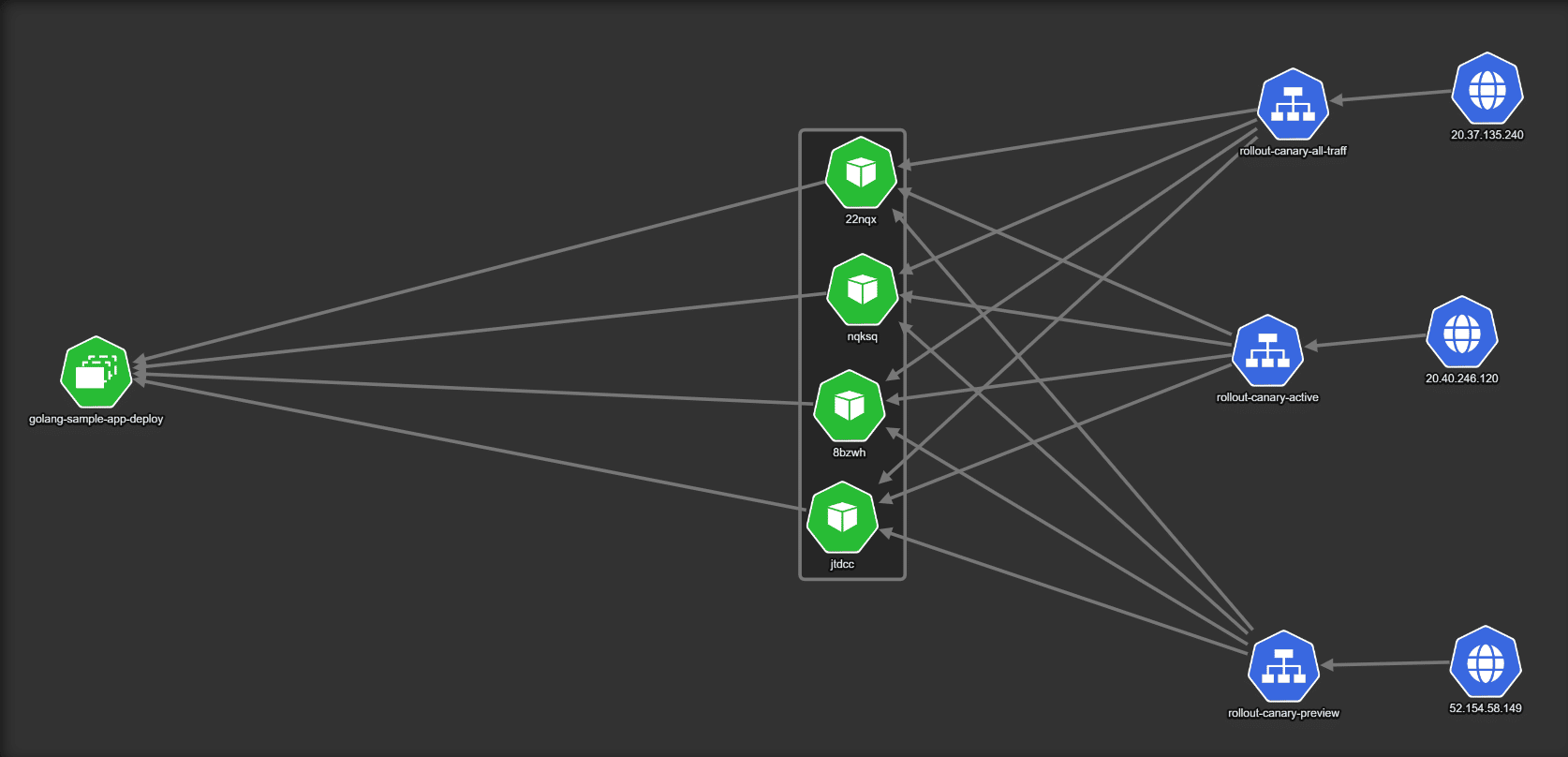

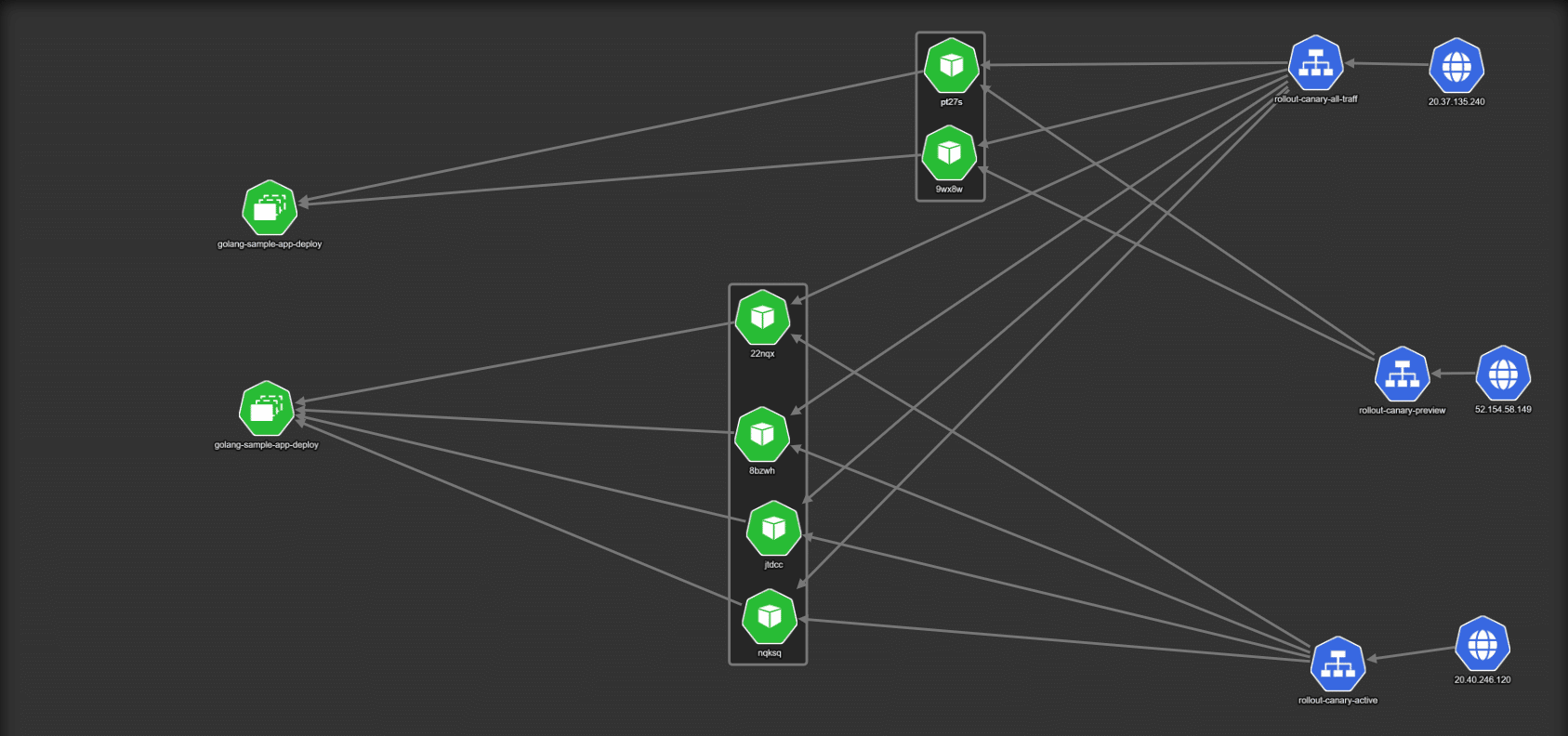

Here is the initial state using the kubeview graphical tool.

The application has 4 pods up and running (shown in the middle of the picture). There are 3 services that point to them. These are “rollout-canary-active”, “rollout-canary-preview” and “rollout-canary-all-traffic”. Your live traffic should use the last one.

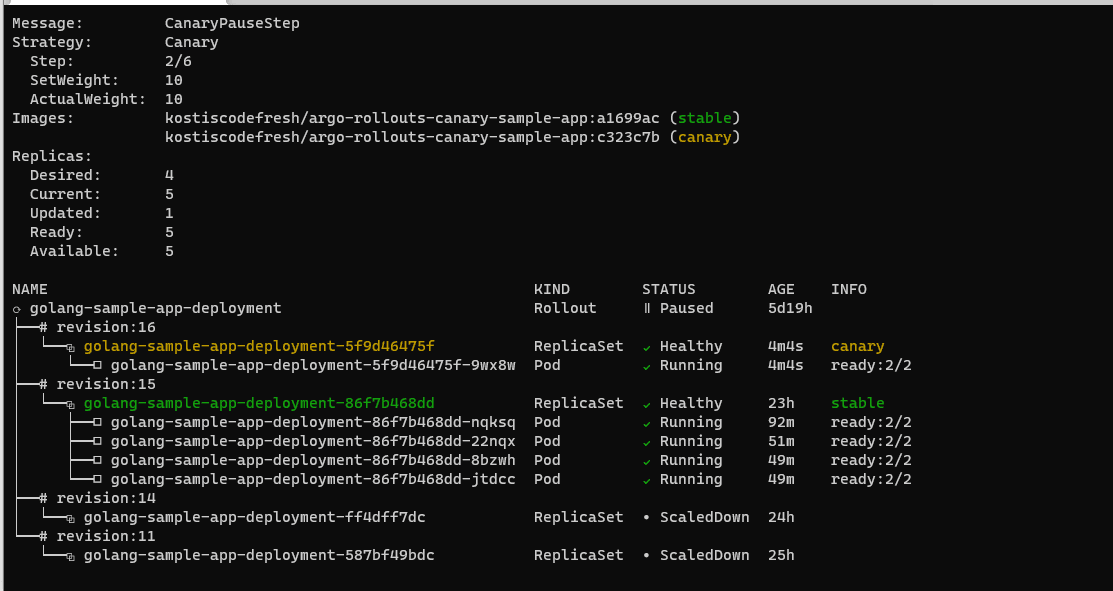

If you also want to monitor things from the command line, be sure to install the Argo Rollouts CLI and run

kubectl argo rollouts get rollout golang-sample-app-deployment --watch -n canary

The next step is to create a new version of the application. You can either change the image tag in the manifest again (and run kubectl apply again) or simply use the shortcut offered by Argo Rollouts:

kubectl argo rollouts set image golang-sample-app-deployment spring-sample-app-container=kostiscodefresh/argo-rollouts-canary-sample-app:8c67194 -n canary

The image tag you use is arbitrary. It just needs to be different than the current one. As soon as Argo Rollouts detects the change it will do the following:

- Create a brand new replica set with the specified pods for the canary release

- Redirect the “preview” service to the new pods

- Keep the “active” service to the old pods (the existing version)

- Redirect the “all-traffic” service to both the active and new version.
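The service behaviour listed above can be modelled in a few lines. This is a simplified sketch, not how Argo Rollouts is implemented: the `Pod` class and the pod names are hypothetical, and only the three service names come from the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    name: str
    version: str  # "stable" or "canary"


def service_endpoints(pods: list[Pod]) -> dict[str, list[str]]:
    """Return the pod names behind each of the three services."""
    stable = [p.name for p in pods if p.version == "stable"]
    canary = [p.name for p in pods if p.version == "canary"]
    return {
        # Always the existing (stable) version
        "rollout-canary-active": stable,
        # The canary pods; with no canary running, the same pods as "active"
        "rollout-canary-preview": canary or stable,
        # Everything that can receive live traffic
        "rollout-canary-all-traffic": stable + canary,
    }


if __name__ == "__main__":
    # Hypothetical pod names: four stable pods plus one canary pod
    pods = [Pod(f"old-{i}", "stable") for i in range(1, 5)] + [Pod("new-1", "canary")]
    for service, names in service_endpoints(pods).items():
        print(f"{service}: {names}")
```

When no canary is running, all three services resolve to the same pods, which matches the initial state shown earlier.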

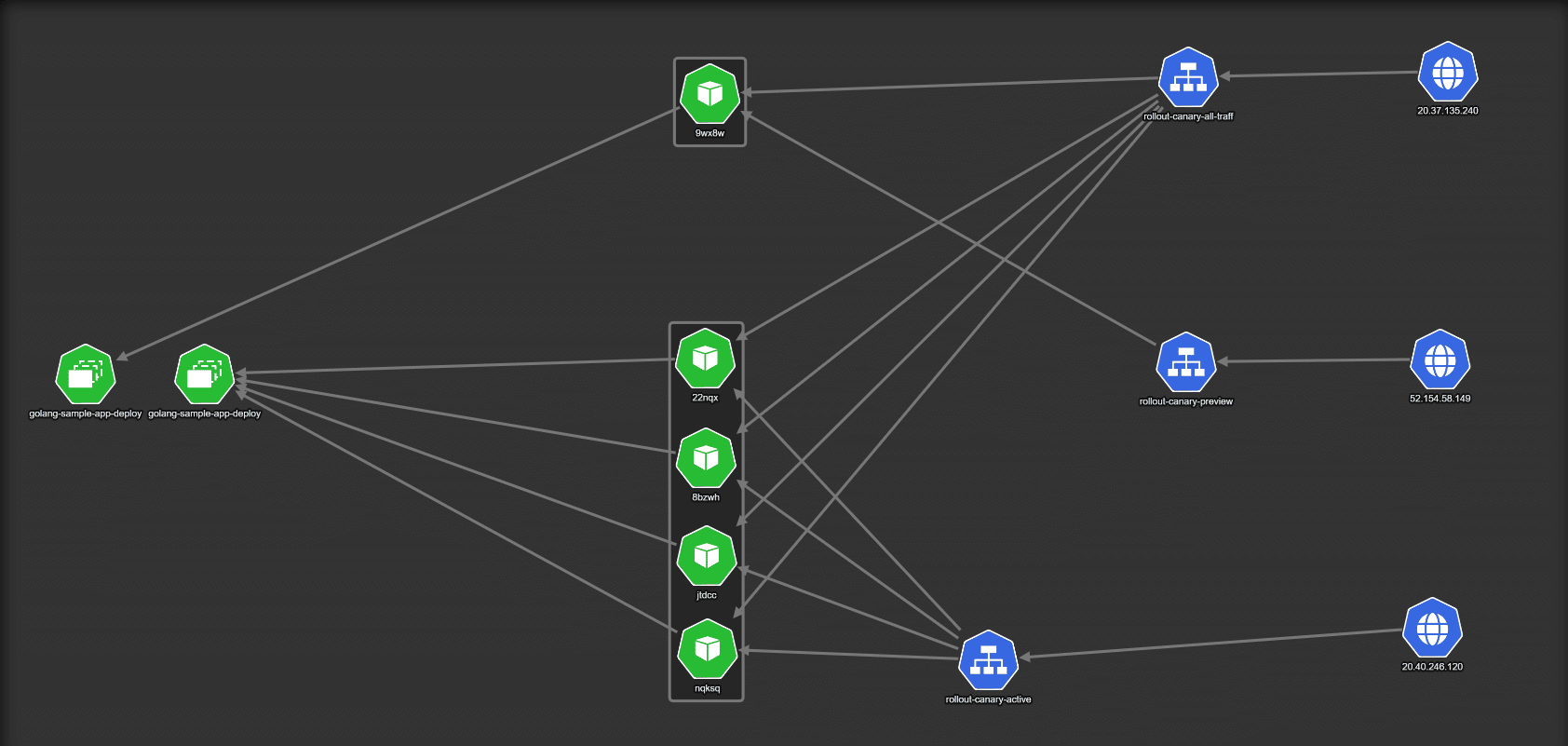

Here is how it looks in Kubeview:

The CLI will also report the split of traffic:

You can also see from the “setWeight” option that the canary only gets 10% of the traffic right now.

So now only a subset of all users can see the new version. At this stage, it is your responsibility to decide if the canary version is ok. Maybe you want to run some smoke tests or look at your metrics for the canary (we will automate this in the next section).

Once you are happy with the canary you can move more live traffic to it by promoting it.

kubectl argo rollouts promote golang-sample-app-deployment -n canary

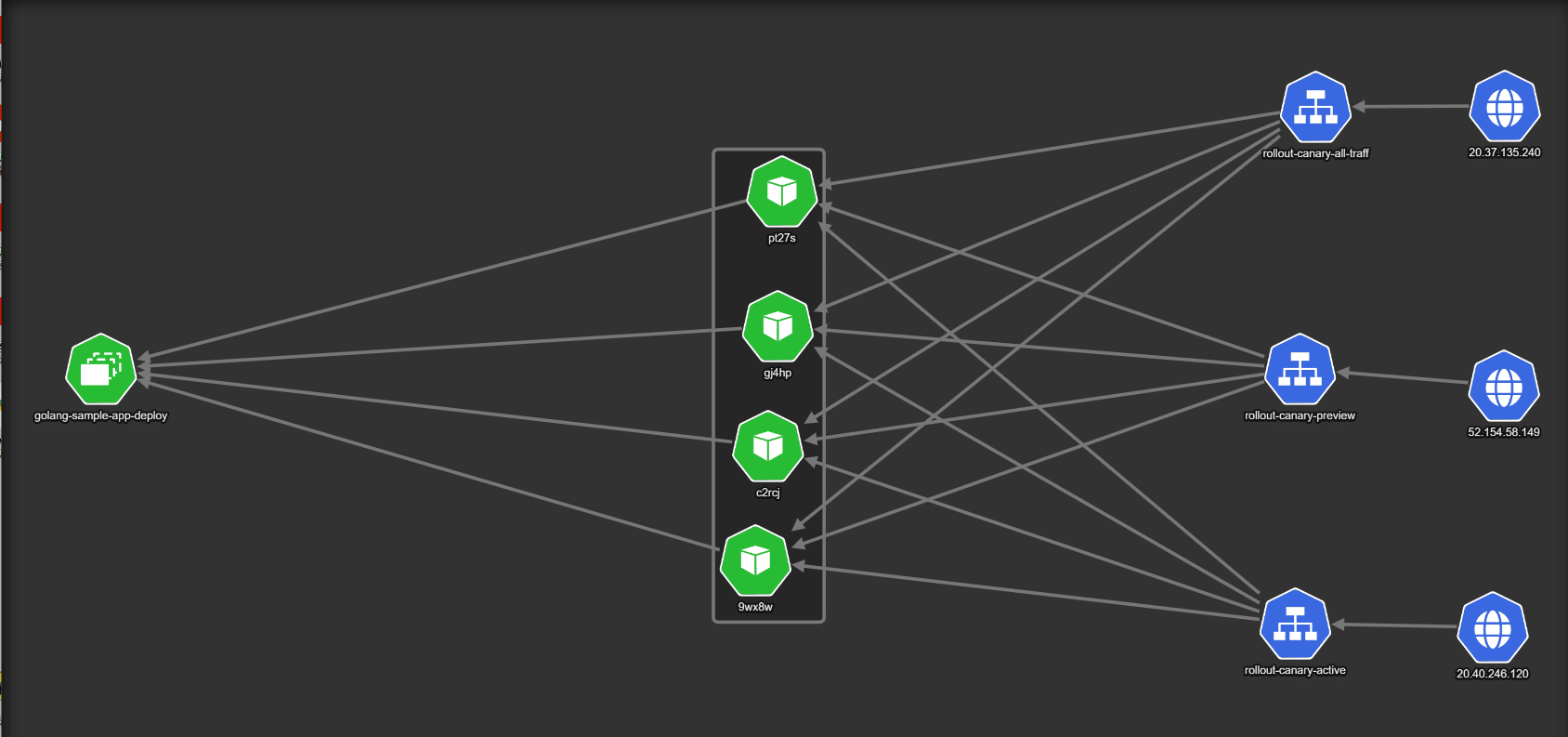

Argo Rollouts will create more pods for the canary release and shift more traffic to it:

At this stage, 33% of traffic is sent to the canary pods as defined in the Rollout specification:

With this amount of traffic, more users are exposed to the new version and any immediate problems should be easier to spot in your metrics or other monitoring mechanisms.

Assuming that everything still looks good you can promote the canary a second time:

kubectl argo rollouts promote golang-sample-app-deployment -n canary

Argo Rollouts will now shift all traffic to the canary pods and after a while discard the previous version:

We are now back to the original state where all 3 services point at the new version.

A new deployment will repeat the whole process.

If for some reason your smoke tests fail, and you don’t want to promote the new version you can abort the whole process with

kubectl argo rollouts abort golang-sample-app-deployment -n canary

You can also go back to a previous version with

kubectl argo rollouts undo golang-sample-app-deployment -n canary
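The manual promote/abort flow above behaves like a small state machine. The following toy model is a sketch under that assumption: the class name and the status strings are invented for illustration and are not Argo Rollouts identifiers, and the weights are the ones used in this example.

```python
class CanaryRollout:
    """Toy model of a canary that pauses at each weight until promoted."""

    def __init__(self, weights=(10, 33)):
        self.weights = list(weights)  # canary weight (%) at each paused step
        self.step = 0                 # index of the current paused step
        self.status = "paused"        # "paused", "promoted" or "aborted"

    @property
    def canary_weight(self) -> int:
        if self.status == "promoted":
            return 100  # the canary has become the new stable version
        if self.status == "aborted":
            return 0    # all traffic is back on the stable version
        return self.weights[self.step]

    def promote(self) -> None:
        """Move to the next step, or finish the rollout after the last one."""
        if self.status != "paused":
            raise RuntimeError(f"cannot promote a rollout that is {self.status}")
        if self.step + 1 < len(self.weights):
            self.step += 1
        else:
            self.status = "promoted"

    def abort(self) -> None:
        """Stop the canary; only meaningful while it is still in progress."""
        if self.status == "paused":
            self.status = "aborted"


if __name__ == "__main__":
    rollout = CanaryRollout()
    print(rollout.canary_weight)  # 10
    rollout.promote()
    print(rollout.canary_weight)  # 33
    rollout.promote()
    print(rollout.canary_weight)  # 100
```

Two promotions take the canary from 10% to 33% and then to 100%, mirroring the two `promote` commands used in the walkthrough.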

Argo Rollouts has more options that allow you to choose the number of versions that stay behind, the amount of time each version is kept, which version to roll back to, and so on.

See the whole rollout spec for more details.

Notice also that, for simplicity, the three services in the example are powered by Load Balancers. Check the pricing of your cloud provider before running this example on your own and be sure to clean up everything after you finish.

Automating everything with Codefresh and Prometheus

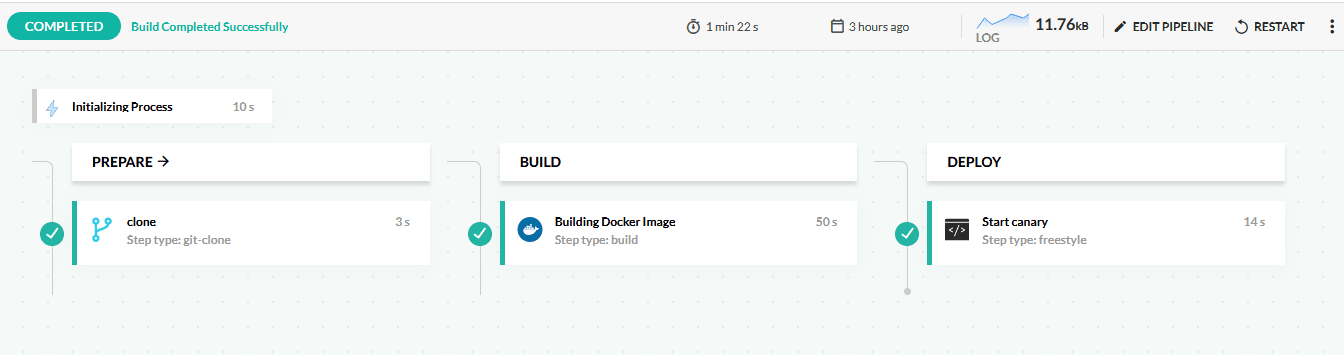

Now that we have seen how you can perform canary deployments manually, it is time to automate the deployment process with Codefresh pipelines.

We could write a similar pipeline as in our blue/green example that pauses the pipeline at each canary step and then goes to the next stage by waiting for manual approval. You can find an example pipeline at https://github.com/codefresh-contrib/argo-rollout-canary-sample-app/blob/main/canary-manual-approval/codefresh.yaml

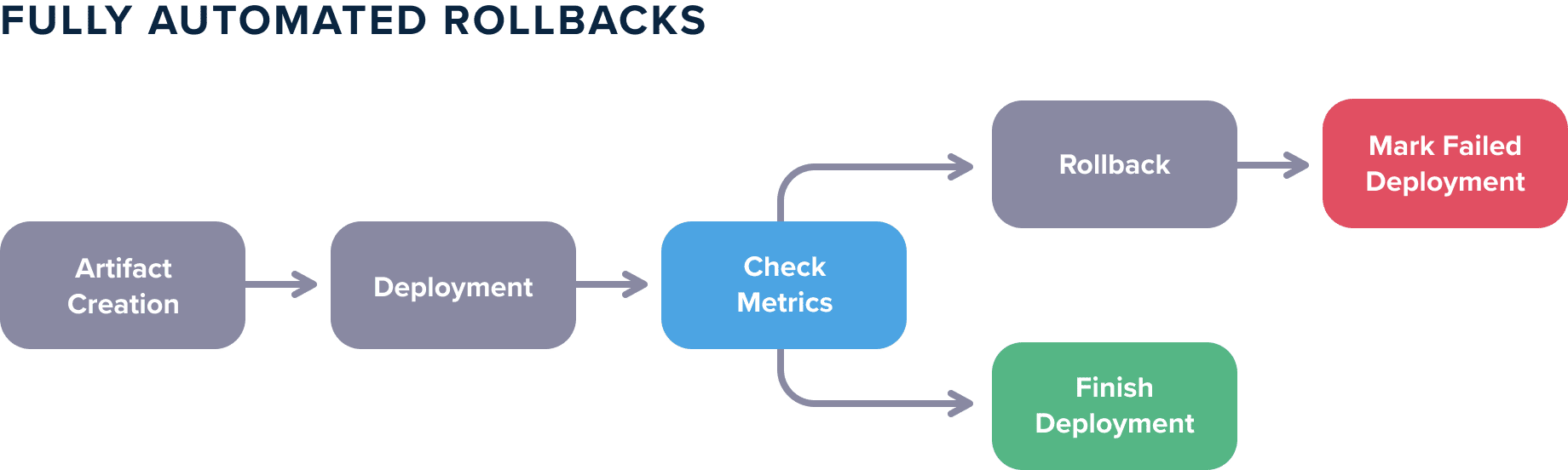

Remember, however, that the whole point of automation is to remove as many manual steps as possible. Thankfully, Argo Rollouts has native support for several metric providers. By evaluating the metrics of your application at all canary stages, we can completely automate the whole process by having Argo Rollouts automatically promote/demote a canary according to your metric criteria.
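Conceptually, metric-driven promotion replaces the human approval at each stage with a check. The loop below is a minimal sketch of that idea, not the controller's actual logic; `analysis_passes` is a hypothetical callback standing in for whatever metric evaluation you use.

```python
from typing import Callable, Sequence


def auto_canary(weights: Sequence[int], analysis_passes: Callable[[int], bool]) -> str:
    """Advance through canary weights, aborting on the first failed analysis."""
    for weight in weights:
        print(f"canary at {weight}% of traffic")
        if not analysis_passes(weight):
            print("analysis failed, shifting all traffic back to stable")
            return "aborted"
    print("all analyses passed, canary becomes the new stable version")
    return "promoted"


if __name__ == "__main__":
    # Hypothetical check that starts failing once the canary gets more load
    auto_canary([10, 33], lambda weight: weight < 33)
```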

Here is the pipeline that we will create:

Notice how simple the pipeline is. Argo Rollouts will take care of all promotions automatically behind the scenes.

Our example is already instrumented with Prometheus metrics. We will add an Analysis in our canary deployment. You can see the full details at https://github.com/codefresh-contrib/argo-rollout-canary-sample-app/blob/main/canary-with-metrics/analysis.yaml

apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: success-rate
spec:
  args:
  - name: service-name
  metrics:
  - name: success-rate
    interval: 2m
    count: 2
    # NOTE: prometheus queries return results in the form of a vector.
    # So it is common to access the index 0 of the returned array to obtain the value
    successCondition: result[0] >= 0.95
    provider:
      prometheus:
        address: http://prom-release-prometheus-server.prom.svc.cluster.local:80
        query: sum(response_status{app="{{args.service-name}}",role="canary",status=~"2.*"})/sum(response_status{app="{{args.service-name}}",role="canary"})

This analysis specifies that we move to the next stage of the canary only if a particular PromQL query (the number of requests that return a 2xx HTTP status divided by the number of all requests) returns at least 0.95, that is, a 95% success rate.

If at any point metrics fall below this threshold, the canary will be aborted.
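To make the `result[0] >= 0.95` condition more concrete, here is a sketch of the same check in Python. It assumes the standard JSON shape of a Prometheus instant-vector query response; the sample payload and its numbers are made up, the function names are hypothetical, and treating an empty result as a failure is a choice of this sketch rather than documented Argo Rollouts behaviour.

```python
import json

# Made-up response body in the shape returned by Prometheus' /api/v1/query
# endpoint for an instant-vector query.
SAMPLE_RESPONSE = """
{
  "status": "success",
  "data": {
    "resultType": "vector",
    "result": [
      {"metric": {}, "value": [1700000000.0, "0.97"]}
    ]
  }
}
"""


def vector_values(body: str) -> list[float]:
    """Extract the sample values from an instant-vector query response."""
    payload = json.loads(body)
    if payload["status"] != "success":
        raise ValueError("query failed")
    # Each element's "value" is a [timestamp, "value as string"] pair.
    return [float(sample["value"][1]) for sample in payload["data"]["result"]]


def success_condition(result: list[float], threshold: float = 0.95) -> bool:
    """Python equivalent of the template's `result[0] >= 0.95`."""
    return bool(result) and result[0] >= threshold


def analysis_passes(measurements: list[list[float]]) -> bool:
    """True only if every measurement satisfies the success condition."""
    return all(success_condition(m) for m in measurements)


if __name__ == "__main__":
    first = vector_values(SAMPLE_RESPONSE)
    second = [0.90]  # hypothetical second measurement, below the threshold
    print(success_condition(first))
    print(analysis_passes([first, second]))
```

With `count: 2` the template takes two measurements, so the second list passed to `analysis_passes` stands in for the measurement taken one interval later.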

Here is the whole pipeline:

version: "1.0"
stages:
  - prepare
  - build
  - deploy
steps:
  clone:
    type: "git-clone"
    stage: prepare
    description: "Cloning main repository..."
    repo: '${{CF_REPO_OWNER}}/${{CF_REPO_NAME}}'
    revision: "${{CF_BRANCH}}"
  build_app_image:
    title: Building Docker Image
    type: build
    stage: build
    image_name: kostiscodefresh/argo-rollouts-canary-sample-app
    working_directory: "${{clone}}"
    tags:
      - "latest"
      - '${{CF_SHORT_REVISION}}'
    dockerfile: Dockerfile
    build_arguments:
      - git_hash=${{CF_SHORT_REVISION}}
  start_deployment:
    title: Start canary
    stage: deploy
    image: codefresh/cf-deploy-kubernetes:master
    working_directory: "${{clone}}"
    commands:
      - /cf-deploy-kubernetes ./canary-with-metrics/service.yaml
      - /cf-deploy-kubernetes ./canary-with-metrics/service-preview.yaml
      - /cf-deploy-kubernetes ./canary-with-metrics/service-all.yaml
      - /cf-deploy-kubernetes ./canary-with-metrics/analysis.yaml
      - /cf-deploy-kubernetes ./canary-with-metrics/rollout.yaml
    environment:
      - KUBECONTEXT=mydemoAkscluster@BizSpark Plus
      - KUBERNETES_NAMESPACE=canary

You now have true Continuous Deployment! All deployments are fully automatic and “bad” application versions (that don’t pass our metrics) will be reverted automatically.

For more details on Canary deployments as well as the example with manual approval see the documentation page.
