What Are Kubernetes Liveness Probes?

Kubernetes liveness probes are periodic health checks that determine whether a container running in a Kubernetes cluster is still working correctly. The kubelet, the node agent that manages the container, runs each probe and acts on the result. If a liveness probe detects that a container is unhealthy or unresponsive, the kubelet kills the container, which is then restarted according to the pod's restart policy.

The value of liveness probes lies in their ability to manage container health automatically, without manual intervention. Problematic containers are detected and restarted quickly, which reduces downtime and improves the availability and reliability of your applications. Using liveness probes can significantly enhance the reliability of your Kubernetes deployments.

This is part of a series of articles about Kubernetes management.

Use Cases for Liveness Probes in Kubernetes

Here are a few common cases where liveness probes can come in useful:

- Handling application deadlocks: Liveness probes are useful in detecting situations where an application is stuck in a deadlock. For instance, if an application enters an infinite loop or gets stuck waiting for a resource, a probe that exercises the stuck code path will start failing, prompting Kubernetes to restart the container and restore normal operations.

- Managing memory leaks: In scenarios where applications suffer from memory leaks that degrade performance over time, liveness probes can help by detecting when the container becomes unresponsive or too slow to respond. Kubernetes can then restart the container, mitigating the impact of the memory leak.

- Monitoring long-running processes: Liveness probes are effective in ensuring the health of long-running processes. A process that crashes outright ends its container anyway, but a background job or service that hangs or stops making progress after an extended period keeps running. The probe detects this kind of failure, allowing Kubernetes to restart the container and maintain service continuity.

- Ensuring web server availability: For web applications, liveness probes can monitor the availability of the web server. If the server stops responding due to an error or overload, the liveness probe will trigger a container restart, ensuring that the application remains accessible.

- Verifying custom health checks: Liveness probes can be customized to execute specific commands or scripts that perform detailed health checks on an application. This allows for granular monitoring and ensures that the application meets specific operational criteria before being considered healthy.
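As an illustration of a custom check, the sketch below uses an exec probe to catch a long-running worker that is still running but no longer making progress. It assumes a hypothetical worker that regularly touches /tmp/heartbeat and an image whose find command supports the -mmin option; the file path, the 2-minute window, and the timing values are illustrative assumptions.

```yaml
livenessProbe:
  exec:
    command:
    - /bin/sh
    - -c
    # Hypothetical check: find prints /tmp/heartbeat only if it was modified
    # within roughly the last 2 minutes; test -n exits non-zero when the
    # output is empty, including when the file does not exist.
    - test -n "$(find /tmp/heartbeat -mmin -2)"
  initialDelaySeconds: 30   # illustrative: give the worker time to create the file
  periodSeconds: 30         # illustrative: check every 30 seconds
```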

How Do Liveness Probes Work?

The kubelet runs liveness probes periodically against each container that defines one, checking whether it is functioning as expected. Kubernetes supports three commonly used probe mechanisms (recent versions also offer a gRPC probe):

- HTTP GET probe: This probe sends an HTTP GET request to a specified path and port on the container. Any status code greater than or equal to 200 and less than 400 counts as success. Any other code (such as 4xx or 5xx), or no response within the timeout, counts as a failure.

- TCP socket probe: This probe attempts to open a TCP connection on a specified port of the container. If the connection is successful, the container is considered healthy. If the connection fails or times out, the container is marked as unhealthy.

- Exec probe: This probe executes a command inside the container. If the command exits with status code 0, the container is considered healthy; any non-zero exit code indicates that it is unhealthy.
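The HTTP GET and TCP socket mechanisms are configured under each container's livenessProbe field. The fragment below is a sketch of a pod's containers list; the nginx and redis images are illustrative choices, assumed to serve HTTP on port 80 and accept TCP connections on port 6379 (their default ports). The exec form appears in the tutorial later in this article.

```yaml
containers:
- name: web
  image: nginx:1.25        # illustrative image serving HTTP on port 80
  livenessProbe:
    httpGet:               # healthy if GET / returns a status from 200 to 399
      path: /
      port: 80
- name: cache
  image: redis:7           # illustrative image listening on TCP port 6379
  livenessProbe:
    tcpSocket:             # healthy if a TCP connection to port 6379 can be opened
      port: 6379
```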

Each of these probes can be configured with parameters such as initialDelaySeconds, periodSeconds, timeoutSeconds, failureThreshold, and successThreshold, allowing fine-grained control over how Kubernetes evaluates container health. For liveness probes, successThreshold must be 1.
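For reference, here is a sketch of a liveness probe with each of these parameters written out at its default value, so omitting a field behaves the same as the value shown. The /healthz path and port 8080 are hypothetical.

```yaml
livenessProbe:
  httpGet:
    path: /healthz          # hypothetical health endpoint
    port: 8080              # hypothetical container port
  initialDelaySeconds: 0    # default: start probing as soon as the container starts
  periodSeconds: 10         # default: probe every 10 seconds
  timeoutSeconds: 1         # default: each probe must answer within 1 second
  failureThreshold: 3       # default: restart after 3 consecutive failures
  successThreshold: 1       # default, and the only value allowed for liveness probes
```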

When a liveness probe fails failureThreshold times in a row, the kubelet kills the container, and it is restarted according to the pod's restartPolicy. This automated recovery helps maintain application uptime and reduces the impact of failures.

Quick Tutorial: Configuring a Kubernetes Liveness Probe

Here’s a practical example demonstrating how to configure a liveness probe using the exec type, which runs a command inside the container to determine its health status:

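```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-example
  labels:
    test: probe-container
spec:
  containers:
  - name: probe-container
    image: busybox:latest
    # Create the health file, keep it for 100 seconds, delete it,
    # then keep the container running for another 500 seconds.
    args:
    - /bin/sh
    - -c
    - touch /tmp/allgood; sleep 100; rm -f /tmp/allgood; sleep 500
    livenessProbe:
      # cat exits with 0 while /tmp/allgood exists and non-zero once it is gone.
      exec:
        command:
        - cat
        - /tmp/allgood
      initialDelaySeconds: 5   # wait 5 seconds before the first probe
      periodSeconds: 5         # then probe every 5 seconds
```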

Save the manifest above in a file called liveness.yaml and apply it with the following command:

```shell
kubectl apply -f liveness.yaml
```

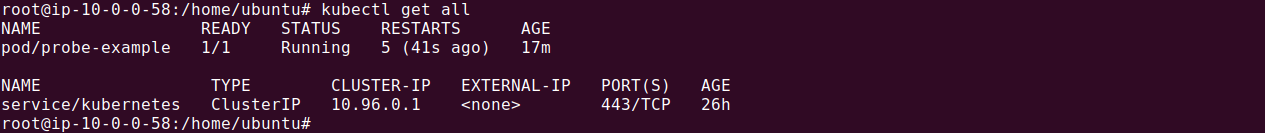

Check that the pod was created by running the following command:

```shell
kubectl get all
```

A few important details about this configuration:

- Container setup:

  - The container uses the busybox image. BusyBox combines tiny versions of many common UNIX utilities into a single small executable, which keeps the image very lightweight.

  - The args parameter specifies the command sequence the container runs:

    - touch /tmp/allgood: Creates a file named /tmp/allgood, which the probe treats as the signal that the container is healthy.

    - sleep 100: The container then waits for 100 seconds.

    - rm -f /tmp/allgood: After 100 seconds, the health file is deleted, so subsequent probes fail.

    - sleep 500: The container keeps running for another 500 seconds, giving the liveness probe time to detect the failure.

- Liveness probe configuration:

  - exec: The liveness probe runs the command cat /tmp/allgood inside the container.

  - If the file /tmp/allgood exists, the command succeeds, and the container is deemed healthy.

  - Once the file is deleted after 100 seconds, the command fails. Because failureThreshold is not set, the default of 3 applies: after three consecutive failed probes (roughly 15 seconds at a 5-second period), the kubelet kills the container and restarts it under the pod's default restartPolicy of Always. The restarted container recreates /tmp/allgood, so the cycle repeats and the pod's restart count keeps increasing.

  - initialDelaySeconds: This setting tells Kubernetes to wait 5 seconds after the container starts before performing the first probe. This delay gives the container time to initialize.

  - periodSeconds: The probe runs every 5 seconds to check the container’s health. This frequency ensures that failures are detected quickly.

This example shows how Kubernetes detects a failed container and recovers it with minimal intervention. The setup is also useful for simulating a real-world scenario in which an application fails after running for a while.

Kubernetes Liveness Probe Best Practices

When using liveness probes, focus on simplicity and lightweight design. Overly complex checks can introduce latency and inadvertently impact container performance. Setting realistic timeout values directly influences the effectiveness of liveness probes. Accurate timeout configurations ensure that false positives are minimized, and containers are only restarted when genuinely necessary.

1. Keep Probes Simple and Lightweight

Ensure your liveness probes are straightforward and minimally invasive. Probes should not add significant overhead to the container’s normal operations. For example, a simple HTTP GET request to a health check endpoint is often sufficient to determine the container’s status.

Complex probe logic or resource-heavy checks can impact performance and may result in false positives. Simplifying probe design ensures they achieve their primary objective: verifying if the container is alive without adding unnecessary load or complexity.

2. Set Realistic Timeout Values

Setting correct timeout values (timeoutSeconds, which defaults to 1 second) for liveness probes is crucial for accurate health detection. If the timeout is too short, you risk false positives, where the probe reports a healthy but slow container as failing. Conversely, a long timeout delays the detection and handling of actual failures.

For effective monitoring, align timeout settings with your application’s response characteristics. Consider the typical response time of your health check endpoint under normal and peak load conditions. This approach minimizes false positives and ensures timely intervention when real issues arise.
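A minimal sketch of this approach, assuming a hypothetical /healthz endpoint on port 8080 that usually answers within a few hundred milliseconds but can take up to about 2 seconds under peak load (assumed numbers for illustration):

```yaml
livenessProbe:
  httpGet:
    path: /healthz      # hypothetical endpoint
    port: 8080          # hypothetical port
  # Assumed peak response time is about 2 seconds, so the timeout is
  # raised from the 1-second default with some headroom.
  timeoutSeconds: 3
  periodSeconds: 10
```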

3. Set Failure Thresholds

Defining appropriate failure thresholds (failureThreshold, which defaults to 3) in your liveness probe configuration helps control how quickly Kubernetes reacts to container issues. If thresholds are too low, Kubernetes may restart containers too frequently, leading to instability. Conversely, high thresholds may delay necessary intervention.

In practice, setting a balanced failure threshold—considering the application’s typical error rates and tolerance—is essential. For instance, allowing a few consecutive probe failures before a restart can prevent transient issues from causing unwarranted restarts.
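As a rough rule, a container is restarted after it has failed continuously for about failureThreshold × periodSeconds seconds; the exact time also depends on when the failure starts relative to the probe schedule and on timeoutSeconds. With the defaults (periodSeconds 10, failureThreshold 3) that is roughly 30 seconds. The sketch below, assuming a hypothetical /healthz endpoint on port 8080, tolerates about 5 × 10 = 50 seconds of consecutive failures instead.

```yaml
livenessProbe:
  httpGet:
    path: /healthz      # hypothetical endpoint
    port: 8080          # hypothetical port
  periodSeconds: 10     # probe every 10 seconds
  failureThreshold: 5   # restart only after 5 consecutive failures (about 50 s)
```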

4. Apply Appropriate Restart Policies

Restart policies are integral to how Kubernetes handles container failures detected by liveness probes. Configuring these policies correctly ensures that containers are restarted appropriately without causing unnecessary churn or resource wastage.

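A pod's restartPolicy accepts three values: Always (the default), OnFailure, and Never. Choose the policy that best fits your application’s behavior. For instance, OnFailure is often used for batch-style workloads where a failure indicates the need for a restart, while Always is suitable for services expected to run continuously. With Never, a container killed by a failed liveness probe is not restarted at all. Note also that pods managed by a Deployment must use Always.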
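restartPolicy is set at the pod level, alongside the containers list. The sketch below uses a hypothetical pod name and a placeholder workload built from the same busybox pattern as the tutorial. With OnFailure, a container killed by a failed liveness probe is restarted, while a container that finishes successfully is left completed.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: batch-worker          # hypothetical name
spec:
  restartPolicy: OnFailure    # restart failed containers; leave successful ones completed
  containers:
  - name: worker
    image: busybox:latest
    # Placeholder workload: create a health file, work for 300 s, then exit 0.
    args:
    - /bin/sh
    - -c
    - touch /tmp/allgood; sleep 300; exit 0
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/allgood
      periodSeconds: 10
```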

5. Monitor and Update Probes

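Monitoring the performance and effectiveness of liveness probes is crucial for maintaining their relevance over time. Regularly review probe results, such as the Unhealthy events Kubernetes records when a probe fails (visible with kubectl describe pod or kubectl get events) and container restart counts, to ensure the probes accurately reflect container health without causing false positives or negatives.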

As your applications evolve, update the probes to reflect new health check endpoints or altered application behaviors. Continuous monitoring and updating ensure that your liveness probes remain effective and aligned with current operational realities.

6. Avoid Cascading Failures

Cascading failures are a critical issue that can arise when liveness probes are not carefully configured, especially when they involve dependencies on external services. In a Kubernetes environment, if a liveness probe checks external dependencies like databases or authentication services, a failure in any of these dependencies can lead to a chain reaction. This occurs when multiple services, dependent on a single external service, detect a failure in that service and incorrectly mark themselves as unhealthy, leading Kubernetes to restart them unnecessarily.

To avoid cascading failures, liveness probes should focus strictly on the health of the container itself rather than its dependencies. For instance, rather than configuring a liveness probe to check the status of an external service, configure it to check only whether the container's own process is responsive. This ensures that a temporary failure in an external service does not propagate through the system, causing widespread and unnecessary restarts.
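A sketch of a probe scoped this way, assuming the application exposes a hypothetical /healthz handler on port 8080 that only confirms the process itself can respond (for example, that its request loop is not blocked) and deliberately does not call the database or other external services:

```yaml
livenessProbe:
  httpGet:
    # Hypothetical endpoint: answers from the process itself and does not
    # check databases, caches, or authentication services.
    path: /healthz
    port: 8080
  periodSeconds: 10
  timeoutSeconds: 2
  failureThreshold: 3
```

If a shared dependency such as the database goes down, this probe keeps passing, so Kubernetes does not restart every dependent container at once.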

Kubernetes Deployment with Codefresh

Codefresh GitOps, powered by Argo, helps you get more out of Kubernetes and streamline deployment by answering important questions within your organization, whether you’re a developer or a product manager. For example:

- What features are deployed right now in any of your environments?

- What features are waiting in Staging?

- What features were deployed last Thursday?

- Where is feature #53.6 in our environment chain?

What’s great is that you can answer all of these questions by viewing one single dashboard. Our applications dashboard shows:

- Services affected by each deployment

- The current state of Kubernetes components

- Deployment history and log of who deployed what and when and the pull request or Jira ticket associated with each deployment

Deploy more and fail less with Codefresh and Argo