What Is CI/CD with Kubernetes?

Containerization and Kubernetes are improving the consistency, speed, and agility of software projects. They offer a common declarative language for writing applications, operating jobs, and running distributed workloads.

In a Kubernetes environment, you describe a desired state in declarative YAML and apply it; Kubernetes then creates and adjusts resources in the cluster to reach that state. For example, it can scale applications up or down, provision storage, and, through cloud provider integrations, add more machines (nodes) to a cluster. Kubernetes also manages the application lifecycle, for example by restarting or replacing application instances when their pod or container shuts down or malfunctions.
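The desired-state idea can be illustrated with a minimal Python sketch. It is not how Kubernetes is implemented: the `reconcile` function and the dictionary shapes are hypothetical, and the sketch only shows the principle of comparing what is declared with what is running and deriving corrective actions.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Return the actions needed to move `actual` toward `desired`.

    Both arguments map an application name to a replica count.
    These shapes are illustrative assumptions, not a Kubernetes API.
    """
    actions = []
    for app, want in sorted(desired.items()):
        have = actual.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} replica(s) of {app}")
        elif have > want:
            actions.append(f"stop {have - want} replica(s) of {app}")
    # Anything still running that is no longer declared gets removed.
    for app in sorted(set(actual) - set(desired)):
        actions.append(f"delete {app}")
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    actual = {"web": 1, "worker": 2, "legacy": 1}
    for action in reconcile(desired, actual):
        print(action)
```

When declared and running state already match, the function returns an empty list, which corresponds to the steady state a declarative system aims for.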

However, for all its power, Kubernetes does not replace continuous integration / continuous delivery (CI/CD) practices. You still need a robust CI system, integrated with your container registry, that automatically builds new versions of container images. Within Kubernetes, you need automated tools to establish a continuous delivery process. Most of these tools are based on GitOps principles.

5 Benefits of Kubernetes for Your CI/CD Pipeline

A CI/CD pipeline needs to ensure that application updates occur quickly and automatically. Kubernetes provides capabilities for automation and efficiency, helping solve various problems, including:

- Release cycle time: manual testing and deployment processes can cause delays, pushing back the production timeline. A manual CI/CD process can result in code-merge collisions, extending the release time of patches and updates.

- Outages: a manual infrastructure management process requires teams to remain alert around the clock in case a power outage or traffic spike occurs. If an application goes down, businesses can lose customers and money. Kubernetes automates updates and patches to provide a quick and efficient response.

- Server usage efficiency: applications that are not efficiently packed onto servers can incur excess capacity costs. This can happen whether the application runs on-premises or in the cloud. Kubernetes helps maximize server usage efficiency so that capacity stays balanced.

- Containerized code: Kubernetes enables you to run applications in containers deployed with all the necessary resources and libraries. Containerizing code makes applications portable between environments and easy to scale and replicate.

- Deployment orchestration: Kubernetes provides automation capabilities for managing and orchestrating containers. It can automate container deployment, monitor container health, and scale to meet changing demands.

The capabilities provided by Kubernetes help reduce the amount of time and effort required to deploy applications via a CI/CD pipeline. It provides an efficient model to monitor and control capacity demands and usage. Additionally, Kubernetes automates application management to reduce outages.
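Scaling to meet demand can be made concrete with the replica calculation described in the Kubernetes Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies only that formula plus a simple clamp. The function name and default bounds are hypothetical, and the real autoscaler also applies tolerances and stabilization behavior that are not modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to metric pressure.

    `current_metric` and `target_metric` use the same unit, for example
    average CPU utilization in percent. The bounds are illustrative defaults.
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% utilization with a 60% target.
    print(desired_replicas(4, 90, 60))
```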

What is GitOps and How is it Used in Kubernetes?

GitOps is a way to achieve continuous deployment of cloud-native applications. It focuses on a developer-centric experience using tools that developers are already familiar with, such as Git version control repositories.

Triggering CI/CD pipelines with Git-based tasks has several benefits in terms of collaboration and ease of use. All pipeline changes and source code are stored in a unified source repository, allowing developers to review changes and eliminate bugs before deployment, and easily roll back in case of production issues.

5 Notable CI/CD Tools for Kubernetes

Codefresh

Codefresh is a Kubernetes-native CI/CD platform designed to simplify the continuous integration and delivery of applications. It builds on open-source tools like Argo to provide a comprehensive solution for managing deployments, rollbacks, and version control in Kubernetes environments. Codefresh supports GitOps workflows, allowing users to manage deployments via Git repositories, which improves traceability and consistency.

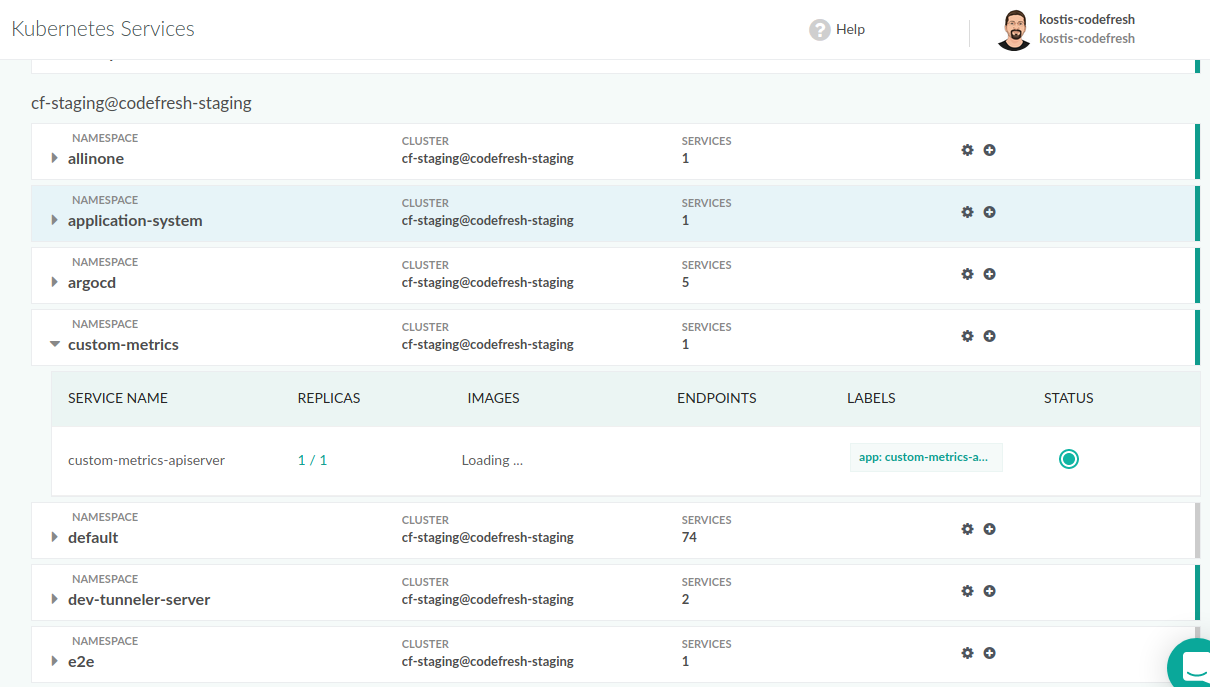

Codefresh’s intuitive interface and extensive integrations with Kubernetes make it ideal for teams looking to streamline their software delivery pipelines. Its built-in monitoring and visualization tools help track deployment statuses, automate testing, and ensure that applications are continuously aligned with a desired state.

Octopus Deploy

Octopus Deploy is a deployment automation tool that integrates with Kubernetes, allowing users to define, schedule, and monitor deployments across various environments. With its built-in support for Kubernetes, Octopus simplifies the release process by providing templates for deploying Helm charts, YAML manifests, and container images. This enables teams to automate complex deployment workflows, track releases, and roll back changes if necessary.

Octopus Deploy is particularly useful for enterprises managing large-scale Kubernetes environments, as it supports multi-cluster deployments and can integrate with CI/CD pipelines to automate the entire release process from development to production. Octopus focuses on simple automation of complex environment promotion scenarios, which many other Kubernetes tools struggle with.

Argo CD

Argo CD is a declarative, GitOps-based continuous delivery tool specifically designed for Kubernetes. It simplifies application deployment and lifecycle management by leveraging Git repositories as the single source of truth for the desired state of applications.

Argo CD automates the synchronization between the actual state of applications running in Kubernetes clusters and the target state defined in Git. It supports various configuration management tools, such as Helm charts, Kustomize, and Jsonnet, making it flexible for managing complex deployments. By continuously monitoring and reconciling application states, Argo CD ensures consistency, transparency, and reliability in Kubernetes environments.
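As a rough illustration of what "target state defined in Git" looks like, the sketch below builds an Argo CD `Application` resource as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name, repository URL, path, and namespaces are placeholders chosen for this example, and the `argocd` namespace assumes a default-style installation.

```python
import json


def argo_application(name, repo_url, path, namespace, revision="HEAD"):
    """Build an Argo CD Application manifest (all argument values are examples)."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Assumes Argo CD is installed in the "argocd" namespace.
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            # Where the desired state lives in Git.
            "source": {"repoURL": repo_url, "targetRevision": revision, "path": path},
            # Where it should be applied; this URL refers to the local cluster.
            "destination": {"server": "https://kubernetes.default.svc", "namespace": namespace},
            # Automated sync: remove resources deleted from Git and undo drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = argo_application(
        name="web",
        repo_url="https://example.com/org/deploy-config.git",  # placeholder
        path="apps/web",
        namespace="production",
    )
    print(json.dumps(app, indent=2))
```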

GitHub Actions

GitHub Actions is a robust continuous integration and continuous delivery (CI/CD) platform that enables developers to automate their software build, test, and deployment pipelines directly within GitHub. With workflows written in YAML, it simplifies the automation of tasks triggered by repository events, such as pull requests, commits, or issue creation.

GitHub Actions provides flexibility by supporting both GitHub-hosted runners for Linux, macOS, and Windows, and self-hosted runners for custom infrastructure. Beyond traditional CI/CD, it can automate additional repository tasks like labeling issues or triggering API calls.

Jenkins X

Jenkins X is a cloud-native platform designed to automate and accelerate Continuous Integration (CI) and Continuous Delivery (CD) for developers building software on Kubernetes.

By integrating with popular open-source tools, Jenkins X provides a cohesive solution to improve development speed, reliability, and scalability. It leverages GitOps to manage Kubernetes clusters, offering a fully automated and consistent approach to application deployment and lifecycle management. With Jenkins X, teams can focus on creating software while the platform handles infrastructure, pipelines, and deployment workflows.

Kubernetes CI/CD Best Practices

Containers Should Be Immutable

Immutable containers ensure that once a container image is built, its contents are not changed at runtime; any change ships as a new image. This practice eliminates discrepancies between development, testing, and production environments. By treating containers as immutable, you create a clear separation between the build and run stages, which enhances consistency and reliability.

Key Practices for Immutable Containers:

- Build Once, Deploy Anywhere: Build a container image once and deploy the same image across all environments, avoiding modifications that can introduce inconsistencies.

- Externalize Configuration: Use environment variables, ConfigMaps, or Secrets to manage environment-specific configurations instead of baking them into the container image.

- Avoid Ephemeral Changes: Do not store application logs, temporary files, or other runtime data within the container. Use external storage solutions or Kubernetes-native constructs like PersistentVolumes.

- Use Read-Only Filesystems: Configure containers to use read-only filesystems to prevent accidental or malicious modifications.

By following these practices, teams can achieve greater stability, traceability, and reproducibility in their CI/CD pipelines.
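The practices above can be sketched as a pod spec built in Python. This is an illustrative fragment only: the helper name, image reference, and ConfigMap name are hypothetical, and the digest is a dummy value. It pins the image by digest, reads configuration from a ConfigMap, and sets a read-only root filesystem with a scratch volume for temporary files.

```python
import json


def immutable_pod_spec(name, image, config_map):
    """Build a pod spec that follows the immutable-container practices."""
    if "@sha256:" not in image:
        # A mutable tag could point at different builds over time.
        raise ValueError("pin the image by digest so every environment runs the same build")
    container = {
        "name": name,
        "image": image,
        # Environment-specific settings come from outside the image.
        "envFrom": [{"configMapRef": {"name": config_map}}],
        # Block writes to the container's own filesystem.
        "securityContext": {"readOnlyRootFilesystem": True},
        # Scratch space for temporary files lives in a separate volume.
        "volumeMounts": [{"name": "tmp", "mountPath": "/tmp"}],
    }
    return {"containers": [container], "volumes": [{"name": "tmp", "emptyDir": {}}]}


if __name__ == "__main__":
    dummy_digest = "0" * 64  # placeholder, not a real image digest
    spec = immutable_pod_spec(
        "web", f"registry.example.com/shop/web@sha256:{dummy_digest}", "web-config"
    )
    print(json.dumps(spec, indent=2))
```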

Leverage the Blue/Green Deployment Pattern

The blue/green deployment pattern minimizes downtime and reduces risk by maintaining two separate environments—blue (current production) and green (new version). This approach allows teams to test new deployments in isolation before switching traffic to the updated environment.

Steps to Implement Blue/Green Deployment in Kubernetes:

- Deploy the Green Environment: Deploy the new version of your application (green) alongside the current version (blue) using Kubernetes Deployment objects.

- Control Traffic Routing: Use a Kubernetes Service or Ingress to control which environment (blue or green) receives traffic. A service mesh such as Istio or Linkerd can add fine-grained traffic controls.

- Perform Testing: Verify the green environment through automated tests or canary releases before redirecting all traffic.

- Switch Traffic: Once validated, update the routing configuration to direct all traffic to the green environment.

- Rollback if Necessary: If issues are identified, revert traffic to the blue environment and troubleshoot the green deployment.

This pattern ensures seamless transitions during updates, enhancing the user experience and reducing downtime risks.
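One common way to implement the switch is to give the blue and green Deployments different labels and change the Service selector. The sketch below models that with plain dictionaries; the `version` label key, the port numbers, and both function names are assumptions made for this example rather than a required convention.

```python
import copy
import json


def service_for(app, color, port=80, target_port=8080):
    """Build a Service that routes to pods labeled with the given color."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            # Only pods carrying both labels receive traffic.
            "selector": {"app": app, "version": color},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def switch_traffic(service, color):
    """Return a copy of the Service pointing at another color."""
    updated = copy.deepcopy(service)
    updated["spec"]["selector"]["version"] = color
    return updated


if __name__ == "__main__":
    blue = service_for("web", "blue")
    green = switch_traffic(blue, "green")      # cut over to the new version
    rollback = switch_traffic(green, "blue")   # revert if problems appear
    print(json.dumps(green["spec"]["selector"]))
    print(json.dumps(rollback["spec"]["selector"]))
```

Because the cutover is a single selector change, rolling back is the same operation in reverse, as long as the blue Deployment is still running.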

Keep Secrets And Config Out Of Containers

Embedding secrets and configurations directly into container images poses security and operational risks. Instead, use Kubernetes-native solutions to manage sensitive data and environment-specific configurations securely and dynamically.

Best Practices for Managing Secrets and Configurations:

- Use Kubernetes Secrets: Store sensitive information like API keys or database credentials in Kubernetes Secrets and expose them to pods as environment variables or mounted volumes.

- Leverage ConfigMaps: Externalize non-sensitive configurations, such as application settings, using Kubernetes ConfigMaps.

- Enable Encryption: Use Kubernetes encryption at rest to secure Secrets in etcd and integrate with external key management systems (KMS) for enhanced security.

- Implement Access Controls: Use Role-Based Access Control (RBAC) to restrict access to Secrets and ConfigMaps based on the principle of least privilege.

- Regularly Rotate Secrets: Automate the rotation of sensitive credentials and ensure applications can handle dynamic updates seamlessly.

By adopting these practices, you can protect sensitive data and simplify configuration management while maintaining flexibility in your CI/CD workflows.
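As a small sketch of the first practice, the code below builds a Secret manifest and an environment variable entry that references it. The function names, the Secret name, and the credential value are made up for illustration. Note that the `data` field of a Secret is base64-encoded, which is an encoding rather than encryption, so encryption at rest and RBAC still matter.

```python
import base64
import json


def secret_manifest(name, values):
    """Build an Opaque Secret; `values` maps keys to plain-text strings."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        # Secret data must be base64-encoded (encoding, not encryption).
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


def env_from_secret(env_name, secret_name, key):
    """Container env entry that reads one key from a Secret at runtime."""
    return {
        "name": env_name,
        "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}},
    }


if __name__ == "__main__":
    # Example value only; real credentials should never be hard-coded.
    secret = secret_manifest("db-credentials", {"password": "example-password"})
    env = env_from_secret("DB_PASSWORD", "db-credentials", "password")
    print(json.dumps(secret, indent=2))
    print(json.dumps(env, indent=2))
```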

Use Helm to Manage Deployments

Helm is a package manager for Kubernetes that simplifies the management of application deployments. It uses charts, which are pre-configured Kubernetes resources bundled together, to make deployment more consistent and reusable. By leveraging Helm, teams can automate the deployment process, manage versioning, and reduce configuration errors.

Key practices for using Helm include:

- Create Reusable Charts: Define reusable Helm charts for applications, including their dependencies, configurations, and templates. This ensures consistency across deployments and environments.

- Version Control Charts: Store Helm charts in a version-controlled repository to track changes and roll back to previous versions if needed.

- Use Values Files: Define environment-specific configurations in separate values.yaml files, allowing you to customize deployments without modifying the chart templates.

- Leverage Helm Hooks: Use hooks to execute tasks like database migrations or service warmups before or after specific lifecycle events.

- Regularly Update Charts: Keep Helm charts updated to incorporate fixes, new features, and security patches.

By adopting Helm, teams can streamline their CI/CD pipelines, minimize deployment errors, and improve collaboration across environments.
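To show why separate values files keep templates untouched, here is a simplified model of how an environment-specific values file overrides chart defaults: nested maps merge key by key, and any other value, including a list, is replaced. This approximates the behavior and is not Helm's own code; details such as null handling are not modeled, and the keys and values are invented for the example.

```python
def merge_values(base, override):
    """Merge `override` into `base` without modifying either input."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Nested maps are merged recursively.
            merged[key] = merge_values(merged[key], value)
        else:
            # Scalars and lists are replaced outright.
            merged[key] = value
    return merged


if __name__ == "__main__":
    defaults = {
        "replicaCount": 1,
        "image": {"repository": "registry.example.com/shop/web", "tag": "1.4.2"},
        "resources": {"limits": {"cpu": "250m"}},
    }
    production = {"replicaCount": 3, "resources": {"limits": {"cpu": "1"}}}
    print(merge_values(defaults, production))
```

The production file only lists what differs, so the image settings from the defaults carry over unchanged.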

Try Pull-Based CI/CD Workflows

A pull-based CI/CD workflow is a GitOps practice where the deployment system pulls changes from a Git repository rather than relying on a push-based trigger. This approach aligns well with Kubernetes and enables better control, traceability, and security in CI/CD pipelines.

Best practices for implementing pull-based workflows include:

- Git as a Single Source of Truth: Ensure that all deployment manifests, configurations, and infrastructure definitions are stored in a Git repository. Changes in Git automatically reflect desired states for deployments.

- Use Controllers for Synchronization: Deploy controllers such as Flux or Argo CD in Kubernetes clusters to monitor repositories and sync cluster states with Git.

- Implement Access Control: Restrict write access to production branches in Git repositories and use pull requests for approvals and reviews to ensure changes are validated before deployment.

- Automate Change Detection: Set up automation tools to detect changes in Git repositories and initiate synchronization tasks automatically.

- Track Changes: Use Git logs and diffs to trace who made changes, when they were made, and what they involved, enhancing auditability and debugging.

Pull-based workflows enhance security by reducing the need for direct access to production environments, while providing a more structured and reliable deployment process.
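The pull model can be sketched as a loop that runs inside the cluster: it checks the Git revision, and only when the revision has changed does it apply the manifests. This is a toy model, not the implementation of Flux or Argo CD. The function name and the two callables passed in are hypothetical stand-ins for "read the latest commit" and "apply manifests at that commit".

```python
def sync_once(fetch_revision, apply_manifests, last_applied):
    """Run one pass of a pull-based sync.

    Returns a tuple of (revision now applied, whether anything changed).
    """
    revision = fetch_revision()
    if revision == last_applied:
        # Cluster already matches Git; nothing to do.
        return last_applied, False
    apply_manifests(revision)
    return revision, True


if __name__ == "__main__":
    applied = []
    commits = iter(["a1b2c3", "a1b2c3", "d4e5f6"])  # simulated Git history

    last = None
    for _ in range(3):
        last, changed = sync_once(lambda: next(commits), applied.append, last)
        print(last, "synced" if changed else "up to date")
```

Because the controller initiates the connection outward to Git, the CI system does not need credentials for the production cluster.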

Codefresh: The Kubernetes GitOps Platform

The Codefresh platform is a complete software supply chain to build, test, deliver, and manage software, with integrations so teams can pick best-of-breed tools to support that supply chain. Codefresh unlocks the full enterprise potential of Argo Workflows, Argo CD, Argo Events, and Argo Rollouts and provides a control plane for managing them at scale.

Codefresh provides the following key capabilities:

Single pane of glass for the entire software supply chain

You can easily deploy Codefresh onto a Kubernetes cluster, run one command to bootstrap it, and the entire configuration is written to Git. By integrating Argo Workflows and Events for running delivery pipelines, and Argo CD and Rollouts for GitOps deployments and progressive delivery, Codefresh provides a complete software lifecycle solution with simplified management that works at scale.

Built on GitOps for total traceability and reliable management

Codefresh is the only enterprise DevOps solution that operates completely with GitOps from the ground up. Using the CLI or GUI in Codefresh generally results in a Git commit, whether that’s installing the platform, creating a pipeline, or deploying new software. The CLI and GUI simply act as extended interfaces of version control. A change to the desired state of the software supply chain will automatically be applied to the actual state.

Simplified management that works at scale

Codefresh greatly simplifies the management and adoption of Argo. If you’ve already defined Argo workflows and events, they will work natively in Codefresh. Codefresh acts as a control plane across all your instances – rather than many instances of Argo being operated separately and maintained individually, the control plane allows all instances to be monitored and managed in concert.

Continuous delivery and progressive delivery made easy

Those familiar with Argo CD and Argo Workflows will see their configurations are fully compatible with Codefresh and can instantly gain value from its enterprise features. Those new to continuous delivery will find the setup straightforward and easy. The new unified UI brings the full value of Argo CD and Argo Rollouts into a single view so you no longer have to jump around between tools to understand what’s going on.
