What is Argo Workflows?

Argo Workflows is an open-source, container-native workflow engine for orchestrating parallel jobs on Kubernetes. It was developed to help solve complex data processing tasks in a containerized environment. In Argo, each step of a workflow runs as a container, and the workflow itself is defined and managed as a Kubernetes resource. This provides several advantages, such as scalability, maintainability, and the ability to run workflows on any Kubernetes cluster.

Argo Workflows offers powerful features such as Directed Acyclic Graph (DAG) workflows, step-based workflows, and scripts. It also supports workflow templates, allowing users to define reusable and composable workflow steps. This makes it a versatile tool for orchestrating complex jobs that require parallel execution and conditional branching.
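To make the DAG and reusable-template ideas concrete, here is a minimal sketch that builds an Argo Workflow manifest as a plain Python dictionary and prints it as JSON. Argo manifests are normally written in YAML; Python is used here only to keep the example short and checkable. The workflow name `dag-diamond-`, the template names `echo` and `diamond`, the task names, and the `alpine:3.19` image are illustrative assumptions, not part of any real project.

```python
import json


def echo_task(name, dependencies=None):
    """Return one DAG task that calls the shared 'echo' template."""
    task = {
        "name": name,
        "template": "echo",
        "arguments": {"parameters": [{"name": "message", "value": name}]},
    }
    if dependencies:
        task["dependencies"] = list(dependencies)
    return task


def build_dag_workflow():
    """Build a diamond-shaped DAG: A first, then B and C in parallel, then D."""
    # Reusable template: one container that echoes its input parameter.
    echo_template = {
        "name": "echo",
        "inputs": {"parameters": [{"name": "message"}]},
        "container": {
            "image": "alpine:3.19",  # assumed image, any image with `echo` works
            "command": ["echo", "{{inputs.parameters.message}}"],
        },
    }
    # DAG template: each task lists the tasks it depends on.
    dag_template = {
        "name": "diamond",
        "dag": {
            "tasks": [
                echo_task("A"),
                echo_task("B", ["A"]),
                echo_task("C", ["A"]),
                echo_task("D", ["B", "C"]),
            ]
        },
    }
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "dag-diamond-"},
        "spec": {"entrypoint": "diamond", "templates": [echo_template, dag_template]},
    }


workflow = build_dag_workflow()
manifest_json = json.dumps(workflow, indent=2)
print(manifest_json)
```

Because Kubernetes accepts JSON manifests as well as YAML, the printed output could in principle be saved to a file and created on a cluster that has Argo Workflows installed. Note how all four tasks reuse the single `echo` template and differ only in their arguments and dependencies.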

Learn more in our detailed guide to Argo Workflow templates.

What is Apache Airflow?

Apache Airflow is an open-source platform, originally created at Airbnb and now maintained under the Apache Software Foundation, to programmatically author, schedule, and monitor workflows. Its main strength lies in its ability to define workflows as code, allowing for dynamic pipeline generation, testing, and versioning.

Airflow follows a directed acyclic graph (DAG) model where workflows are defined as a collection of tasks with dependencies. This makes it easy to visualize and understand the progression of tasks, allowing for easier debugging and optimization. Airflow also supports a wide range of operators for tasks, including Bash, Python, and even Docker, further enhancing its flexibility.
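The DAG model itself can be illustrated without Airflow installed. The sketch below is not Airflow's API; it uses Python's standard-library `graphlib` module to show how a set of task dependencies determines which tasks can run together and in what order. The task names (`extract`, `clean`, `validate`, `load`) are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "validate": {"extract"},
    "load": {"clean", "validate"},
}


def execution_batches(deps):
    """Group tasks into batches; tasks in the same batch can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the graph contains a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)
    return batches


def is_acyclic(deps):
    """Return True if the dependency graph is a valid DAG."""
    try:
        TopologicalSorter(deps).prepare()
        return True
    except CycleError:
        return False


batches = execution_batches(dependencies)
print(batches)
```

A scheduler built on this model only has to start each task once everything it depends on has finished, which is also why a dependency cycle is rejected: no task in the cycle could ever become ready.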

Argo Workflows vs. Apache Airflow: 5 Key Differences

1. Kubernetes-Native vs Standalone

One of the key differences between Argo Workflows and Apache Airflow lies in their respective architectures. Argo Workflows is Kubernetes-native: it is implemented as a Kubernetes custom resource, and each workflow step runs as a pod on the cluster. This allows for easier scaling and resource management, as Kubernetes handles these aspects.

On the other hand, Apache Airflow operates as a standalone application. It can be containerized and run on Kubernetes, and it can launch tasks as pods through its KubernetesExecutor or KubernetesPodOperator, but Kubernetes is an optional backend rather than part of its core design. This means that managing resources and scaling can be more of a challenge with Airflow.

2. Workflow Design

Argo Workflows and Apache Airflow also differ significantly in their workflow design. Argo Workflows can express more complex control flow, with built-in support for loops, recursion, and conditional logic. This makes it a powerful tool for orchestrating complicated jobs that require a high degree of control and flexibility.

Airflow, however, excels in its ability to define workflows as code. This allows for dynamic pipeline generation, versioning, and testing. Because an Airflow workflow is an acyclic graph, it doesn’t support run-time loops and recursion out of the box, but it does offer a wide range of operators for tasks, providing ample flexibility.
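As a rough illustration of loops and conditional logic in Argo, the sketch below builds a step-based workflow manifest in which one step fans out over a list with `withItems` and only runs when a `when` expression holds. It is written as a Python dictionary purely for convenience; the names `loop-conditional-`, `flip-coin`, `echo`, `flip`, and `process`, along with the container images, are assumptions made for this example.

```python
import json


def build_loop_workflow(items):
    """Build a workflow that flips a coin, then loops over `items` only on heads."""
    # Script template: its printed output becomes `outputs.result`.
    flip_template = {
        "name": "flip-coin",
        "script": {
            "image": "python:3.12-alpine",  # assumed image
            "command": ["python"],
            "source": "import random\nprint(random.choice(['heads', 'tails']))",
        },
    }
    echo_template = {
        "name": "echo",
        "inputs": {"parameters": [{"name": "message"}]},
        "container": {
            "image": "alpine:3.19",  # assumed image
            "command": ["echo", "{{inputs.parameters.message}}"],
        },
    }
    main_template = {
        "name": "main",
        # Outer list: sequential groups. Inner list: steps that run in parallel.
        "steps": [
            [{"name": "flip", "template": "flip-coin"}],
            [
                {
                    "name": "process",
                    "template": "echo",
                    "arguments": {
                        "parameters": [{"name": "message", "value": "{{item}}"}]
                    },
                    "withItems": list(items),  # loop: one step instance per item
                    "when": "{{steps.flip.outputs.result}} == heads",  # condition
                }
            ],
        ],
    }
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "loop-conditional-"},
        "spec": {
            "entrypoint": "main",
            "templates": [flip_template, echo_template, main_template],
        },
    }


loop_workflow = build_loop_workflow(["a", "b", "c"])
print(json.dumps(loop_workflow, indent=2))
```

The loop and the condition are evaluated by the Argo controller at run time, not by Python: the dictionary only declares them. Recursion works along the same lines, with a template that references itself by name in one of its steps.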

3. User Interface

In terms of user interface, Apache Airflow offers a more robust and interactive UI compared to Argo Workflows. Airflow’s UI allows you to monitor your workflows in real time, view logs, and even rerun tasks directly from the interface. This can be a significant benefit for debugging and optimizing workflows.

Argo Workflows, while having a simpler UI, provides a straightforward and clean interface for viewing and managing workflows. While it may not be as feature-rich as Airflow’s UI, it is more than capable for most workflow management tasks.

4. Scheduling

When it comes to scheduling, both Argo Workflows and Apache Airflow offer robust options. However, they approach scheduling differently. Argo Workflows schedules recurring runs with its own CronWorkflow resource, which uses cron syntax and is modeled on the Kubernetes CronJob, leveraging Kubernetes for resource management and reliability.

Apache Airflow, on the other hand, uses its own scheduler. This allows for more complex scheduling rules and dependencies, but it also means that the performance and reliability of the scheduler depend on the resources of the infrastructure where the Airflow scheduler runs.
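On the Argo side, recurring runs are typically declared with a `CronWorkflow` resource that wraps an ordinary workflow spec in a cron schedule. The following is a minimal sketch of such a manifest, again built as a Python dictionary. The resource name `nightly-report`, the schedule, and the image are hypothetical, and field names can vary between Argo releases (newer versions also accept a list of schedules), so check the version you run.

```python
import json


def build_cron_workflow(name, schedule, message):
    """Build a CronWorkflow manifest that runs a single echo step on a schedule."""
    if len(schedule.split()) != 5:
        raise ValueError("expected a five-field cron expression")
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "CronWorkflow",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,
            # Skip a run if the previous one is still in progress.
            "concurrencyPolicy": "Forbid",
            # The workflow to create on each tick, same shape as a Workflow spec.
            "workflowSpec": {
                "entrypoint": "main",
                "templates": [
                    {
                        "name": "main",
                        "container": {
                            "image": "alpine:3.19",  # assumed image
                            "command": ["echo", message],
                        },
                    }
                ],
            },
        },
    }


# Hypothetical example: run every day at 02:00.
cron_workflow = build_cron_workflow("nightly-report", "0 2 * * *", "generating report")
print(json.dumps(cron_workflow, indent=2))
```

The scheduling metadata lives alongside the workflow definition in one cluster resource, whereas in Airflow the schedule is an attribute of the DAG that Airflow's own scheduler process evaluates.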

5. Community and Support

Both Argo Workflows and Apache Airflow have active and supportive communities. However, as Apache Airflow has been around for a longer time, it has a larger community and more extensive documentation. This can be a significant advantage when looking for help or trying to troubleshoot issues.

Argo Workflows, while having a smaller community, has a rapidly growing user base. The community is very active, and the documentation is improving and expanding rapidly.

Argo Workflows and Apache Airflow: How to Choose?

Here are the main considerations that can help you select which of these workflow tools is the best fit for your project.

Consider Your Project Requirements

Argo Workflows and Apache Airflow cater to different project requirements. Apache Airflow is a platform designed to programmatically author, schedule, and monitor workflows. It allows you to define workflows as Directed Acyclic Graphs (DAGs) and offers flexibility in setting up complex dependencies.

On the other hand, Argo Workflows is a container-native workflow engine for Kubernetes, designed for executing and managing jobs on Kubernetes. It supports complex workflows, offers graphical interfaces for workflow visualization, and enables the use of Kubernetes resources directly.

When considering your project requirements, think about the complexity of your workflows, the level of flexibility you need, and the kind of environment you’re working in. If you’re working in a Kubernetes environment, Argo Workflows may be a better fit. Conversely, if you need to set up complex dependencies and require a high level of flexibility, Apache Airflow might be the way to go.

Assess Your Team’s Skills

The skills of your team play a significant role in choosing between Argo Workflows and Apache Airflow. Both tools have a steep learning curve and require a certain level of expertise to handle effectively.

Apache Airflow is written in Python, and its workflows are defined as Python code, so a good understanding of Python is necessary to create, modify, and manage workflows. Moreover, understanding the concept of Directed Acyclic Graphs (DAGs) is crucial for creating efficient workflows in Airflow.

On the other hand, Argo Workflows is built for Kubernetes, and its workflows are written as YAML manifests, which means your team needs to be comfortable working with Kubernetes. Knowledge of Docker and containerization is also beneficial as Argo is container-native.

Consider Future Scalability

Scalability is another critical factor to consider in the Argo Workflows vs Airflow debate. As your business grows, your data workflows will become more complex, and the tool you choose should be able to scale with your needs.

Apache Airflow offers high scalability and can handle complex workflows by distributing tasks across workers through executors such as the CeleryExecutor or KubernetesExecutor. It supports dynamic pipeline generation, which means it can adapt to changing workflows as your business scales. Moreover, Airflow’s robust community and extensive documentation make it a sustainable choice for the long term.

Argo Workflows, being a Kubernetes-native tool, offers excellent scalability as well. It can handle large-scale workflows and allows for easy scaling of resources. However, its community is not as extensive as Airflow’s, which could potentially impact long-term sustainability.

When considering future scalability, weigh the scalability features of both tools, the robustness of their communities, and their ability to adapt to future changes in your business.

Choosing between Argo Workflows and Apache Airflow is not a one-size-fits-all decision. It requires a thorough understanding of your project requirements, an assessment of your team’s skills, and a consideration of future scalability. By taking these factors into account, you can make an informed decision that best suits your business needs.

Learn more in our detailed guide to Argo Workflow examples

Using Argo Workflows in Codefresh Software Delivery Platform

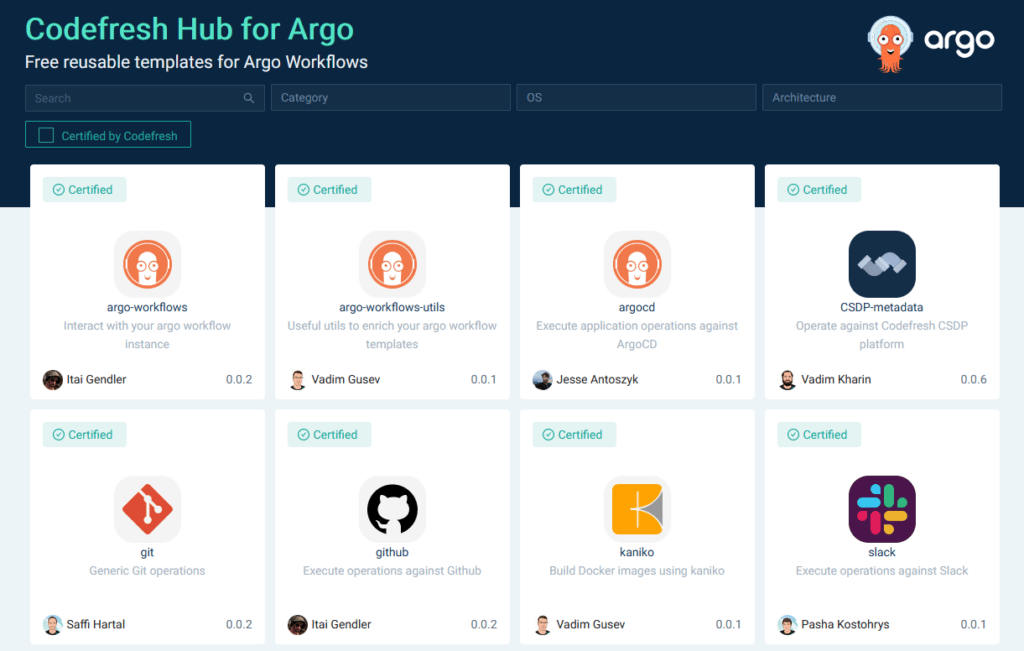

The Codefresh platform, powered by Argo, now fully incorporates Argo Workflows and Argo Events, including the Codefresh Hub for Argo. This is a central hub for Codefresh platform users and Argo users to consume and share workflow templates.

On a technical level, adopting Argo Workflows has the following advantages:

- Mature and battle-tested runtime for all pipelines.

- Kubernetes-native capabilities, such as being able to run each step in its own Kubernetes pod.

- A set of reusable steps in the form of Workflow Templates.

- Ability to reuse all existing Artifact integrations instead of spending extra effort to create our own.

The non-technical advantages are just as important:

- Organizations familiar with Argo Workflows can adopt Codefresh workflows with minimal effort.

- Faster delivery of new features – any new feature shipped by the Argo Workflows project is available to Codefresh users.

See Codefresh Workflows in action – sign up for a free trial.
