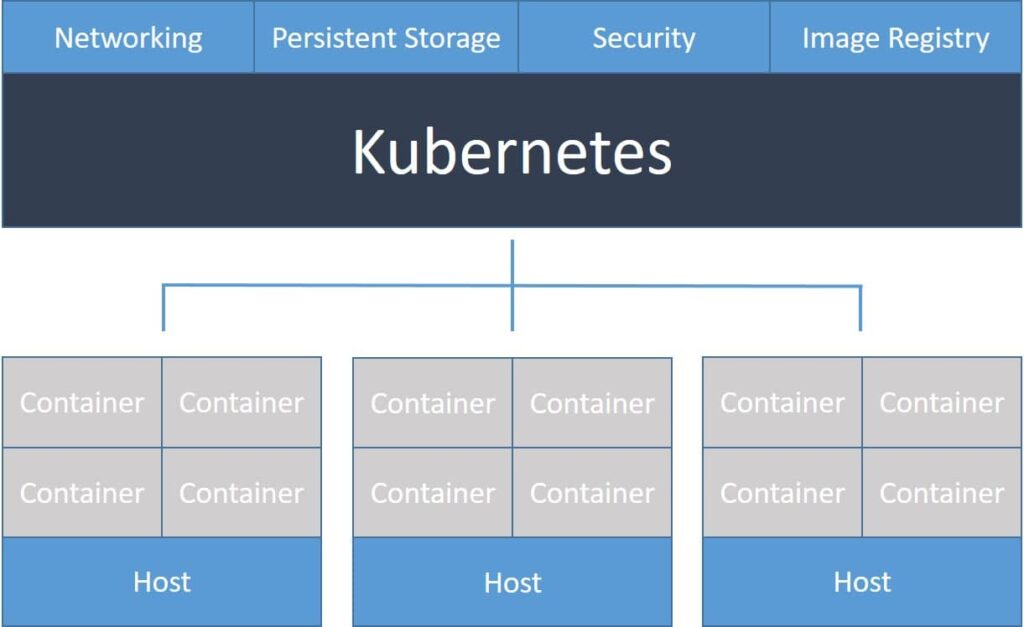

Kubernetes is an open source orchestration platform that automates the deployment of distributed microservices, delivered as one or more Linux containers (for example, Docker containers). Kubernetes lets you define your application centrally in a declarative format. From this specification, Kubernetes can deploy your whole application in a distributed environment and handle more advanced use cases such as replication.

But where did Kubernetes come from? It was originally created at Google, one of the earliest contributors to and adopters of Linux container technology. Google's internal container orchestration project was called Borg (a reference to Star Trek).

The modern Kubernetes technology is based on the lessons learned from Borg. Google open sourced the project and it was eventually donated to the Cloud Native Computing Foundation.

Kubernetes is often called k8s (the letter k, 8 characters, the letter s), “kube” and even “kubes” (pronounced “coob” and “coobs”). It is one of the most popular open source projects on GitHub with over 50,000 commits in the past 3 years. It is actively deployed in both development and production by many organizations all over the world.

Why Should You Use Kubernetes?

In short, software deployments have been steadily migrating from monolithic legacy applications to microservices. Deploying legacy applications is straightforward: install the application on a virtual machine, run that virtual machine on several physical systems, and that’s it. Deploying microservices is significantly more challenging.

Setting up the proper networking configuration to ensure all application components can communicate is far from trivial. Load balancing your front end for high availability can require almost as much engineering as building the application itself. What about security and storage? And which tools should you use for all of these tasks?

Kubernetes greatly simplifies and abstracts this additional overhead, and lets your developers focus on creating great products, not figuring out how to deploy them.

Deploying your application as a set of microservices provides a few key benefits. Without getting too deep into the technical details, they include: improved stability and reliability; decreased overhead costs; better user experience; and natural integration with continuous integration and continuous deployment systems. Kubernetes helps you realize all of these benefits.

What You Get Out Of The Box

Kubernetes is an extremely powerful orchestration tool. It enables you to run your containerized microservice application in a fully distributed environment. It is the perfect fit when migrating from monolithic applications to distributed microservices.

Kubernetes enables you to:

- Orchestrate your application across your distributed cluster

- Provide highly available applications with scaling and load balancing

- Automate your deployments, updates, and rollbacks

- Manage persistent storage

- Assess the health of your application

In addition, Kubernetes ships with the following features:

Automatic binpacking: places containers on hosts based on their resource requirements (such as CPU and memory) and other constraints, so that cluster resources are used efficiently without sacrificing availability.
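To make the idea of bin packing concrete, here is a minimal sketch using the classic first-fit-decreasing heuristic. It is not the algorithm the Kubernetes scheduler uses, which weighs many more factors; the `Node` class, the node names, and the CPU figures are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_capacity: float  # CPU cores available on this hypothetical node
    cpu_used: float = 0.0
    pods: list = field(default_factory=list)

    def fits(self, cpu_request: float) -> bool:
        return self.cpu_used + cpu_request <= self.cpu_capacity


def first_fit_decreasing(pod_requests: dict, nodes: list) -> dict:
    """Place the largest requests first, each on the first node with room."""
    placement = {}
    ordered = sorted(pod_requests.items(), key=lambda kv: kv[1], reverse=True)
    for pod, cpu in ordered:
        for node in nodes:
            if node.fits(cpu):
                node.pods.append(pod)
                node.cpu_used += cpu
                placement[pod] = node.name
                break
        else:
            placement[pod] = None  # no node has room, so the pod stays pending
    return placement


nodes = [Node("node-a", 2.0), Node("node-b", 2.0)]
requests = {"web": 1.0, "api": 1.5, "cache": 0.5, "worker": 1.0}
placement = first_fit_decreasing(requests, nodes)
print(placement)
```

With these made-up numbers, all four workloads fit on two nodes with no spare CPU left over, which is the kind of dense placement bin packing aims for.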

Self-healing: Kubernetes restarts containers that fail, and if a node goes down it reschedules the containers that were running on that node onto healthy nodes, maximizing application uptime.
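Self-healing can be pictured as a reconciliation loop: compare the desired number of replicas with what is actually running, then create replacements on healthy nodes. The sketch below is a simplified illustration of that idea, not Kubernetes code; the `reconcile` function and the pod and node names are hypothetical.

```python
def reconcile(desired_replicas: int, pods: dict, healthy_nodes: set) -> dict:
    """Return a pod -> node mapping that restores the desired replica count.

    `pods` maps each pod name to the node it was running on.
    """
    # Pods on failed nodes are considered lost.
    running = {pod: node for pod, node in pods.items() if node in healthy_nodes}

    replacement_id = 0
    while len(running) < desired_replicas and healthy_nodes:
        # Count pods per healthy node and pick the least loaded one.
        load = {node: 0 for node in sorted(healthy_nodes)}
        for node in running.values():
            load[node] += 1
        target = min(load, key=load.get)
        running[f"replacement-{replacement_id}"] = target
        replacement_id += 1
    return running


# node-b has failed, taking two of the three replicas with it.
pods = {"web-1": "node-a", "web-2": "node-b", "web-3": "node-b"}
healed = reconcile(3, pods, healthy_nodes={"node-a", "node-c"})
print(healed)
```

The lost replicas are recreated on the remaining nodes, so the replica count returns to three without any manual intervention.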

Horizontal scaling: easily scale your application across your distributed cluster with the command line, the user interface, or automatically based on resource usage.
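For automatic scaling, the Horizontal Pod Autoscaler documentation describes the core calculation as the current replica count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below applies that formula with hypothetical replica bounds; details such as the tolerance band and stabilization behavior are deliberately left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped to bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
scale_out = desired_replicas(4, current_metric=90, target_metric=60)
# 4 replicas averaging 30% CPU against a 60% target: scale in.
scale_in = desired_replicas(4, current_metric=30, target_metric=60)
print(scale_out, scale_in)
```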

Service discovery and load balancing: in Kubernetes, each pod gets its own IP address. Multiple instances of the same pod can sit behind a single internal DNS name, and traffic sent to that name is load balanced across them by default.

Automated rollouts and rollbacks: when your application has updates – for example new code or configuration – Kubernetes will roll out changes to your application while preserving health. If this causes any failures, Kubernetes will also roll back the changes to heal your application.
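The rollout-then-rollback behavior can be sketched as a toy simulation: replace instances one at a time, check health after each step, and restore the previous version if a check fails. This is only an illustration of the idea. In a real cluster, rollouts are paced by settings such as `maxSurge` and `maxUnavailable` and gated by readiness probes, and a rollback is typically triggered with `kubectl rollout undo`. The `rolling_update` function and the `is_healthy` callback are hypothetical.

```python
def rolling_update(pods: list, new_version: str, is_healthy) -> list:
    """Update one pod at a time; return the original versions if a check fails."""
    original = list(pods)
    updated = list(pods)
    for index in range(len(updated)):
        updated[index] = new_version
        if not is_healthy(new_version):
            return original  # roll back to the last known good state
    return updated


current = ["v1", "v1", "v1"]
good_rollout = rolling_update(current, "v2", is_healthy=lambda version: True)
bad_rollout = rolling_update(current, "v3", is_healthy=lambda version: False)
print(good_rollout, bad_rollout)
```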

Secrets and configuration management: you can deploy and update secret information (think usernames and passwords) and application configuration without rebuilding your container images.

Storage orchestration: automatically mount the storage system of your choice (local storage, cloud provider storage, or network storage such as an NFS volume) for your applications.

Batch execution: in addition to long-running services, Kubernetes can manage batch and CI workloads, replacing containers that fail if desired.
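As a rough illustration, a Secret is an ordinary Kubernetes object whose `data` values are base64-encoded. The sketch below builds one as a Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The `make_secret` helper, the secret name, and the credentials are hypothetical. Note that base64 is an encoding, not encryption.

```python
import base64
import json


def make_secret(name: str, values: dict) -> dict:
    """Build a Secret manifest with base64-encoded values."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


# Placeholder credentials for illustration only.
secret = make_secret("db-credentials", {"username": "admin", "password": "s3cr3t"})
print(json.dumps(secret, indent=2))
```

Because the secret lives in its own object, the application image and its Deployment can stay unchanged when a password is rotated.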
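Run-to-completion workloads are usually described with a Job object. The following sketch builds a minimal Job manifest as a Python dictionary and prints it as JSON; the job name, image, and command are hypothetical placeholders. The `backoffLimit` field caps how many times failed pods are retried.

```python
import json

job = {
    "apiVersion": "batch/v1",
    "kind": "Job",
    "metadata": {"name": "report-builder"},  # hypothetical job name
    "spec": {
        "backoffLimit": 3,  # retry failed pods up to three times
        "template": {
            "spec": {
                # Job pods must use Never or OnFailure, not Always.
                "restartPolicy": "Never",
                "containers": [
                    {
                        "name": "report",
                        "image": "example.com/report-builder:1.0",  # placeholder image
                        "command": ["python", "build_report.py"],
                    }
                ],
            }
        },
    },
}

job_json = json.dumps(job, indent=2)
print(job_json)
```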

Learn The Lingo

There are a bunch of terms to learn when you start using Kubernetes. Understanding all of these components will help your team walk the walk and talk the talk when it comes to Kubernetes.

Master: since Kubernetes is a distributed orchestration platform, it requires a “head node” that manages your cluster. The master is this node.

Node: an individual machine (virtual or physical) that is part of your cluster. Nodes perform tasks, such as running containers.

Pod: a group of one or more containers that are scheduled together on a single node. They share an IP address and can communicate with each other over localhost.

Service: a Kubernetes abstraction on top of individual pods. Each service includes a group of pods that all run the same software on a given port. These pods become part of the abstracted “service.” The service has a single IP address, and load balances requests across the individual pods.

Replication Controller: ensures that the specified number of instances of a pod is running on your cluster at all times.

DaemonSet Controller: runs a copy of a specified pod on every node in the cluster (or on a selected subset of nodes).
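A service finds its pods through label selectors. The sketch below shows a minimal Service manifest as a Python dictionary and imitates the selection step, followed by a simple round-robin over the matching pod IPs. The pod names, IPs, and the `endpoints_for` helper are hypothetical, and round robin is chosen only for readability; how traffic is actually distributed depends on the cluster's proxy mode.

```python
from itertools import cycle

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},  # pods carrying this label back the service
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

# Hypothetical pods currently running in the cluster.
pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.0.12", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.0.21", "labels": {"app": "db"}},
]


def endpoints_for(service: dict, pods: list) -> list:
    """Return the IPs of pods whose labels match every selector entry."""
    selector = service["spec"]["selector"]
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


backends = endpoints_for(service, pods)
next_backend = cycle(backends)
routed = [next(next_backend) for _ in range(4)]
print(backends, routed)
```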

Deployment: provides declarative updates for Pods and ReplicaSets (the next-generation ReplicationController). You only need to describe the desired state in a Deployment object, and the Deployment controller will change the actual state to the desired state at a controlled rate for you.
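To show what "describing the desired state" looks like, here is a minimal Deployment manifest built as a Python dictionary and printed as JSON, which could be saved to a file and passed to `kubectl apply -f`. The `make_deployment` helper, the application name, the image, and the port are hypothetical. The `strategy` block is one way to express the "controlled rate" of a rollout.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment whose selector matches its pod template labels."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # desired number of pod instances
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Add at most one extra pod and never drop below the desired count.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }


deployment = make_deployment("web", "example.com/web:1.0", replicas=3)
manifest_json = json.dumps(deployment, indent=2)
print(manifest_json)
```

Changing the `image` or `replicas` value and applying the manifest again is all it takes to request an update; the Deployment controller works out the intermediate steps.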

Automating Deployment to Kubernetes With Codefresh

To help you get your application up and running, Codefresh provides a streamlined integration with Kubernetes. You can deploy your first containers in just a few minutes.

Get started today!