Prologue

Docker is, without exaggeration, the hottest topic of discussion among the IT crowd these days. Companies, technologies, and solutions are born just to satisfy the ever-growing desire to ‘dockerize’ everything. Days and hours are spent writing Dockerfiles, revamping application architecture, and chasing the 12-factor dream. The most important question to answer before setting out on this journey of containerization is whether it’s worth it: whether your product is ready to run as a Docker container, whether the effort will be rewarded with results, and whether the whole thing will come crashing down on you eventually.

So, what should you consider before bringing containers into play? First, the architectural changes your product has to undergo to get the most out of containers. These may be extensive or minor, depending on the current state of the product. Is it a monolith? Are its components tightly coupled? Does it keep state? Is it very I/O intensive? Those are the types of questions that will get the thought process going. Docker is in no way a ‘one size fits all’ solution, and one has to use it properly in order to truly benefit. We will now take a look at the major topics that can help you make the right decision.

Overall Architecture

It’s hard to argue with the fact that containers are well suited to decoupled applications with a clear separation of roles. Call it microservices or not, but the moment your application becomes less monolithic, you are on the right path towards containerization. If that move costs hours and hours of rewriting the entire codebase of a product, then it might not be worth it. Consider researching the popular concept of 12-factor applications. While it’s not necessarily the only ‘true’ path, it does contain some valid points with regard to architectural revamping.

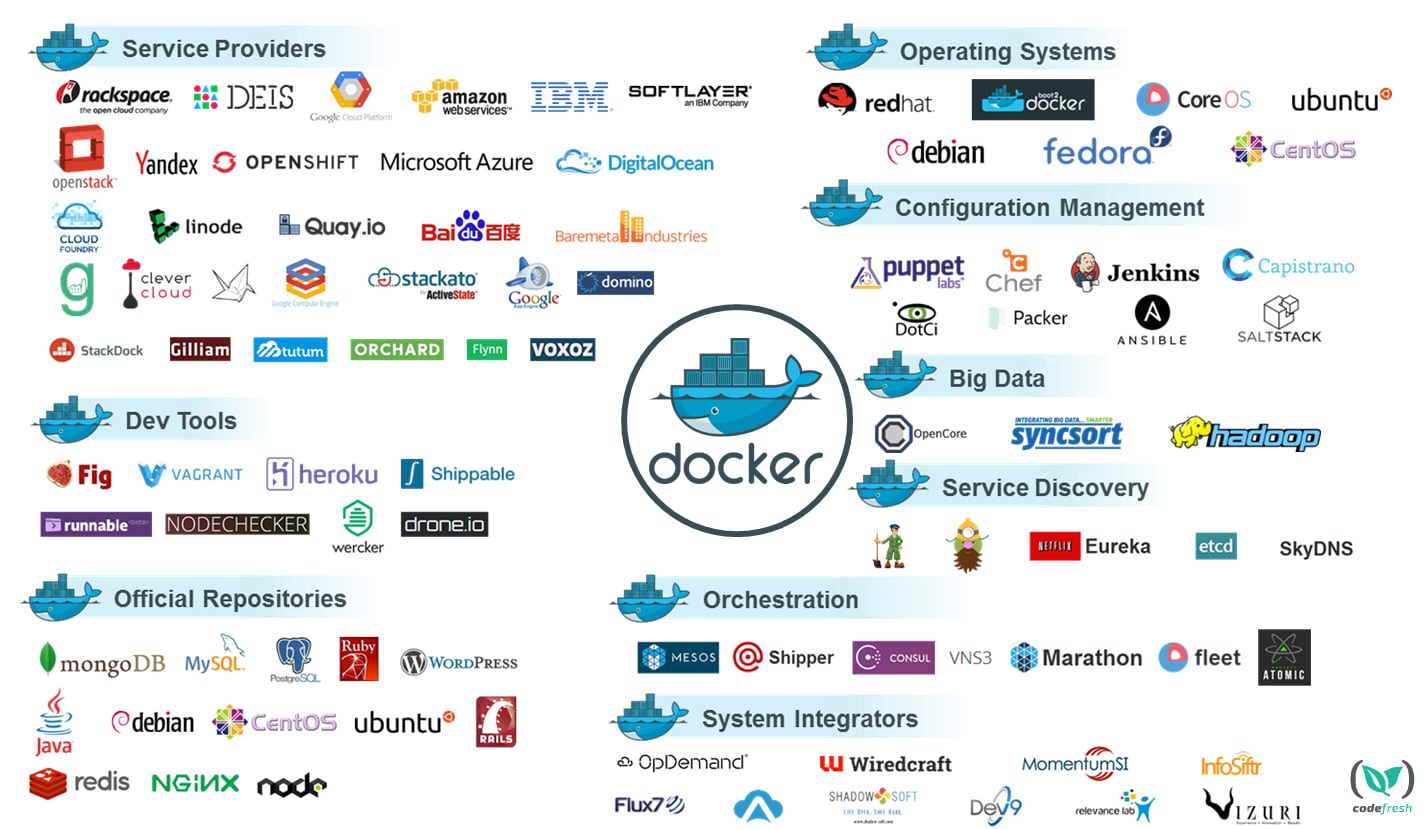

Ideas like stateless processes, disposable instances, and logs treated as event streams can bring a lot of benefits when done correctly. But, of course, there is a price to pay, mostly the initial push and the need to build a whole ecosystem around containers. We’re talking orchestration, service discovery and registration, new ways of monitoring and logging, and of course new security considerations. Don’t get this wrong: going with containers is not complicating things, it’s doing things differently. It’s changing the mindset, changing the culture, and adapting to new ways.

A portion of the vast Docker ecosystem
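To make the idea of disposable instances a little more concrete, here is a minimal Python sketch of a worker that finishes its current job and exits cleanly when asked to stop. The `GracefulWorker` class and its method names are hypothetical and exist only for illustration; the one Docker-specific fact relied on is that `docker stop` sends SIGTERM before falling back to SIGKILL.

```python
import signal
import threading


class GracefulWorker:
    """Processes jobs one by one until the process is asked to stop."""

    def __init__(self):
        self._stop = threading.Event()
        self.processed = 0

    def request_stop(self, signum=None, frame=None):
        # Signal handlers are called with (signum, frame); both are unused here.
        self._stop.set()

    def install_signal_handlers(self):
        # Must be called from the main thread of the process.
        signal.signal(signal.SIGTERM, self.request_stop)
        signal.signal(signal.SIGINT, self.request_stop)

    def run(self, jobs):
        # The stop flag is checked between jobs, so a job that has started
        # is always allowed to finish before the worker exits.
        for job in jobs:
            if self._stop.is_set():
                break
            job()
            self.processed += 1
        return self.processed
```

In a real service you would call `install_signal_handlers()` once at startup and keep any state that must survive outside the process, so that a replacement container can pick up where this one left off.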

Overall Performance

Is your application very CPU-intensive? Does it need a multi-core runtime? Then you have to consider the placement of your containers. You might dedicate a whole multi-core host to a single container, or, if you are optimizing resource usage, share a host among a few containers with strict runtime constraints on each.
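As a rough illustration of per-container runtime constraints, the sketch below assembles a `docker run` command line with CPU and memory limits. The helper name `docker_run_args` and the image name are made up for this example; `--cpus`, `--cpuset-cpus`, and `--memory` are standard `docker run` flags, but the values shown are arbitrary and should come from your own measurements.

```python
def docker_run_args(image, cpus=None, cpuset=None, memory=None):
    """Build an argument list for `docker run` with optional resource limits."""
    args = ["docker", "run", "--detach"]
    if cpus is not None:
        # Upper bound on CPU time, expressed as a number of cores (e.g. 1.5).
        args += ["--cpus", str(cpus)]
    if cpuset is not None:
        # Pin the container to specific cores, e.g. "0-1" or "0,2".
        args += ["--cpuset-cpus", cpuset]
    if memory is not None:
        # Hard memory limit, e.g. "512m" or "2g".
        args += ["--memory", memory]
    args.append(image)
    return args
```

The resulting list can be passed to `subprocess.run` as is. Building it as a list rather than a shell string avoids quoting problems when values come from configuration.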

Do you use the network heavily or need a specific topology? Then you should consider the network drivers that the Docker ecosystem provides. There is a decent variety of them, from the built-in overlay network, which uses VXLAN encapsulation, to alternatives like IPVLAN or Weave. Some of them will offer better performance, while others might offer better security. Since the introduction of SwarmKit, the overlay network has been the default for services running in swarm mode. It provides enough performance and security for most cases; extreme cases, however, will need alternative solutions.

Service Discovery, Configuration Management, and Orchestration

The nature of Docker containers is volatile in the sense that containers are treated as disposable. If you go against that nature, you end up making virtual machines out of containers. The larger the scale of an application, the greater the need for control. Scaling up is very tempting because of its sheer simplicity, but it also leads to a mess of containers flying around if the process is not tightly controlled. Orchestration tools help with the placement and tracking of your containers.

It’s also important to have service discovery alongside orchestration. Containers are designed to be faceless and nameless, so once you get a containerized application running you have to register its details somewhere. The bare minimum you need to register is the IP address, the ports, the names of the services running in the container, and health check information. Normally, one would use tools like Consul or etcd and leverage the service registration mechanisms they provide. You can also solve the problem of dynamic configuration by storing per-service configuration in the key-value store those tools provide.
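The registration details listed above map quite directly onto a Consul service definition. The following sketch builds such a payload and, optionally, sends it to a local Consul agent. It assumes an agent listening on `localhost:8500` and a `/health` endpoint exposed by the service; the function names, the service name, and the addresses are illustrative only.

```python
import json
import urllib.request


def consul_registration(name, address, port, health_path="/health", interval="10s"):
    """Build a service definition with an HTTP health check."""
    return {
        "ID": f"{name}-{address}-{port}",  # unique per running instance
        "Name": name,                      # logical service name used for lookups
        "Address": address,
        "Port": port,
        "Check": {
            "HTTP": f"http://{address}:{port}{health_path}",
            "Interval": interval,
        },
    }


def register(payload, agent_url="http://localhost:8500"):
    """Send the definition to the agent's service registration endpoint."""
    request = urllib.request.Request(
        f"{agent_url}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

A container entrypoint could call `register(consul_registration("orders", "10.0.0.5", 8080))` right after the application starts listening, and deregister on shutdown.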

Storage

Is your application truly stateless, yet generates a large number of read/write operations? Do you want to run a data store as a container? Then you need performant storage and a volume driver with minimal overhead. You are still left with the task of managing volumes, but that is solvable with some of the volume drivers. At the end of the day, as rightly stated in the official documentation, “no single driver is well-suited to every use case.” Since the Docker ecosystem has a wide variety of plugins, it’s advisable to research and do a proof of concept before settling on one in particular. And in the case of databases and data stores, for the sake of reliability, you might be better off with managed services like Amazon RDS, which can provide features like high availability and replication for you.

In the case of a stateful application (as most legacy applications tend to be), you will need persistent storage. And since containers are disposable, you have to make sure your persisted data will be accessible to new containers. That might mean moving volumes around your platform or leveraging the aforementioned plugins. A good example is re-attachable Amazon EBS volumes, which can outlive any single container. You also need to consider the possibility of scaling your application and understand how that affects the state it keeps, since there can now be multiple instances of the application interacting with that state.
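One small piece of this puzzle is making sure that whatever a container writes to a volume is never left half-written when the container is killed. The sketch below shows one common approach: write to a temporary file in the same directory and rename it into place. The `save_state` and `load_state` helpers are hypothetical, and the directory passed in is assumed to be a path inside a mounted volume.

```python
import json
import os
import tempfile


def save_state(state_dir, name, data):
    """Write JSON state so that readers never observe a partial file."""
    os.makedirs(state_dir, exist_ok=True)
    fd, tmp_path = tempfile.mkstemp(dir=state_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as tmp:
            json.dump(data, tmp)
            tmp.flush()
            os.fsync(tmp.fileno())  # push the bytes to disk before renaming
        # Renaming within one directory replaces the old file in a single step.
        os.replace(tmp_path, os.path.join(state_dir, name))
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_state(state_dir, name, default=None):
    """Read state left behind by a previous container, if there is any."""
    try:
        with open(os.path.join(state_dir, name)) as handle:
            return json.load(handle)
    except FileNotFoundError:
        return default
```

Note that this only protects against torn writes. If several instances write the same file, the last writer still wins, which is exactly the scaling concern raised above and usually calls for a proper data store instead.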

Treat Docker volumes as folders that are never gone

Security

Security is one of the most debated topics when it comes to Docker containers. Since containers run as processes on the host and share the host’s kernel, they can be seen as a potential vulnerability. Apart from hardening and securing the host on which containers run, you now have to think about profiling and properly isolating the containers themselves. The principle of least privilege is a good fit for containers, since Docker allows you to limit the capabilities granted to each one. You can go further by running your application inside the container as a non-root user and by mounting the filesystem read-only.

We can also limit resource consumption per container to mitigate attacks that target resource exhaustion. It’s also very important to make sure your Docker images come from a trusted source and undergo security scans. For example, it’s advisable to build on top of official base images from Docker Hub, which can be verified with Docker Content Trust. The bottom line is to follow a mix of the usual and container-specific procedures to make sure you stay out of the risk zone.
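The checks discussed in this section can be turned into a small audit over the JSON that `docker inspect` prints for a container. The sketch below is only an assumption-laden starting point: the `audit_container` function is hypothetical, the field names (`Config.User`, `HostConfig.Privileged`, `HostConfig.ReadonlyRootfs`, `HostConfig.CapDrop`, `HostConfig.Memory`) follow the inspect output as we understand it, and the rules are deliberately simplistic.

```python
def audit_container(inspect):
    """Return a list of least-privilege findings for one inspected container."""
    config = inspect.get("Config") or {}
    host = inspect.get("HostConfig") or {}
    findings = []

    user = config.get("User") or ""
    if user in ("", "0", "root") or user.startswith(("0:", "root:")):
        findings.append("runs as root")
    if host.get("Privileged"):
        findings.append("privileged mode enabled")
    if not host.get("ReadonlyRootfs"):
        findings.append("root filesystem is writable")
    dropped = {cap.upper() for cap in (host.get("CapDrop") or [])}
    if "ALL" not in dropped:
        findings.append("capabilities not dropped")
    if not host.get("Memory"):
        # A value of 0 (or a missing field) means no memory limit is set.
        findings.append("no memory limit")
    return findings
```

An empty list does not mean a container is secure; it only means none of these particular red flags were found.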

Monitoring and Logging

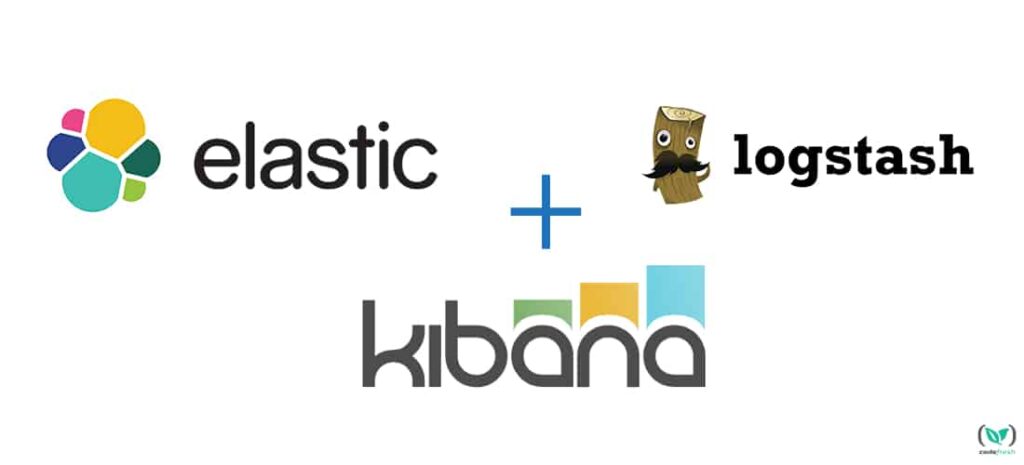

Monitoring and logging are just as important as the topics I mentioned before, and some might say they are even more critical. After all, how else are we supposed to debug failures or prevent crashes? They matter even more in a containerized world, since the bigger an application grows, the harder it becomes to keep track of resources. Not to mention that you shouldn’t have to open a shell in a container just to look up some logs. Assuming logging and monitoring solutions were already in place for your legacy product, it shouldn’t be hard to switch them to work with containers. Containers are more than ready to work with the ELK stack, for example. Docker offers a good number of logging drivers and allows you to ship your application’s output to a JSON file, Fluentd, Splunk, or plain old syslog. And those are only some of the options you have for logging.
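Whichever logging driver you pick, it collects what the container writes to its standard output and error streams, so the application side can stay very simple. Here is a minimal sketch of a logger that emits one JSON object per line to stdout using only the Python standard library. The `JsonFormatter` class and `build_logger` helper are illustrative names, and the choice of fields is an assumption rather than a required schema.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def build_logger(name, stream=None):
    """Create a logger that writes JSON lines to stdout (or a given stream)."""
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]   # replace any handlers left from earlier setup
    logger.setLevel(logging.INFO)
    logger.propagate = False      # avoid duplicate lines via the root logger
    return logger
```

Timestamps are left out on purpose here, since drivers such as `json-file` record the time of each line themselves; add one if your pipeline expects it inside the message.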

With regard to monitoring, there’s a lot that modern tools can offer. You can choose hosted paid solutions like Datadog or New Relic, or you can build your own solution based on tools like cAdvisor, Prometheus, and Grafana, which cover the same ground as the paid solutions and give you the necessary features like alerting and dashboards. It all depends on the amount of effort you want to put in. Either way, you can be sure everything is covered by the huge ecosystem that has grown around Docker recently.

ELK stack, a popular solution for logging
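If you go down the build-your-own monitoring route, your application will typically expose its own metrics for Prometheus to scrape alongside what cAdvisor reports about the container. The sketch below renders a gauge in the Prometheus text exposition format by hand, purely to show what that output looks like. The `render_gauge` helper and the metric name are made up, label values are not escaped, and in practice you would more likely reach for an existing client library.

```python
def render_gauge(name, help_text, samples):
    """Render gauge samples in the Prometheus text exposition format.

    `samples` is a list of (labels, value) pairs, where labels is a dict.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        # Labels are sorted so the output is stable between scrapes.
        label_str = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"
```

Serving this text from an HTTP endpoint such as `/metrics` is enough for a Prometheus server to start collecting it, after which Grafana can chart it.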

Epilogue

It is very tempting to just give in to the craze of ‘containerizing everything’, mostly because of the ease Docker has brought to the world of software engineering. There’s no right or wrong decision when it comes to innovation; there’s just a path of trial and error. We hope that this article helps you weigh the pros and cons so that you can make the right decision for your organization. And may the Docker force be with you!