Argo Rollouts is a Kubernetes controller that allows you to perform advanced deployment methods in a Kubernetes cluster. We have already covered several usage scenarios in the past, such as blue/green deployments and canaries. The Codefresh deployment platform also has native support for Argo Rollouts and even comes with UI support for them.

As more and more people adopt Argo Rollouts, we have seen organizations explore even more advanced scenarios in their own applications that are not supported out of the box:

- How to use Argo Rollouts for settings stored in config maps and not just for Docker images

- How to handle multiple microservices with Argo Rollouts (we’ll cover that in the next blog post)

In this series of blog posts, we will cover these extra requirements and explain some common approaches for solving them while still gaining all the benefits of progressive delivery.

How the Rollout Resource Works

In the simplest scenario, Argo Rollouts works by replacing the standard Kubernetes Deployment resource with a Rollout resource. Here is a quick example of a blue/green deployment:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-bluegreen
spec:
  replicas: 2
  revisionHistoryLimit: 2
  selector:
    matchLabels:
      app: rollout-bluegreen
  template:
    metadata:
      labels:
        app: rollout-bluegreen
    spec:
      containers:
      - name: rollouts-demo
        image: argoproj/rollouts-demo:blue
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
  strategy:
    blueGreen:
      activeService: rollout-bluegreen-active
      previewService: rollout-bluegreen-preview
      autoPromotionEnabled: false
```

The Rollout resource has the same fields as a Kubernetes Deployment, but its “strategy” section is extended with the blueGreen and canary strategies that describe how the rollout happens.
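For comparison, the same “strategy” section can describe a canary instead of a blue/green deployment. The following is a minimal sketch. The weights and pauses are illustrative values that are not part of this example, and it assumes no traffic router is configured. In that case the weights translate into replica ratios.

```yaml
# Sketch: a canary strategy in place of the blueGreen section above.
# All weights and durations are illustrative values.
strategy:
  canary:
    steps:
    - setWeight: 20     # scale the new version to about 20% of the replicas
    - pause: {}         # wait here until the rollout is promoted manually
    - setWeight: 60
    - pause:
        duration: 10m   # wait 10 minutes, then continue automatically
```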

This works great when your new application version is just a new container image. Your CI system will update the Rollout with the new image version and Argo Rollouts will handle everything for you.

Often, however, your application has other resources, such as configuration stored in config maps, and in some cases people want to apply blue/green and canary deployments to those resources as well. For example, a new application version might need not only changes in the source code (a new Docker image) but also a new configuration option (a new entry in the configmap).

How should you handle cases like this? At first glance, it doesn’t seem possible. A configmap is not part of a Rollout. Updating the configmap that the Rollout uses would actually apply the new settings to the old pods as well (as soon as an eviction or node event restarts them).

The naive approach would be to have a complex configmap that holds properties for all versions and have each version read its own fields. For example, you could have a configmap like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-settings
data:
  my-settings: "35"
  my-settings-new-version: "78"
```

The old version (let’s say blue color) reads the “my-settings” key as 35. Then for the new version (green color) you add a new key called “my-settings-new-version” and set it to 78. Old pods stay unaffected but you also need to modify the container of the new version to read from the new key.
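To make the extra work concrete, here is a minimal sketch of the change the naive approach forces on the green version’s container spec. The environment variable name MY_SETTINGS is hypothetical and only stands for whatever variable the application reads.

```yaml
# Sketch of the naive approach: the new (green) version must be edited to
# read a different key from the same shared configmap.
env:
- name: MY_SETTINGS                  # hypothetical variable read by the application
  valueFrom:
    configMapKeyRef:
      name: my-settings
      key: my-settings-new-version   # the blue version keeps reading "my-settings"
```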

This is cumbersome and hard to get right. It requires changes to the source code and extra cleanup steps, and it doesn’t scale when you have a large number of options.

There is a much better way 🙂

The hack: Kustomize configmap generators

To make Argo Rollouts work for configmaps, we need to manually replicate what Argo Rollouts already does for deployments: we need a way to have two configmaps present at the same time, each one holding the options for its respective version.

This can be achieved by using Configmap generators. Note that this feature is unrelated to Argo Rollouts. It is a built-in capability of Kustomize, which can work great for standard rolling deployments, and we can also exploit it with progressive delivery.

Here’s how it works: instead of keeping a static configmap in a YAML file, we instruct Kustomize to create the configmap for us on the fly. The important point is that Kustomize adds a unique suffix (a hash of the configmap contents) to the name of the configmap it creates, allowing us to have one configmap per deployment version.

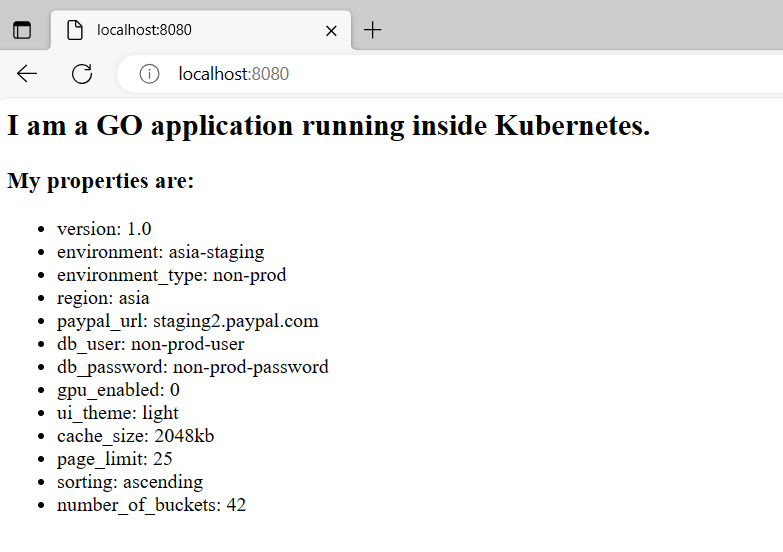

Let’s look at a real example. We will use the application mentioned in the ever-popular GitOps promotion blog post. This is a very simple Go application that prints all the settings it gets from a configmap.

Here is the deployment file:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-example-app
spec:
  replicas: 1
  revisionHistoryLimit: 2
  selector:
    matchLabels:
      app: configmaps-example
  template:
    metadata:
      labels:
        app: configmaps-example
    spec:
      containers:
      - name: my-container
        image: docker.io/kostiscodefresh/configmaps-argo-rollouts-example:latest
        env:
        - name: PAYPAL_URL
          valueFrom:
            configMapKeyRef:
              name: my-settings
              key: PAYPAL_URL
        - name: DB_USER
          valueFrom:
            configMapKeyRef:
              name: my-settings
              key: DB_USER
        - name: UI_THEME
          valueFrom:
            configMapKeyRef:
              name: my-settings
              key: UI_THEME
        # [...snip...]
```

Right now the application reads all the settings from a real configmap named “my-settings”. Let’s replace that configmap file with a configmap generator in our kustomization file:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# resources that Kustomize will process
resources:
- deployment.yml
- service.yml
# generate the my-settings ConfigMap instead of keeping a static file
configMapGenerator:
- name: my-settings
  literals:
  - APP_VERSION=1.0
  - ENV="asia-staging"
  - ENV_TYPE="non-prod"
  - REGION="asia"
  - PAYPAL_URL="staging2.paypal.com"
  - DB_USER="non-prod-user"
  - DB_PASSWORD="non-prod-password"
  - GPU_ENABLED="0"
  - UI_THEME="light"
  - CACHE_SIZE="2048kb"
  - PAGE_LIMIT="25"
  - SORTING="ascending"
  - N_BUCKETS="42"
```

There are many options for defining the source of the values. In this simple example, we provide them as literals in the file itself.
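As a sketch of two other sources, a configmap generator can also read its values from files instead of literals. The file names settings.env and app.properties are hypothetical. The hash suffix is computed from the resulting data, so editing these files changes the generated name just like editing literals does.

```yaml
configMapGenerator:
- name: my-settings
  # one key per KEY=VALUE line in a dotenv-style file (hypothetical file name)
  envs:
  - settings.env
  # the whole file becomes a single key named after the file (hypothetical file name)
  files:
  - app.properties
```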

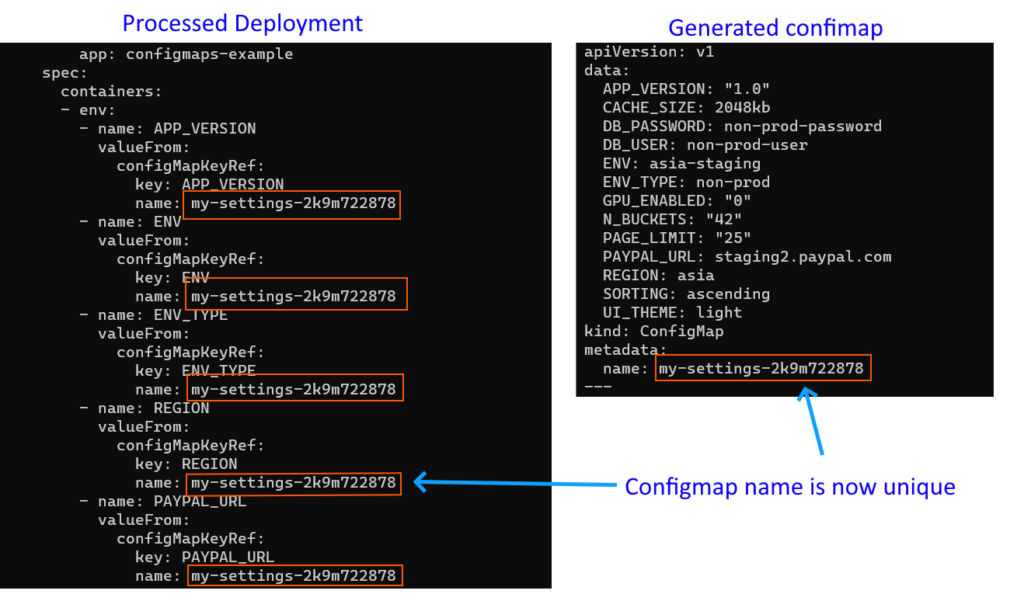

Now let’s run “kustomize build” and see what it produces.

Kustomize has not only created a configmap for us but also given it a unique name. At the same time, the generated name has been injected into the processed deployment, so everything maps correctly.
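The relevant parts of the output look roughly like the trimmed sketch below. “&lt;hash&gt;” is a placeholder for the content-based suffix Kustomize generates, and the real value in your output will differ.

```yaml
# Trimmed sketch of the "kustomize build" output; "<hash>" is a placeholder.
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-settings-<hash>
data:
  # one entry per literal: APP_VERSION, ENV, PAYPAL_URL, ...
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-example-app
spec:
  template:
    spec:
      containers:
      - name: my-container
        env:
        - name: PAYPAL_URL
          valueFrom:
            configMapKeyRef:
              name: my-settings-<hash>  # rewritten by Kustomize to match the ConfigMap
              key: PAYPAL_URL
```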

That’s it.

We can now deploy the application:

```shell
cd manifests/plain
kustomize build . | kubectl apply -f -
```

And everything will work as expected.

Using a configmap generator with Argo Rollouts

Now that we have seen how the configmap generator works, we will apply the same trick to Argo Rollouts. First, we need to convert our deployment to a Rollout:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: my-example-rollout
spec:
  replicas: 3
  strategy:
    blueGreen:
      activeService: configmaps-example-active
      previewService: configmaps-example-preview
      autoPromotionEnabled: false
  revisionHistoryLimit: 2
  selector:
    matchLabels:
      app: configmaps-example
  template:
    metadata:
      labels:
        app: configmaps-example
    spec:
      containers:
      - name: my-container
        image: docker.io/kostiscodefresh/configmaps-argo-rollouts-example:latest
        env:
        - name: APP_VERSION
          valueFrom:
            configMapKeyRef:
              name: my-settings
              key: APP_VERSION
        - name: ENV
          valueFrom:
            configMapKeyRef:
              name: my-settings
              key: ENV
        # [...snip...]
```

We used a blue/green deployment this time.
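The blue/green strategy needs the two Services named in activeService and previewService to exist. Their manifests (service-active.yml and service-preview.yml) are not shown in this post. Here is a minimal sketch of the active one. It assumes the application listens on port 8080, and it uses Service port 8080 to match the port-forward commands used later. Argo Rollouts adds its own rollouts-pod-template-hash entry to each Service selector at runtime, so the selector only needs the app label.

```yaml
# Sketch of service-active.yml; service-preview.yml would differ only in
# its name (configmaps-example-preview). Ports are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: configmaps-example-active
spec:
  selector:
    app: configmaps-example   # Argo Rollouts adds rollouts-pod-template-hash here
  ports:
  - port: 8080                # port targeted by kubectl port-forward
    targetPort: 8080          # assumed container port of the example app
```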

Let’s verify that the configmap generator still works as expected. If you run “kustomize build” in the folder with the rollout, you will find that even though the configmap is created with its unique suffix, the Rollout still references the plain “my-settings” name.

This happens because Kustomize is not aware of Rollouts, as they are a custom resource rather than a built-in Kubernetes resource. We need to teach Kustomize about Rollouts by updating our kustomization file:

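```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# resources that Kustomize will process
resources:
- example-rollout.yml
- service-active.yml
- service-preview.yml
# teach Kustomize which Rollout fields reference ConfigMaps and other objects
configurations:
- https://argoproj.github.io/argo-rollouts/features/kustomize/rollout-transform.yaml
# the configMapGenerator section from the previous example stays unchanged
```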
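The remote file under “configurations” is a Kustomize transformer configuration. It tells Kustomize which Rollout fields hold references to other objects. As a rough sketch of what matters for this example, a trimmed-down local equivalent might look like the following. The file name rollout-configmap-refs.yaml is hypothetical. The published rollout-transform.yaml covers many more fields, so it remains the better choice in practice.

```yaml
# rollout-configmap-refs.yaml (hypothetical name), listed under
# "configurations:" instead of the remote URL. It maps ConfigMap names
# into Rollout fields so generated (hash-suffixed) names are substituted.
nameReference:
- kind: ConfigMap
  version: v1
  fieldSpecs:
  - path: spec/template/spec/containers/env/valueFrom/configMapKeyRef/name
    kind: Rollout
  - path: spec/template/spec/containers/envFrom/configMapRef/name
    kind: Rollout
  - path: spec/template/spec/volumes/configMap/name
    kind: Rollout
```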

Now, if you try again, you will see that everything works as expected.

Progressive delivery for configuration

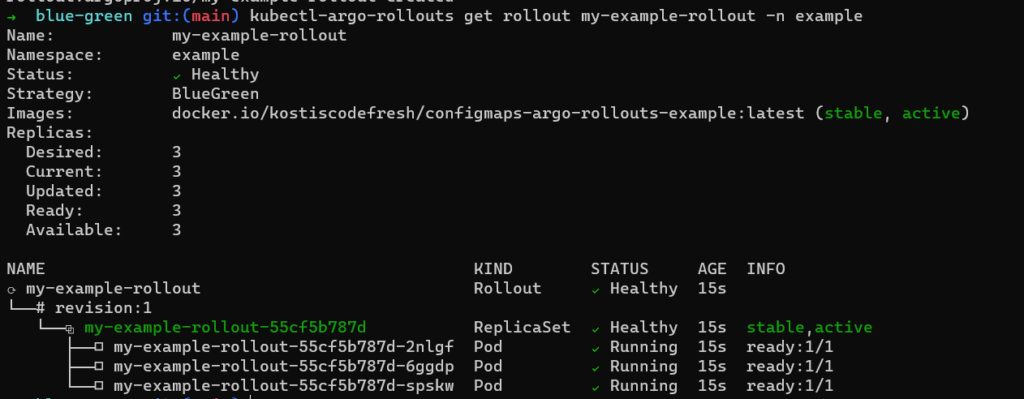

Let’s deploy the first version of the rollout:

```shell
cd manifests/blue-green
kustomize build . | kubectl apply -f -
kubectl-argo-rollouts get rollout my-example-rollout -n example
```

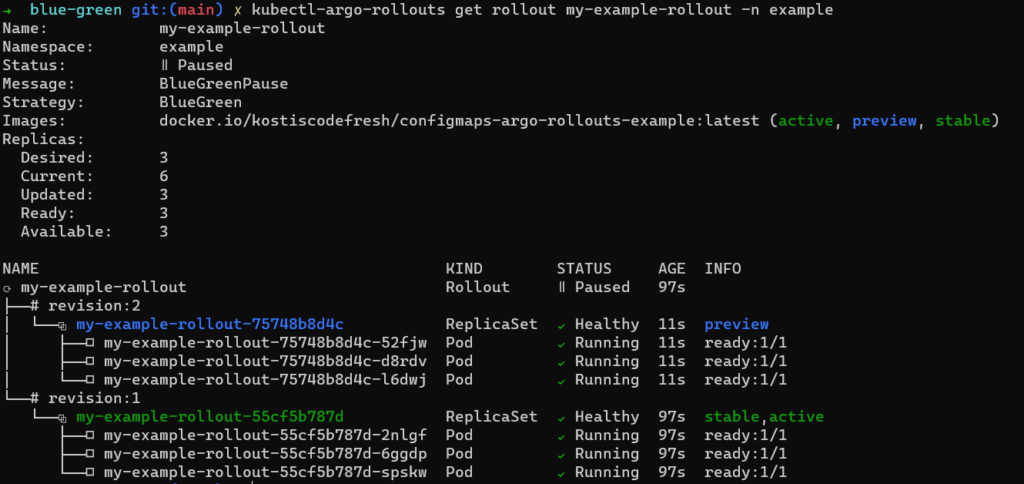

For the second version, we will change some values in the configmap generator to simulate an update in configuration. As an example, we will change the values of the PayPal URL, the cache size and the number of buckets (all dummy values).
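As an illustration, the edited generator could look like this. The new values below are made up for the sketch and are not necessarily the ones used in the screenshots.

```yaml
configMapGenerator:
- name: my-settings
  literals:
  # other literals unchanged from the first version
  - PAYPAL_URL="staging3.paypal.com"   # changed (illustrative value)
  - CACHE_SIZE="1024kb"                # changed (illustrative value)
  - N_BUCKETS="24"                     # changed (illustrative value)
```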

After repeating the commands above, we finally have a rollout with two versions:

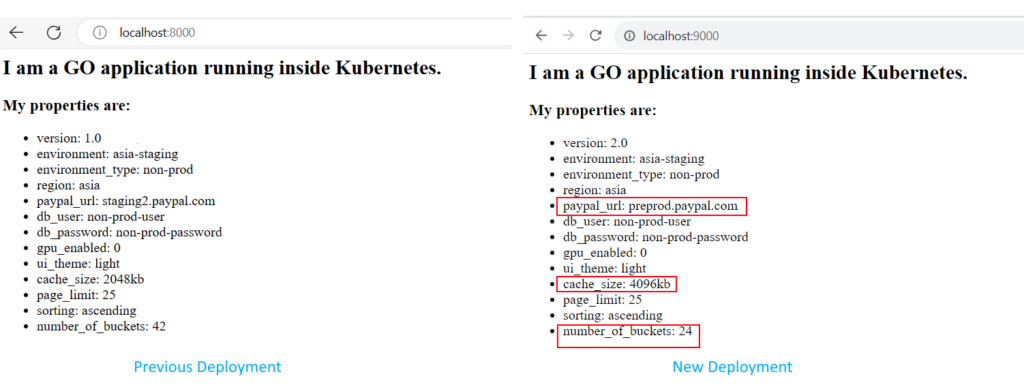

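Let’s see how the application looks. Forward a local port to each Service (run each command in its own terminal, since kubectl port-forward keeps running in the foreground), then open localhost:8000 for the active version and localhost:9000 for the preview version: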

```shell
kubectl port-forward svc/configmaps-example-active 8000:8080 -n example
kubectl port-forward svc/configmaps-example-preview 9000:8080 -n example
```

Success!

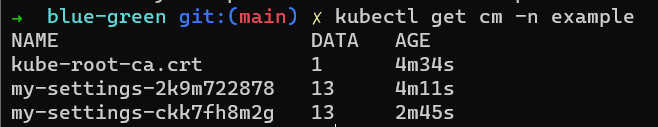

We now have two versions deployed, each one with its own configmap and settings. You can even see both configmaps in the cluster:

```shell
kubectl get cm -n example
```

You can now continue the rollout like any other Argo Rollout: promote it or abort it, knowing that each ReplicaSet has its own configuration.

Bonus: Performing deployments with just configuration changes

In the initial problem statement, we explained how configmap generators are useful when you have changes in both the Docker image and the configmap. Notice, however, that every configuration change produces a new hash suffix, and therefore a new configmap name in the Rollout’s pod template. Because of this, you don’t actually need to change the Docker image tag to tell Argo Rollouts that a new rollout must take place.
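To make this concrete, here is a trimmed sketch of the part of the rendered Rollout that differs after a configuration-only change. The image stays the same and only the referenced configmap name moves to a new suffix. That alone changes the pod template and starts a new revision. “&lt;new-hash&gt;” and “&lt;old-hash&gt;” are placeholders.

```yaml
# Trimmed sketch of the rendered Rollout after a configuration-only change.
spec:
  template:
    spec:
      containers:
      - name: my-container
        # image tag unchanged between the two versions
        image: docker.io/kostiscodefresh/configmaps-argo-rollouts-example:latest
        env:
        - name: APP_VERSION
          valueFrom:
            configMapKeyRef:
              name: my-settings-<new-hash>  # previously my-settings-<old-hash>
              key: APP_VERSION
```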

This means you can use Argo Rollouts for only configuration changes. You can keep the same docker image and perform blue/green and canaries just by changing the configuration 🙂

If you have ever wanted to fine-tune your application settings in a production Kubernetes cluster without creating a new container tag, you will find this technique very useful.

If you want to learn more about Argo Rollouts, check the documentation. In the next article, we will look at an even more advanced scenario with multi-service deployments and also see how Codefresh can orchestrate everything instead of you having to run kubectl commands manually.
